Scratchpad unit

Revision as of 11:09, 8 May 2019

Like existing GPU-like core projects, nu+ provides limited hardware support for shared scratchpad memory. The nu+ core provides a configurable, GPU-like scratchpad memory (SPM) with built-in support for bank remapping. Typically, scratchpad memories are organized in multiple independently accessible memory banks. Therefore, if all memory accesses request data mapped to different banks, they can be handled in parallel (at best, with L memory banks, L different gather/scatter accesses are fulfilled in one clock cycle). Bank conflicts occur whenever multiple requests are made for data within the same bank. If N parallel memory accesses request the same bank, the hardware serializes them, causing an N-way conflict and an N-times slowdown. The nu+ SPM dynamic bank remapping mechanism, driven by the access pattern of the specific kernel, minimizes bank conflicts.

Interface and operation

Interface

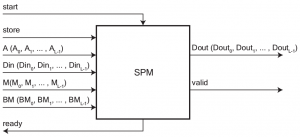

As shown in the figure above, the I/O interface of the SPM has several control and data signals. Because the processor performs scattered memory accesses, all data signals, both inputs and outputs, are vectors, and every index identifies the corresponding lane of the core unit. The SPM has the following data inputs for each lane:

- A: address of the memory location to be accessed;
- Din: word containing the data to be written (in the case of a scatter operation);
- BM[0..W-1]: W-bit-long bitmask to enable/disable the writing of each byte of the Din word;
- M: bit asserted if the lane will participate in the next instruction execution.

As for outputs, the SPM has a single data output for each lane:

- Dout: data stored at the address contained in A.

The store signal is an input control signal. If store is high, the requested instruction is a scatter operation, otherwise it is a gather operation. The value of the store signal is the same for all lanes. Because the latency is variable, some control signals are needed to implement a handshaking protocol between the control logic of the SIMD core (owner of the CUDA Thread Block) and the SPM control logic. These signals are:

- start: input signal asserted by the core control unit to request the execution of an instruction;
- ready: output signal asserted by the SPM when it can process a new instruction;
- valid: output signal asserted by the SPM when the execution of an instruction is finished and its outputs are the final outcome.

FSM model

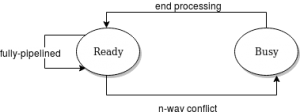

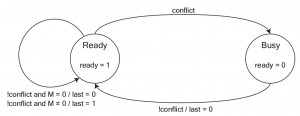

As described above, the SPM takes L different addresses as input to support scattered memory access (and its multi-banking implementation). It can be regarded as a finite state machine with the following two states:

- Ready: the SPM is ready to accept a new memory request.
- Busy: the SPM cannot accept any request as it is still processing the previous one.

In the Ready state the ready signal is high and no request is being issued. In the Busy state all input requests are ignored. When the loaded instruction involves conflicting addresses, the SPM goes into the Busy state and drives the ready signal low. At the end of the memory access serialization, the SPM returns to the Ready state. While the SPM is still executing another instruction, the ready signal is low and the incoming instruction is not accepted yet. If an instruction is free of conflicts, the SPM executes it without entering the Busy state, so consecutive conflict-free instructions can be executed without interruption, just as if the SPM were a fully pipelined execution unit.
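The Ready/Busy behavior can be illustrated with a small behavioral model. This is only a sketch in Python, not the RTL: the class name `SpmFsmModel`, the `conflict_ways` argument and the exact cycle accounting (an n-way conflict occupies the accepting cycle plus n-1 Busy cycles) are assumptions made for illustration.

```python
class SpmFsmModel:
    """Toy two-state (Ready/Busy) model of the SPM handshake.

    Hypothetical model: the cycle accounting is an assumption,
    not taken from the nu+ sources.
    """

    def __init__(self):
        # Number of serialized accesses still to be issued.
        self.busy_cycles_left = 0

    @property
    def ready(self):
        # The SPM is Ready when nothing is left to serialize.
        return self.busy_cycles_left == 0

    def step(self, start, conflict_ways=1):
        """Advance one clock cycle.

        start         -- the core asks to execute an instruction
        conflict_ways -- n for an n-way conflict (1 = conflict-free)
        Returns True if the request was accepted in this cycle.
        """
        if not self.ready:
            # Busy: the incoming request is ignored.
            self.busy_cycles_left -= 1
            return False
        if start:
            # The first conflict-free subset is served right away;
            # the remaining n-1 subsets keep the SPM Busy.
            self.busy_cycles_left = conflict_ways - 1
            return True
        return False
```

In this model a stream of conflict-free requests is accepted on every cycle, while a 3-way conflict makes the next two requests wait, which mirrors the fully pipelined versus serialized behavior described above.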

Customizable features

The SPM is fully configurable. In particular, the number of lanes of the SIMD core unit (L), the number of memory banks (B), the number of entries contained in a bank (D) and the number of bytes that make up an entry in a memory bank (W) can be customized simply by changing the value of the respective parameters in the SPM configuration file.

`define SM_PROCESSING_ELEMENTS      16   //L
`define SM_ENTRIES                  1024 //D
`define SM_MEMORY_BANKS             16   //B
`define SM_BYTE_PER_ENTRY           4    //W
`define SM_ENTRY_ADDRESS_LEN        $clog2(`SM_ENTRIES)        //entry address length
`define SM_MEMORY_BANK_ADDRESS_LEN  $clog2(`SM_MEMORY_BANKS)   //memory bank address length
`define SM_BYTE_ADDRESS_LEN         $clog2(`SM_BYTE_PER_ENTRY) //byte address length
`define SM_ADDRESS_LEN              (`SM_ENTRY_ADDRESS_LEN + `SM_MEMORY_BANK_ADDRESS_LEN + `SM_BYTE_ADDRESS_LEN) //address length
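As a quick check of how the derived widths follow from the parameters, the same arithmetic can be reproduced in a few lines of Python. The helper names `clog2` and `spm_address_widths` are hypothetical; `clog2` is a software stand-in for the SystemVerilog `$clog2` system function.

```python
def clog2(n):
    """Ceiling of log2(n) for n >= 1, like SystemVerilog $clog2."""
    return (n - 1).bit_length()


def spm_address_widths(entries=1024, banks=16, bytes_per_entry=4):
    """Derive the address field widths from D, B and W."""
    entry_len = clog2(entries)          # SM_ENTRY_ADDRESS_LEN
    bank_len = clog2(banks)             # SM_MEMORY_BANK_ADDRESS_LEN
    byte_len = clog2(bytes_per_entry)   # SM_BYTE_ADDRESS_LEN
    return {
        "entry": entry_len,
        "bank": bank_len,
        "byte": byte_len,
        "address": entry_len + bank_len + byte_len,  # SM_ADDRESS_LEN
    }


widths = spm_address_widths()
print(widths)
```

With the default values shown in the configuration (D = 1024, B = 16, W = 4) the address is 10 + 4 + 2 = 16 bits long.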

Architecture

Overview

The scratchpad unit is composed of the scratchpad memory and a FIFO request queue. The unit receives its inputs from the operand fetch unit and sends its outputs to the rollback unit and the writeback unit. The size of the FIFO request queue is customizable. A generic request enqueued and forwarded to the SPM is organized as follows:

typedef struct packed {

logic is_store;

sm_address_t [`SM_PROCESSING_ELEMENTS - 1 : 0] addresses;

sm_data_t [`SM_PROCESSING_ELEMENTS - 1 : 0] write_data;

sm_byte_mask_t [`SM_PROCESSING_ELEMENTS - 1 : 0] byte_mask;

logic [`SM_PROCESSING_ELEMENTS - 1 : 0] mask;

logic [`SM_PIGGYBACK_DATA_LEN - 1 : 0] piggyback_data;

} scratchpad_memory_request_t;

Before the requests are queued, the unit decodes the type of operation and generates the lane bitmask from the opcode (to determine which lanes are involved). Afterwards, the control logic checks the alignment of the incoming request. Both scalar and vector operations are supported, on byte-, half-word- and word-aligned addresses. The alignment is checked by the following lines of code:

assign is_word_aligned [lane_idx]     = !( |( effective_addresses[lane_idx][1 : 0] ) );
assign is_halfword_aligned [lane_idx] = !effective_addresses[lane_idx][0];

Then, the following code determines whether the access is misaligned, based on the current operation, the alignment required by the memory access and the lane mask:

assign is_misaligned = ( is_word_op     && ( |( ~is_word_aligned     & mask[`HW_LANE - 1 : 0] ) ) ) ||
                       ( is_halfword_op && ( |( ~is_halfword_aligned & mask[`HW_LANE - 1 : 0] ) ) );
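The effect of the two expressions above can be reproduced with a short Python sketch. The function name `is_misaligned` and the `op_size` parameter (access size in bytes: 1, 2 or 4) are illustrative assumptions; only active lanes contribute to the result, as in the masked reduction of the original code.

```python
def is_misaligned(addresses, mask, op_size):
    """Return True if any active lane has an address that is not
    a multiple of the access size (1 = byte, 2 = half-word, 4 = word).
    """
    for address, active in zip(addresses, mask):
        # Inactive lanes are masked out and can never raise the flag.
        if active and address % op_size != 0:
            return True
    return False


# Lane 2 uses address 6, which is half-word aligned but not word aligned.
addresses = [0, 4, 6]
print(is_misaligned(addresses, [True, True, True], op_size=4))
print(is_misaligned(addresses, [True, True, False], op_size=4))
print(is_misaligned(addresses, [True, True, True], op_size=2))
```

Byte accesses are always aligned, so with `op_size=1` the function returns False for any address.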

In case of a misaligned access or an out-of-memory address, the unit enables the Rollback handler, which launches the corresponding trap.

always_ff @ ( posedge clk, posedge reset )
    if ( reset )
        spm_rollback_en <= 1'b0;
    else
        spm_rollback_en <= instruction_valid & ( is_misaligned | is_out_of_memory ); //Rollback handler enabled

always_ff @ ( posedge clk ) begin
    spm_rollback_pc        <= `SPM_ADDR_MISALIGN_ISR; //location of the corresponding trap
    spm_rollback_thread_id <= opf_inst_scheduled.thread_id;
end

If an instruction is aligned, a new scratchpad_memory_request_t instance is created and filled in according to the operation type. The new request is then enqueued in the FIFO request queue, but only if the instruction is valid and no rollback is occurring. When the SPM is in the Ready state (the sm_ready signal is high) and the FIFO queue is not empty, the oldest request is dequeued and passed to the SPM. At the end of the SPM execution (when the sm_valid signal is high), the last phase outputs the results.
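The enqueue and dequeue conditions can be summarized with a small Python model. It is a sketch under stated assumptions: the class name `RequestQueueModel`, the `depth` parameter and the choice of refusing a request when the queue is full are hypothetical, since the text does not describe the full-queue behavior.

```python
from collections import deque


class RequestQueueModel:
    """Toy model of the FIFO request queue in front of the SPM."""

    def __init__(self, depth=4):
        self.depth = depth      # assumed queue size (customizable)
        self.fifo = deque()

    def try_enqueue(self, request, instruction_valid, rollback):
        """Enqueue only valid instructions that did not cause a rollback.

        Assumption: a full queue simply refuses the request.
        """
        if instruction_valid and not rollback and len(self.fifo) < self.depth:
            self.fifo.append(request)
            return True
        return False

    def try_dequeue(self, sm_ready):
        """Hand the oldest request to the SPM when it is Ready."""
        if sm_ready and self.fifo:
            return self.fifo.popleft()
        return None
```

Requests leave the queue in arrival order, and nothing is dequeued while the SPM is Busy.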

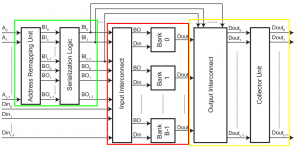

Stage 1

The first stage (in the green box in the figure above) carries out conflict detection and serialization of conflicting memory requests. If the SPM is in the Ready state, it carries out the incoming requests. If a conflict is detected while the SPM is in the Ready state, the SPM moves to the Busy state and serializes the conflicting requests. If the SPM is in the Busy state, it does not accept inputs until the last serialized request is served.

Address Remapping Unit

The Address Remapping Unit computes in parallel the bank index and the bank offset for each of the L memory addresses coming from the processor lanes. The bank index (BI[0..L-1] in the figure above) is the index of the bank to which the address is mapped. The bank offset (BO[0..L-1] in the figure above) is the displacement of the word within the bank. Furthermore, the behavior of the Address Remapping Unit can be changed at runtime in order to change the relationship between addresses and banks, allowing the adoption of the mapping strategy that best suits the executed workload. The Address Decode Unit is the component that actually performs the decoding for the selected mapping strategy. The cyclic-mapping strategy is the only one currently supported and is very easy to implement: it assigns the first word to the first memory bank, the second word to the second memory bank and so on, wrapping around every B words (where B is the number of memory banks), so that bank indexes B-1 and 0 are treated as consecutive. For the cyclic-mapping strategy, indexes and offsets are obtained simply by splitting the address as follows.

assign bank_offset = address[`SM_ADDRESS_LEN - 1 -: `SM_ENTRY_ADDRESS_LEN ];
assign bank_index  = address[`SM_BYTE_ADDRESS_LEN +: `SM_MEMORY_BANK_ADDRESS_LEN ];
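To make the field split concrete, the cyclic mapping can be mimicked in Python with shifts and masks. This is an illustrative sketch: the function name `decode_cyclic` is hypothetical, and the code assumes that D, B and W are powers of two, as in the default configuration.

```python
def decode_cyclic(address, entries=1024, banks=16, bytes_per_entry=4):
    """Split a byte address into (bank_index, bank_offset).

    Layout, from most to least significant bits:
    | bank offset | bank index | byte offset |
    Assumes entries, banks and bytes_per_entry are powers of two.
    """
    byte_len = (bytes_per_entry - 1).bit_length()
    bank_len = (banks - 1).bit_length()
    bank_index = (address >> byte_len) & (banks - 1)
    bank_offset = (address >> (byte_len + bank_len)) & (entries - 1)
    return bank_index, bank_offset


# Consecutive words go to consecutive banks and wrap around after B words.
for word in (0, 1, 15, 16, 17):
    print(word, decode_cyclic(word * 4))
```

With 16 banks and 4-byte entries, words 0 to 15 land in banks 0 to 15 at offset 0, and word 16 wraps to bank 0 at offset 1.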

Serialization Logic Unit

The Serialization Logic Unit performs the conflict detection and the serialization of the conflicting requests. It implements an iterative algorithm that defines, at every clock cycle, a set of non-conflicting pending requests to be executed. Whenever an n-way conflict is detected, the Serialization Logic Unit puts the SPM in the Busy state and splits the requests into n conflict-free requests issued serially over n clock cycles, causing an n-times slowdown. When the last request is issued, the Serialization Logic Unit puts the SPM back in the Ready state. Notice that the Serialization Logic Unit does not treat multiple read accesses to the same address as a conflict, because in this case a broadcast mechanism is activated. This broadcast mechanism provides an efficient way to satisfy multiple read requests for the same word.

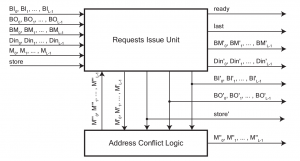

Among other things, as shown in the figure above, the Serialization Logic Unit takes as input the L-bit-long mask (M in the figure above) identifying the lanes to serve. At every clock cycle, it keeps track of the requests to be served in that cycle and of those to be postponed to the next cycle by defining three masks of L bits:

- M': pending requests' mask;
- M'': mask of non-conflicting pending requests to serve in the current clock cycle;
- M''': mask of still-conflicting pending requests to postpone.
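The evolution of the three masks over the clock cycles can be sketched as a loop in Python. This is a simplified behavioral model, not the hardware algorithm itself: the function name `serialize` is hypothetical and the broadcast mechanism is deliberately left out, so two lanes hitting the same bank always conflict here.

```python
def serialize(bank_indexes, mask):
    """Return the list of M'' masks issued cycle by cycle.

    bank_indexes -- bank targeted by each lane
    mask         -- M, the lanes that take part in the instruction
    Simplification: no broadcast, lower lane index = higher priority.
    """
    pending = list(mask)                      # M'
    issued = []
    while any(pending):
        served_banks = set()
        serve = [False] * len(pending)        # M''
        for lane, active in enumerate(pending):
            if active and bank_indexes[lane] not in served_banks:
                served_banks.add(bank_indexes[lane])
                serve[lane] = True
        issued.append(serve)
        # M''' becomes the pending mask of the next cycle.
        pending = [p and not s for p, s in zip(pending, serve)]
    return issued


# Lanes 0, 1 and 2 all target bank 0: a 3-way conflict, so 3 cycles.
cycles = serialize([0, 0, 0, 1], [True, True, True, True])
print(len(cycles))
```

The number of returned masks is the number of clock cycles spent on the instruction, which equals n for an n-way conflict and 1 for a conflict-free access.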

The Serialization Logic Unit is composed of two main components: the Request Issue Unit and the Address Conflict Logic.

Request Issue Unit

The Request Issue Unit stores information about requests throughout the serialization process.

The Request Issue Unit keeps the information on a set of requests in a set of internal registers for as long as it takes to satisfy it. When the unit is in the Ready state, it selects the bitmask received from the SPM (M in the figure above, or input_pending_mask in the snippet below) as the pending requests' mask (M') and saves the incoming data in its internal registers. When the unit is in the Busy state, it rejects the input mask from the SPM, the internal registers are disabled and a multiplexer outputs the data previously saved in them. The unit remains in the Busy state and uses the still-conflicting pending requests' mask (M''') received from the Address Conflict Logic as the pending requests' mask (M') until it receives an all-zero bitmask, at which point the serialization process comes to an end. The following code shows the output decoder based on the current state of the unit:

always_comb begin
    case (current_state)
        READY : begin
            output_selection       = 1'b0;
            input_reg_enable       = 1'b1;
            ready                  = 1'b1;
            output_is_last_request = !conflict && (|input_pending_mask != 0);
        end
        ISSUING_REQUESTS : begin
            output_selection       = 1'b1;
            input_reg_enable       = 1'b0;
            ready                  = 1'b0;
            output_is_last_request = !conflict;
        end
    endcase
end

The unit moves to the Busy state whenever it detects a conflict.

assign conflict = (|input_still_pending_mask != 0);

The output_is_last_request signal is asserted in the clock cycle in which the whole set of requests of an instruction has been satisfied. The Collector Unit uses this signal to validate the collection of the results of a block of serialized requests.

Address Conflict Logic

The Address Conflict Logic analyzes the mask of pending requests and determines a subset of non-conflicting requests that can be satisfied in the current clock cycle. The still-conflicting requests are obtained with a bitwise AND between the pending requests' mask and the one's complement of the mask of requests satisfied during the current clock cycle. They are then sent back to the Request Issue Unit according to the following code:

assign still_pending_mask = ~satisfied_mask & pending_mask;

The unit assigns a priority to each request, then selects for execution the highest-priority request together with the pending requests that do not point to the same memory bank as a higher-priority one. The priority depends on the lane index (requests from lane 0 have the highest priority, and so on) and the evaluation takes just one clock cycle. The Address Conflict Logic is composed of the following parts:

- Conflict Detection: it builds the conflicting requests' mask by comparing the bank indexes of pending requests. If a request is pending and collides with a higher-priority request, the conflicts' mask has a high bit in the position of the lower-priority lane. It is worth noting that all comparators work in parallel.

generate
    for (i = 0; i < `SM_PROCESSING_ELEMENTS; i++) begin
        for (j = i + 1; j < `SM_PROCESSING_ELEMENTS; j++)
            // a conflict is a collision on the same bank, so bank indexes are compared
            assign conflict_matrix[j][i] = ((bank_indexes[i] == bank_indexes[j]) && pending_mask[i]);
    end
endgenerate

- Broadcast Selection: it implements the broadcasting mechanism, useful when several lanes read the same word. It elects the address of the highest-priority pending request as the broadcast address. Then it compares the bank indexes and the bank offsets of the pending requests with those of the elected broadcast address and sets a high bit in the position of every lane that refers to the same address. Since broadcasting only makes sense for read instructions, the comparators' outputs are ANDed with ¬store (which is high for a read operation) to generate the broadcast mask.

generate
    for (i = 0; i < `SM_PROCESSING_ELEMENTS; i++)
        assign broadcast_mask[i] = ((bank_indexes[i] == broadcast_bank_index) && (bank_offsets[i] == broadcast_bank_offset) && !is_store);
endgenerate

- Decision Logic: it determines the mask of the requests to be processed in the current clock cycle, starting from the conflicts' mask and the broadcast mask. A request can be executed immediately only if it does not collide with any higher-priority request or if it reads from the broadcast address. The requests to serve are selected according to the following code:

generate
    for (i = 0; i < `SM_PROCESSING_ELEMENTS; i++)
        assign satisfied_mask[i] = pending_mask[i] && (broadcast_mask[i] || !conflicts_mask[i]);
endgenerate
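The three parts listed above can be combined into a single-cycle behavioral model in Python. It is only a sketch: the function name `address_conflict_logic` is hypothetical, and the reduction of the conflict matrix into the conflicts' mask (a lane conflicts if any pending lower-index lane targets the same bank) is an assumption, since that step is not shown in the snippets.

```python
def address_conflict_logic(bank_indexes, bank_offsets, pending_mask, is_store):
    """One evaluation of the Address Conflict Logic.

    Returns (satisfied_mask, still_pending_mask).
    Lower lane index means higher priority.
    """
    lanes = range(len(pending_mask))

    # Conflict Detection: collision with a pending higher-priority lane.
    conflicts = [
        any(pending_mask[i] and bank_indexes[i] == bank_indexes[j]
            for i in range(j))
        for j in lanes
    ]

    # Broadcast Selection: the highest-priority pending lane is the leader.
    leader = next((i for i in lanes if pending_mask[i]), None)
    if leader is None or is_store:
        broadcast = [False] * len(pending_mask)
    else:
        broadcast = [
            bank_indexes[i] == bank_indexes[leader]
            and bank_offsets[i] == bank_offsets[leader]
            for i in lanes
        ]

    # Decision Logic and feedback to the Request Issue Unit.
    satisfied = [pending_mask[i] and (broadcast[i] or not conflicts[i])
                 for i in lanes]
    still_pending = [pending_mask[i] and not satisfied[i] for i in lanes]
    return satisfied, still_pending
```

For a gather in which lanes 0 and 1 read the same word of bank 0 and lane 2 reads a different word of the same bank, lanes 0 and 1 are served together thanks to the broadcast and only lane 2 is postponed. For a scatter with the same addresses, lanes 1 and 2 are both postponed.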

Stage 2

The second stage (in the red box in the figure above) hosts the memory banks. According to the address, incoming requests from the lanes are forwarded to the appropriate memory bank.

Input Interconnect

The Input Interconnect is an interconnection network that steers source data and/or control signals coming from a lane in the GPU-like processor to the destination bank. Because the Input Interconnect follows the Serialization Logic Unit, it only accepts one request per bank. Its structure is composed of as many Bank Steering Units as there are memory banks.

Bank Steering Unit

Each Bank Steering Unit selects, among all the requests to be processed, one that refers to the i-th memory bank and forwards it to that bank. A set of L comparators first checks whether each bank index is equal to the preloaded index i. The results are then ANDed with the mask of the requests to be processed in the current clock cycle. In the case of read operations, due to the broadcasting mechanism, the bank offsets of the incoming requests for the same bank are all the same, so the requests are equivalent and any of them can be forwarded to the memory bank. The bitmask of pending requests for the i-th memory bank is sent to the Priority Encoder, which chooses the index of the highest-priority pending request. To avoid performing incorrect operations when there is no request for the selected memory bank, the mask bit at the selected index is ANDed with the validity output of the Priority Encoder. Therefore, this output is high only if there is actually a request to be processed for the i-th memory bank.

generate

for (i = 0; i < `SM_PROCESSING_ELEMENTS; i++)

assign compare_mask[i] = input_bank_indexes[i] == BANK_ADDRESS;

endgenerate

priority_encoder_nuplus #(

.INPUT_WIDTH(`SM_PROCESSING_ELEMENTS),

.MAX_PRIORITY("LSB")

) controller (

.decode(input_mask & compare_mask), //input_mask is satisfied_mask from Input Interconnect

.encode(selected_address),

.valid(priority_encoder_valid)

);

assign output_enable = input_mask[selected_address] & priority_encoder_valid;
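The selection performed by one Bank Steering Unit can be mimicked in Python as follows. This is a behavioral sketch: the function name `bank_steering_unit` is hypothetical, and the value returned as selected lane when no request is valid (0) is an assumption about the priority encoder.

```python
def bank_steering_unit(bank_address, input_bank_indexes, input_mask):
    """Pick the lane to forward to the bank numbered bank_address.

    Returns (selected_lane, output_enable). The lowest lane index
    wins, matching a priority encoder with MAX_PRIORITY("LSB").
    """
    # L comparators against the preloaded bank index.
    compare_mask = [index == bank_address for index in input_bank_indexes]
    # Keep only the lanes that are served in this cycle.
    candidates = [m and c for m, c in zip(input_mask, compare_mask)]
    for lane, hit in enumerate(candidates):
        if hit:
            return lane, True
    # No request for this bank: the output must stay disabled.
    return 0, False


print(bank_steering_unit(1, [0, 1, 1, 2], [True, False, True, True]))
print(bank_steering_unit(3, [0, 1, 1, 2], [True, False, True, True]))
```

In the first call lane 1 targets bank 1 but is masked out, so lane 2 is selected; in the second call no lane targets bank 3 and the enable stays low.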

Banked memory

Next come the B memory banks, which provide the required storage. Each memory bank receives the bank offset, the source data and the control signals from the lane that addressed it. Each bank has a single read/write port with a byte-level write enable signal to support instructions with operand sizes smaller than a word. Furthermore, each lane controls a bit in an L-bit mask bus that is propagated through the Input Interconnect to the appropriate bank. This bit acts as a bank enable signal. In this way, some lanes can be disabled and operations can be executed on a vector smaller than L elements.
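The byte-level write enable can be illustrated with a short Python sketch. The function name `bank_write` is hypothetical, and the code assumes that bit k of the byte mask enables byte k of the entry, counting from the least significant byte; the actual byte ordering is not stated in the text.

```python
def bank_write(entry, data, byte_mask, bytes_per_entry=4):
    """Return the new entry value after a byte-masked write.

    entry     -- current content of the memory entry
    data      -- word coming from the lane (Din)
    byte_mask -- W-bit mask, bit k enables byte k (assumed ordering)
    """
    result = entry
    for byte in range(bytes_per_entry):
        if (byte_mask >> byte) & 1:
            byte_bits = 0xFF << (8 * byte)
            # Replace only the enabled byte, keep the others.
            result = (result & ~byte_bits) | (data & byte_bits)
    return result


# A byte store touches one byte, a half-word store two bytes.
print(hex(bank_write(0x11223344, 0xAABBCCDD, 0b0001)))
print(hex(bank_write(0x11223344, 0xAABBCCDD, 0b1100)))
```

A full-word store uses the mask 0b1111 and overwrites the whole entry, while a mask of 0 leaves the entry untouched.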

Stage 3

The third and final stage (in the yellow box in the figure above) propagates the data output by the memory banks to the lanes that requested it, storing each result in the corresponding register. If any conflicting requests have been serialized, the results are collected and output as a single vector.

Output Interconnect

The Output Interconnect propagates the loaded data to the lane that requested it.

Collector Unit

The Collector Unit is a set of L registers that collects the results coming from the serialized requests and outputs them as a single vector.
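A minimal Python model can show how partial results from serialized requests are merged. The class name `CollectorModel` and its method are hypothetical; the model assumes that the result vector is released when the last request of the block is flagged, as suggested by the description of the last-request signal.

```python
class CollectorModel:
    """Toy model of the L result registers of the Collector Unit."""

    def __init__(self, lanes):
        self.regs = [None] * lanes

    def collect(self, data, satisfied_mask, is_last_request):
        """Store the data of the lanes served in this cycle.

        Returns the full result vector on the last request of a
        serialized block, otherwise None (assumed behavior).
        """
        for lane, served in enumerate(satisfied_mask):
            if served:
                self.regs[lane] = data[lane]
        if is_last_request:
            return list(self.regs)
        return None


collector = CollectorModel(lanes=3)
collector.collect([10, None, 30], [True, False, True], is_last_request=False)
print(collector.collect([None, 20, None], [False, True, False], is_last_request=True))
```

The two partial results of a 2-way conflict are merged into one vector that is released only with the second, final access.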

24/4/2019

INTERFACE AND FUNCTIONALITY

Modular view with inputs and outputs (with description); FSM (difference in behavior between fully pipelined operation and n-way conflicts); parameterizable inputs

MODULES

Stage 0 (pipe) Stage 1 (stage1) Stage 2 (stage2) Stage 3 (stage3)

OPERATION EXAMPLE

The __scratchpad attribute must be added to make sure that a variable is allocated in the SPM. Define some more specific examples (e.g. conv_layer_mvect_mt with one or two cores)