Applications

Contents

- 1 Complex Product Kernel

- 2 Using OpenMP on the nu+ manycore

- 3 References

Complex Product Kernel

A kernel running the complex product has been implemented in order to achieve cross verifications, data path evaluations and various types of considerations.

Scalar Registers

In the first instance, the implemented program executed a complex product of the type (a + jb)*(c + jd), where the values of the real and imaginary parts are saved in separate variables, for a total of four integer variables (two real parts and two imaginary parts). The output result is saved in two variables (real and imaginary part). In the first implementation of the kernel, the four variables were declared in the main program. This choice did not produce any results during the kernel emulation phase, since the values of the source operands were not loaded in the scalar registers of the processor. This is due to the fact that the variables are local and so they were loaded in the stack area that is limited in size.

int main () {

int ReA=3; // real part A

int ImA=4; // imaginary part A

int ReB=5; // real part B

int ImB=6; // imaginary part B

int Re=0;

int Im=0;

Re= (ReA*ReB)-(ImA*ImB);

Im= (ReA*ImB)+(ReB*ImA);

return 0;

}To overcome this limitation, variables have been declared globally. So the source operand values have been loaded correctly in the scalar registers of the processor. In addition, the right result has been produced and written in memory.

int ReA=3;

int ImA=4;

int ReB=5;

int ImB=6;

int Re=0;

int Im=0;

int main(){

Re= (ReA*ReB)-(ImA*ImB);

Im= (ReA*ImB)+(ReB*ImA);

return 0;

}In this last implementation, the value of the program counter at the time of loading all source operands in the scalar registers was $104 (hexadecimal value). The second and last store (two stores are required: one for the real part and one for the imaginary part) occurred when the PC value was $134. So, the implemented kernel used 13 instructions ($134-$104=$30 = 48; (48/4)+1=13) from the time of loading at the time of writing all the results in memory. To try to get a decrease in the number of instructions, tests on source operands were made to understand how modifying the data path could affect code optimization. First, source and result operands have been expressed as vector of two elements (an element represents the real and another imaginary part).

int A[2]={3,4};

int B[2]={5,6};

int Ris[2];

int main(){

Ris[0]= (A[0]*B[0])-(A[1]*B[1]);

Ris[1]= (A[0]*B[1])+(B[0]*A[1]);

return 0;

}In this case, the value of the program counter at the time of loading all source operands in the scalar registers was $F4 (hexadecimal value). The second and last store occurred when the PC value was $11C, saving 6 instructions from the previous case. The reason is because, by expressing the operands as vectors, it is not necessary to calculate the effective address of all the elements each time (operation taking 3 instructions), but only by means of the offset (only 1 instruction needed). So it is clear in this case the advantage of expressing the values of the operands in vector form rather than scaling form.

Vector Registers

In order to perform further tests, source operands have been declared as vec16i32 so they use vector registers rather than scalar registers. In the first instance, the implemented program executed a complex product of vectors where the values of the real and imaginary parts are saved in separate vectors, for a total of four vectors with 16 elements (two vectors for the real parts and two for the imaginary parts). The output result is saved in two vectors (real and imaginary parts).

#include <stdint.h>

#include <string.h>

vec16i32 ReA = {3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3};

vec16i32 ImA = {4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4};

vec16i32 ReB = {5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5};

vec16i32 ImB = {6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6};

vec16i32 ReR;

vec16i32 ImR;

int main(){

ReR= (ReA*ReB)-(ImA*ImB);

ImR= (ReA*ImB)+(ReB*ImA);

return 0;

}In this case, the value of the program counter at the time of loading all source operands in the scalar registers was $104 (hexadecimal value). The second and last store occurred when the PC value was $134.

Then, the source operands have been expressed as two matrix of two vectors of 16 elements (a vector for the real parts e a vector for the imaginary parts). The output result is saved in a matrix of 2 vectors.

#include <stdint.h>

#include <string.h>

vec16i32 A[2] = {{3,3,3,3,3,3,3,3,3,3,3,3,3,3,3,3},{4,4,4,4,4,4,4,4,4,4,4,4,4,4,4,4}};

vec16i32 B[2] = {{5,5,5,5,5,5,5,5,5,5,5,5,5,5,5,5},{6,6,6,6,6,6,6,6,6,6,6,6,6,6,6,6}};

vec16i32 Ris[2];

int main(){

Ris[0]= (A[0]*B[0])-(A[1]*B[1]);

Ris[1]= (A[0]*B[1])+(B[0]*A[1]);

return 0;

}In this last case, the value of the program counter at the time of loading all source operands in the scalar registers was $F4 (hexadecimal value). The second and last store occurred when the PC value was $11C, saving 6 instructions from the previous case. This time, you get the same advantages as in the scalar registers, thanks to the saving of instructions obtained calculating the address of the operands through the offset. Therefore it is preferable to express the operands in matrix form, and not as separate vectors.

Threads

Ultimately, matrices of 16 vectors have been used in order to perform a larger number of operations. In this way, you could also assign to threads a subset of operations parallelizing the execution. The two versions realized are composed of 4 matrices of 16 vectors (two matrices contain the real parts and two matrices contain the imaginary parts) and 2 matrices of 32 vectors (the first 16 vectors contain the real parts of a number and the last 16 vectors contain imaginary parts).

#include <stdint.h>

#include <string.h>

vec16i32 Re_A[16] = {

{64,90,89,87,83,80,75,70,64,57,50,43,36,25,18,9},

{64,87,75,57,36,9,-18,-43,-64,-80,-89,-90,-83,-70,-50,-25},

{64,80,50,9,-36,-70,-89,-87,-64,-25,18,57,83,90,75,43},

{64,70,18,-43,-83,-87,-50,9,64,90,75,25,-36,-80,-89,-57},

{64,57,-18,-80,-83,-25,50,90,64,-9,-75,-87,-36,43,89,70},

{64,43,-50,-90,-36,57,89,25,-64,-87,-18,70,83,9,-75,-80},

{64,25,-75,-70,36,90,18,-80,-64,43,89,9,-83,-57,50,87},

{64,9,-89,-25,83,43,-75,-57,64,70,-50,-80,36,87,-18,-90},

{64,-9,-89,25,83,-43,-75,57,64,-70,-50,80,36,-87,-18,90},

{64,-25,-75,70,36,-90,18,80,-64,-43,89,-9,-83,57,50,-87},

{64,-43,-50,90,-36,-57,89,-25,-64,87,-18,-70,83,-9,-75,80},

{64,-57,-18,80,-83,25,50,-90,64,9,-75,87,-36,-43,89,-70},

{64,-70,18,43,-83,87,-50,-9,64,-90,75,-25,-36,80,-89,57},

{64,-80,50,-9,-36,70,-89,87,-64,25,18,-57,83,-90,75,-43},

{64,-87,75,-57,36,-9,-18,43,-64,80,-89,90,-83,70,-50,25},

{64,-90,89,-87,83,-80,75,-70,64,-57,50,-43,36,-25,18,-9}

};

vec16i32 Im_A[16] = {

{64,64,64,64,64,64,64,64,64,64,64,64,64,64,64,64},

{90,87,80,70,57,43,25,9,-9,-25,-43,-57,-70,-80,-87,-90},

{89,75,50,18,-18,-50,-75,-89,-89,-75,-50,-18,18,50,75,89},

{87,57,9,-43,-80,-90,-70,-25,25,70,90,80,43,-9,-57,-87},

{83,36,-36,-83,-83,-36,36,83,83,36,-36,-83,-83,-36,36,83},

{80,9,-70,-87,-25,57,90,43,-43,-90,-57,25,87,70,-9,-80},

{75,-18,-89,-50,50,89,18,-75,-75,18,89,50,-50,-89,-18,75},

{70,-43,-87,9,90,25,-80,-57,57,80,-25,-90,-9,87,43,-70},

{64,-64,-64,64,64,-64,-64,64,64,-64,-64,64,64,-64,-64,64},

{57,-80,-25,90,-9,-87,43,70,-70,-43,87,9,-90,25,80,-57},

{50,-89,18,75,-75,-18,89,-50,-50,89,-18,-75,75,18,-89,50},

{43,-90,57,25,-87,70,9,-80,80,-9,-70,87,-25,-57,90,-43},

{36,-83,83,-36,-36,83,-83,36,36,-83,83,-36,-36,83,-83,36},

{25,-70,90,-80,43,9,-57,87,-87,57,-9,-43,80,-90,70,-25},

{18,-50,75,-89,89,-75,50,-18,-18,50,-75,89,-89,75,-50,18},

{9,-25,43,-57,70,-80,87,-90,90,-87,80,-70,57,-43,25,-9}

};

vec16i32 Re_B[16] = {

{64,64,64,64,64,64,64,64,64,64,64,64,64,64,64,64},

{90,87,80,70,57,43,25,9,-9,-25,-43,-57,-70,-80,-87,-90},

{89,75,50,18,-18,-50,-75,-89,-89,-75,-50,-18,18,50,75,89},

{87,57,9,-43,-80,-90,-70,-25,25,70,90,80,43,-9,-57,-87},

{83,36,-36,-83,-83,-36,36,83,83,36,-36,-83,-83,-36,36,83},

{80,9,-70,-87,-25,57,90,43,-43,-90,-57,25,87,70,-9,-80},

{75,-18,-89,-50,50,89,18,-75,-75,18,89,50,-50,-89,-18,75},

{70,-43,-87,9,90,25,-80,-57,57,80,-25,-90,-9,87,43,-70},

{64,-64,-64,64,64,-64,-64,64,64,-64,-64,64,64,-64,-64,64},

{57,-80,-25,90,-9,-87,43,70,-70,-43,87,9,-90,25,80,-57},

{50,-89,18,75,-75,-18,89,-50,-50,89,-18,-75,75,18,-89,50},

{43,-90,57,25,-87,70,9,-80,80,-9,-70,87,-25,-57,90,-43},

{36,-83,83,-36,-36,83,-83,36,36,-83,83,-36,-36,83,-83,36},

{25,-70,90,-80,43,9,-57,87,-87,57,-9,-43,80,-90,70,-25},

{18,-50,75,-89,89,-75,50,-18,-18,50,-75,89,-89,75,-50,18},

{9,-25,43,-57,70,-80,87,-90,90,-87,80,-70,57,-43,25,-9}

};

vec16i32 Im_B[16] = {

{64,90,89,87,83,80,75,70,64,57,50,43,36,25,18,9},

{64,87,75,57,36,9,-18,-43,-64,-80,-89,-90,-83,-70,-50,-25},

{64,80,50,9,-36,-70,-89,-87,-64,-25,18,57,83,90,75,43},

{64,70,18,-43,-83,-87,-50,9,64,90,75,25,-36,-80,-89,-57},

{64,57,-18,-80,-83,-25,50,90,64,-9,-75,-87,-36,43,89,70},

{64,43,-50,-90,-36,57,89,25,-64,-87,-18,70,83,9,-75,-80},

{64,25,-75,-70,36,90,18,-80,-64,43,89,9,-83,-57,50,87},

{64,9,-89,-25,83,43,-75,-57,64,70,-50,-80,36,87,-18,-90},

{64,-9,-89,25,83,-43,-75,57,64,-70,-50,80,36,-87,-18,90},

{64,-25,-75,70,36,-90,18,80,-64,-43,89,-9,-83,57,50,-87},

{64,-43,-50,90,-36,-57,89,-25,-64,87,-18,-70,83,-9,-75,80},

{64,-57,-18,80,-83,25,50,-90,64,9,-75,87,-36,-43,89,-70},

{64,-70,18,43,-83,87,-50,-9,64,-90,75,-25,-36,80,-89,57},

{64,-80,50,-9,-36,70,-89,87,-64,25,18,-57,83,-90,75,-43},

{64,-87,75,-57,36,-9,-18,43,-64,80,-89,90,-83,70,-50,25},

{64,-90,89,-87,83,-80,75,-70,64,-57,50,-43,36,-25,18,-9}

};

vec16i32 Re[16];

vec16i32 Im[16];

int main(){

static int N=16;

for (int i=0; i<N; i++){

Re[i]=Re_A[i]*Re_B[i]-Im_A[i]*Im_B[i];

Im[i]=Re_A[i]*Im_B[i]+Im_A[i]*Re_B[i];

}

return 0;

}#include <stdint.h>

#include <string.h>

vec16i32 A[32] = {

// Re_A

{64,90,89,87,83,80,75,70,64,57,50,43,36,25,18,9},

{64,87,75,57,36,9,-18,-43,-64,-80,-89,-90,-83,-70,-50,-25},

{64,80,50,9,-36,-70,-89,-87,-64,-25,18,57,83,90,75,43},

{64,70,18,-43,-83,-87,-50,9,64,90,75,25,-36,-80,-89,-57},

{64,57,-18,-80,-83,-25,50,90,64,-9,-75,-87,-36,43,89,70},

{64,43,-50,-90,-36,57,89,25,-64,-87,-18,70,83,9,-75,-80},

{64,25,-75,-70,36,90,18,-80,-64,43,89,9,-83,-57,50,87},

{64,9,-89,-25,83,43,-75,-57,64,70,-50,-80,36,87,-18,-90},

{64,-9,-89,25,83,-43,-75,57,64,-70,-50,80,36,-87,-18,90},

{64,-25,-75,70,36,-90,18,80,-64,-43,89,-9,-83,57,50,-87},

{64,-43,-50,90,-36,-57,89,-25,-64,87,-18,-70,83,-9,-75,80},

{64,-57,-18,80,-83,25,50,-90,64,9,-75,87,-36,-43,89,-70},

{64,-70,18,43,-83,87,-50,-9,64,-90,75,-25,-36,80,-89,57},

{64,-80,50,-9,-36,70,-89,87,-64,25,18,-57,83,-90,75,-43},

{64,-87,75,-57,36,-9,-18,43,-64,80,-89,90,-83,70,-50,25},

{64,-90,89,-87,83,-80,75,-70,64,-57,50,-43,36,-25,18,-9},

// Im_A

{64,64,64,64,64,64,64,64,64,64,64,64,64,64,64,64},

{90,87,80,70,57,43,25,9,-9,-25,-43,-57,-70,-80,-87,-90},

{89,75,50,18,-18,-50,-75,-89,-89,-75,-50,-18,18,50,75,89},

{87,57,9,-43,-80,-90,-70,-25,25,70,90,80,43,-9,-57,-87},

{83,36,-36,-83,-83,-36,36,83,83,36,-36,-83,-83,-36,36,83},

{80,9,-70,-87,-25,57,90,43,-43,-90,-57,25,87,70,-9,-80},

{75,-18,-89,-50,50,89,18,-75,-75,18,89,50,-50,-89,-18,75},

{70,-43,-87,9,90,25,-80,-57,57,80,-25,-90,-9,87,43,-70},

{64,-64,-64,64,64,-64,-64,64,64,-64,-64,64,64,-64,-64,64},

{57,-80,-25,90,-9,-87,43,70,-70,-43,87,9,-90,25,80,-57},

{50,-89,18,75,-75,-18,89,-50,-50,89,-18,-75,75,18,-89,50},

{43,-90,57,25,-87,70,9,-80,80,-9,-70,87,-25,-57,90,-43},

{36,-83,83,-36,-36,83,-83,36,36,-83,83,-36,-36,83,-83,36},

{25,-70,90,-80,43,9,-57,87,-87,57,-9,-43,80,-90,70,-25},

{18,-50,75,-89,89,-75,50,-18,-18,50,-75,89,-89,75,-50,18},

{9,-25,43,-57,70,-80,87,-90,90,-87,80,-70,57,-43,25,-9}

};

vec16i32 B[32] = {

//Re_B

{64,64,64,64,64,64,64,64,64,64,64,64,64,64,64,64},

{90,87,80,70,57,43,25,9,-9,-25,-43,-57,-70,-80,-87,-90},

{89,75,50,18,-18,-50,-75,-89,-89,-75,-50,-18,18,50,75,89},

{87,57,9,-43,-80,-90,-70,-25,25,70,90,80,43,-9,-57,-87},

{83,36,-36,-83,-83,-36,36,83,83,36,-36,-83,-83,-36,36,83},

{80,9,-70,-87,-25,57,90,43,-43,-90,-57,25,87,70,-9,-80},

{75,-18,-89,-50,50,89,18,-75,-75,18,89,50,-50,-89,-18,75},

{70,-43,-87,9,90,25,-80,-57,57,80,-25,-90,-9,87,43,-70},

{64,-64,-64,64,64,-64,-64,64,64,-64,-64,64,64,-64,-64,64},

{57,-80,-25,90,-9,-87,43,70,-70,-43,87,9,-90,25,80,-57},

{50,-89,18,75,-75,-18,89,-50,-50,89,-18,-75,75,18,-89,50},

{43,-90,57,25,-87,70,9,-80,80,-9,-70,87,-25,-57,90,-43},

{36,-83,83,-36,-36,83,-83,36,36,-83,83,-36,-36,83,-83,36},

{25,-70,90,-80,43,9,-57,87,-87,57,-9,-43,80,-90,70,-25},

{18,-50,75,-89,89,-75,50,-18,-18,50,-75,89,-89,75,-50,18},

{9,-25,43,-57,70,-80,87,-90,90,-87,80,-70,57,-43,25,-9},

// Im_B

{64,90,89,87,83,80,75,70,64,57,50,43,36,25,18,9},

{64,87,75,57,36,9,-18,-43,-64,-80,-89,-90,-83,-70,-50,-25},

{64,80,50,9,-36,-70,-89,-87,-64,-25,18,57,83,90,75,43},

{64,70,18,-43,-83,-87,-50,9,64,90,75,25,-36,-80,-89,-57},

{64,57,-18,-80,-83,-25,50,90,64,-9,-75,-87,-36,43,89,70},

{64,43,-50,-90,-36,57,89,25,-64,-87,-18,70,83,9,-75,-80},

{64,25,-75,-70,36,90,18,-80,-64,43,89,9,-83,-57,50,87},

{64,9,-89,-25,83,43,-75,-57,64,70,-50,-80,36,87,-18,-90},

{64,-9,-89,25,83,-43,-75,57,64,-70,-50,80,36,-87,-18,90},

{64,-25,-75,70,36,-90,18,80,-64,-43,89,-9,-83,57,50,-87},

{64,-43,-50,90,-36,-57,89,-25,-64,87,-18,-70,83,-9,-75,80},

{64,-57,-18,80,-83,25,50,-90,64,9,-75,87,-36,-43,89,-70},

{64,-70,18,43,-83,87,-50,-9,64,-90,75,-25,-36,80,-89,57},

{64,-80,50,-9,-36,70,-89,87,-64,25,18,-57,83,-90,75,-43},

{64,-87,75,-57,36,-9,-18,43,-64,80,-89,90,-83,70,-50,25},

{64,-90,89,-87,83,-80,75,-70,64,-57,50,-43,36,-25,18,-9}

};

vec16i32 Ris[32];

int main(){

static int N=16;

for (int i=0; i<N; i++){

Ris[i]=A[i]*B[i]-A[i+N]*B[i+N];

Ris[i+N]=A[i]*B[i+N]+A[i+N]*B[i];

}

return 0;

}In this case, the gain in terms of instructions saved due to the calculation of the addresses by offset is not visible because there is an addition of instructions due to the calculation of the indices. Therefore, in the latter case, it is convenient to write the result of source operands as 4 separate matrices instead of as two matrices of double size. The versions made were performed both with a single thread, two threads and four threads. In both versions, the number of instructions in each thread decreases proportionally with the total number of threads (for example, with two threads the instructions halve about).

int main(){

static int N=16;

int threadId = __builtin_nuplus_read_control_reg(2);

int numThread = 4;

for (int i=0; i<N/numThread; i++){

Re[((N/numThread)*threadId)+i]=Re_A[((N/numThread)*threadId)+i]*Re_B[((N/numThread)*threadId)+i]-Im_A[((N/numThread)*threadId)+i]*Im_B[((N/numThread)*threadId)+i];

Im[((N/numThread)*threadId)+i]=Re_A[((N/numThread)*threadId)+i]*Im_B[((N/numThread)*threadId)+i]+Im_A[((N/numThread)*threadId)+i]*Re_B[((N/numThread)*threadId)+i];

}

return 0;

}int main(){

static int N=16;

int threadId = __builtin_nuplus_read_control_reg(2);

int numThread = 4;

for (int i=0; i<N/numThread; i++){

Ris[((N/numThread)*threadId)+i]=A[((N/numThread)*threadId)+i]*B[((N/numThread)*threadId)+i]-A[((N/numThread)*threadId)+i+N]*B[((N/numThread)*threadId)+i+N];

Ris[((N/numThread)*threadId)+i+N]=A[((N/numThread)*threadId)+i]*B[((N/numThread)*threadId)+i+N]+A[((N/numThread)*threadId)+i+N]*B[((N/numThread)*threadId)+i];

}

return 0;

}As for the cross verification step, in all the tested versions the correct result was written in memory.

Using OpenMP on the nu+ manycore

Introduction

The openMP specification defines a portable parallel programming model, as reported in [1].

This model is supported by many compilers, like GNU-gcc or LLVM/clang. OpenMP directives, runtime-library functions and environment variables permit to specify how a compiler must act to divide the workload across many threads running in parallel, obeying “Single Program Multiple Data” paradigm without requiring any in-deep knowledge about the system architecture.

Execution model

OpenMP is based on fork-join execution model, with many threads running a task, defined, implicitly or explicitly, using openMP directives. An openMP program begins its execution as single thread, called the initial-thread, on an host-device. Many other target-device may be used to run a parallel region. The execution model is host-centric: the host-device explicitly uploads data and programs to target-device.

A parallel region is, essentially, a sequence of instructions running in parallel at the same time on many processing elements. Typically, a parallel region is defined using the “parallel” directive, as shown in the following examople:

double a = 0, b = 0;

int n_int = 0;

int nthreads = 0;

func_ptr func = NULL;

double h = (b - a) / n_int;

int i;

double partial = 0;

...

...

#pragma omp parallel for\

default(none) \

shared(a, h, n_int) \

private(i) \

reduction(+:partial)

{

for (i = 0; i < n_int; i++)

partial += func(a + float(i * h));

}After that, the initial-thread meets a “parallel” directive, creating a team of threads, becoming its master-thread, as shown in figure [fig:modello-di-esecuzione].

The code in the parallel section will be executed by each thread in the team. At the end of a parallel region there is an implicitly defined sync-barrier. The purpose of this sync-barrier is to suspend a thread when it completes its own task, until all threads have done their work. Only the master-thread will be awakened by the suspension, so it will execute a possible sequential region located immediately after the parallel region.

Parallel regions may be nested each-others. If nested parallel region isn't allowed, when, in a parallel region, a thread meet another parallel directive, it will create a new thread-team composed by itself and also become the master-thread.

Memory model

OpenMP supports a relaxed consistency memory model, like the one described in [2]. Each thread may have a its own “temporary view” of the memory. This concept is used to model hardware register and the cache-subsystem, which lie between cores and memory. Each thread also have a “thread-private” memory, an area for its exclusive use.

Variables used in parallel regions must have an “original counterpart”, defined outside the parallel section, as shown in figure:

The “view” that each thread have on each of those variables may be:

- shared: all references to a specific variable inside the parallel section will become a reference to the original counterpart;

- private: a new copy, with same name, same type and same size, will be created on the thread-private stack. The relationship between values of each copy depends on the specific private clause used.

- private variables are not initialized, so they start with random values like any other local automatic variable (and they are often implemented using automatic variables on the stack of each thread);

- firstprivate specifies that each thread should have its own instance of a variable, and that the variable should be initialized with the value of original counterpart, because it exists before the parallel construct;

- lastprivate clause specifies that the enclosing context's version of the variable is set equal to the private version of whichever thread executes the final iteration (for-loop construct) or last section (#pragma omp sections directive).

With nested parallel regions, a variable marked as private in the outer region may become shared in the inner region. Access to variables may be implemented with more than one load/store operation and there is no need to guarantee atomicity. This impose the use of explicit synchronization between access performed by different threads to the same variable.

Traditional implementation

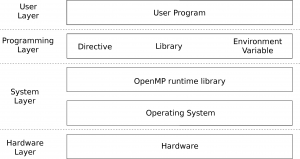

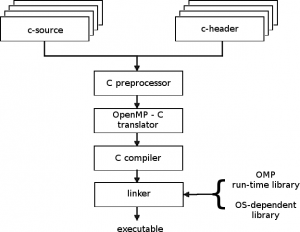

In a shared-memory general-purpose system, as well as in HPC system, openMP implementation is based on functionalities provided by the operating system and thread library, as shown in figure [fig:stack-OS]. In this kind of systems, OS and thread library provide thread handling functions and workload partitioning functions. Usually an openMP program is built as shown in figure [fig:processo-di-compilazione]: after the pre-compilation step, the code pass through a “translation” step, in which all openMP directives are substituted with explicit call to thread library functions, provided by OS.

As reported in[3][4], all codes in a parallel region will be encapsulated in a subroutine and the “parallel” directive translated as explicit call to thread creation function. In GNU-gcc, for example, using libgomp runtime library, the parallel directive will be translated in an explicit call to

void GOMP_parallel_start(void (*fn)(void*), void* data, int num_threads)which, in a GNU-Linux system, explicitly call functions from the pthread library. Parallel regions ends with a call to

void GOMP_parallel_end()

which implements sync-barriers as discussed above.

No operating system

Usually, in a system with OS and thread library, openMP directives will be translated with explicit call to OS thread library functions, as discussed above. nu+ hasn't neither OS, nor thread library, so the translation must be done "manually", as well as data and program uploading to target-device. Taking inspiration from [5], we will discuss the translation of some of the most frequently used directives, using practical examples.

As we will see, the translation procedure is very simple and easily automated. The following examples show how an openMP program can be translated in an openMP-like fashion with only a little effort.

Example: scalar product

Consider the following piece of code:

int i;

sequential_part();

#pragma omp parallel for \

default(none) \

private(i) \

shared (N, Re_A, Re_B, Im_A, Im_B, Re_R, Im_R)

for (i = 0; i < N; i++) {

Re_R[i] = Re_A[i]*Re_B[i] - Im_A[i]*Im_B[i];

Im_R[i] = Re_A[i]*Im_B[i] + Im_A[i]*Re_B[i];

}

another_sequential_part();First of all, using __omp_get_thread_id(), we will be able to distinguish between threads, so the sequential parts can be enclosed in an if-then statement. All functions with the "__omp" prefix are written ad-hoc, to simulate the presence of the openMP library. You can find the declaration of all these functions in the “__omp.hpp” header file attached to this article.

Each line in the parallel section will be enclosed in a subroutine like void parallel_region_X(void*) where X is the serial identification number of the parallel section. If we have two parallel sections, the first one will be enclosed in parallel_region_0(), then the second one will be enclosed in parallel_region_1().

Let's consider variables used in the parallel section. All shared variables will be passed to each thread through their memory address. For example typedef struct {...} parallel_region_X_args_t will contain the memory-address for each shared variable. In addition, the structure must contain the value to be assigned to the firstprivate variables. Variables marked as private, instead, will be defined internally to the subroutine that encapsulates the parallel region.

typedef struct {

int *N;

int *Re_A;

int *Re_B;

int *Im_A;

int *Im_B;

int *Re_R;

int *Im_R;

__omp_barrier_t start_barrier;

__omp_barrier_t end_barrier;

} parallel_region_0_args_t;

The parallel_region_0_args_t variable must be globally defined, so its life will span the entire program life-time, and it must be initialized. Ignore the two __omp_barrier_t, at this moment.

Parallel region

Let's look at the parallel section, enclosed in the parallel_region_0() subroutine. It starts with the declaration of i, the variable marked as private in the parallel section.

Please, note the usage of _args pointer to access to shared variables through their memory-address, stored in parallel_region_0_args_t structure. If shared variables are defined as global variables there is no need to pass them to each thread through the struct. Moreover there is no need to put an "_args->" prefix in front of them.

The first call to __omp_barrier() is used to stop all other threads until the master-thread reaches the parallel region. __omp_for_start() and __omp_for_end() are used to partition the outer for loop to each thread. Before the end of the parallel section there is another call to __omp_barrier(). Threads need to sync each-other and to wait for everyone to finish running before they can continue.

void parallel_region_0(void *args) {

parallel_region_0_args_t *_args = (parallel_region_0_args_t*) args;

int i;

__omp_barrier(&_args->start_barrier);

for (i = __omp_for_start(*(_args->N)); i < __omp_for_end(*(_args->N)); i++) {

_args->Re_R[i] = _args->Re_A[i] * _args->Re_B[i] - _args->Im_A[i] * _args->Im_B[i];

_args->Im_R[i] = _args->Re_A[i] * _args->Im_B[i] + _args->Im_A[i] * _args->Re_B[i];

}

__omp_barrier(&_args->end_barrier);

}Workload partitioning

In most cases, the parallel directive is used on a for loop. Consider a for loop, like for (i = 0; i < N; i++). As shown in the previous sections, this loop can be partitioned using __omp_for_start() and __omp_for_end() functions.

The first one is very simple:

int __omp_for_start(int upperbound) {

int id = __omp_get_thread_id();

int threads = __omp_get_num_threads();

return (id == 0 ? 0 : id*(upperbound)/threads);

}It takes thread id and number of threads used in the parallel region and

- if the id is 0, then the starting index is 0;

- otherwise, the starting index is computed as <math display="inline">\frac{id\cdot upperbound}{numthreads}</math>, where “upperbound” is the last index.

For example, consider four threads and a for loop ranging from 0 to 100. Threads with id=2 will start with index <math display="inline">\frac{2*100}{4}=50</math>.

The __omp_for_end() uses a similar approach:

int __omp_for_end(int upperbound) {

int id = __omp_get_thread_id();

int threads = __omp_get_num_threads();

return (id == threads-1 ? upperbound : (id+1)*(upperbound)/threads);

}It takes thread id and number of thread used in the parallel region and

- if the is equal to number of thread minus one, then the ending index is equal to upperbound

- else, the ending index is computed as <math display="inline">\frac{(id+1)\cdot upperbound}{numthreads}</math>.

For example, consider four threads and a for loop ranging from 0 to 100. Threads with id=2 will end with index <math display="inline">\frac{3*100}{4}=75</math>.

This solution is very simple and balances workload well enough, but it only works with loop that looks like for (i = 0; i < N; i++).

Main function

Let's look at the main function now. It's easy to understand, even without any word. Each line before the beginning of the parallel section, those which must be executed by master-thread only, are enclosed in an if-then statement. Same thing with the lines immediately after the end of the parallel section.

int main(){

// master-thread only code-section

if (__omp_get_thread_id() == __OMP_MASTER_THREAD) {

}

// begin of parallel region 0

parallel_region_0((void*)¶llel_region_0_args);

// end of parallel region 0

// master-thread only code-section

if (__omp_get_thread_id() == __OMP_MASTER_THREAD) {

}

return 0;

}Example: matrix product

Consider the following piece of code:

int i, j, k, tmp;

#pragma omp parallel \

default(none) \

private(i, j, k, tmp) \

shared (A, B, C, rows, cols)

{

#pragma omp parallel for \

private(i, j, k, tmp) \

shared (A, B, C, rows, cols)

for (i=0; i<rows; i++)

for (j=0; j<cols; j++) {

tmp = 0;

for (k=0; k<cols; k++)

tmp += A[i][k]*B[k][j];

C[i][j] = tmp;

}

}

If shared variables are defined as global variables there is no need to pass them to each thread through the struct defined above. This time we'll take the second solution, so we define the following struct.

typedef struct {

__omp_barrier_t start_barrier;

__omp_barrier_t end_barrier;

} parallel_region_0_args_t;This solution has a lesser impact on memory usage. As in the previous case, the parallel_region_0_args_t variable must be globally defined, so its life will span the entire program life-time, and initialized.

Parallel region

While the main function remains practically the same as the one discussed at the paragraph [subsec:Main-function], the use of shared variables defined as global to the program has a significant impact on the translation of the parallel region, as shown in the following code.

void parallel_region_0(void *args) {

parallel_region_0_args_t *_args = (parallel_region_0_args_t*) args;

int i, j, k, tmp;

__omp_barrier(&_args->start_barrier);

for (i=__omp_for_start(rows); i<__omp_for_end(rows); i++)

for (j=0; j<cols; j++) {

tmp = 0;

for (k=0; k<cols; k++)

tmp += A[i][k]*B[k][j];

C[i][j] = tmp;

}

__omp_barrier(&_args->end_barrier);

}If shared variables are defined as global, there is no need to pass them to each thread through the struct. Also there is no need to put an "_args->" prefix in front of them.

Example: integral calculation

In this example we will show how to translate an openMP program that uses the reduction clauses. Consider the following piece of code:

int i;

float tmp = 0;

#pragma omp parallel \

default(none) \

shared(a, b, h, n_int, func) \

private (i) \

firstprivate(tmp) \

reduction(+:partial)

{

#pragma omp for private(i)

for (i = 0; i < (int)n_int; i++)

tmp += func(a + float(i * h));

partial = tmp * h;

}As in the previous examples, shared variables are translated as globally defined variables.

float a = 0, b = 3;

int n_int = 1000;

float h = (b - a) / n_int;The tmp variable is a firstprivate variable, so, in the parallel_region_0_args_t there is a variable with same name, time and size. The reduction clause acts on the "partial" variable. In our implementation we will use an array with the same name and the same type of variable, used by each one of the threads to save the result of its calculation in a reserved locationA possible optimization, which would reduce the delay introduced by the memory coherence protocol, is to put the reduction-array in the scratchpad-memory. It will be necessary, however, to be careful of the precise hardware structure of the scratchpad-memory.. The master-thread will use this array to make the reduction. From now, we call this array "reduction array".

float partial = 0;

typedef struct {

float tmp;

float partial[__OMP_NUM_THREADS];

__omp_barrier_t start_barrier;

__omp_barrier_t end_barrier;

} parallel_region_0_args_t;The parallel_region_0_args_t variable must be globally defined, so its life will span the entire program life-time, and it must be initialized. Ignore the two __omp_barrier_t, at this moment. The tmp and partial variables must be initialized with the value of their original counterparts.

Parallel region

There is a local variable called tmp, initialized with the value passed to the function through the parallel_region_0_args_t struct. The __omp_for_start() and __omp_for_end() are used to partition the for loop to each thread. To assign a value to the thread's reserved location in the reduction array, each thread must use the __omp_assign_T_reduct, where T is the type of the variable, so __omp_assign_float_reduct() can be used to assign a float, __omp_assign_int_reduct() can be used to assign an integer, and so on. Near the end of the parallel section there is another call to __omp_barrier(). Threads need to sync each-other and wait for everyone to finish running before they can continue. After the sync-barrier there is a call to __omp_float_reduct_plus() function. The operation specified inside this function will be executed only by the master-thread, to make the reduction.

Impact of memory-barrier implementation

As discussed in [6], sync-barriers are implemented totally in hardware, so they are totally transparent to software. The basic concept is really simple: the host-controller sets up a barrier, indicating the amount of threads that will use that barrier. When a thread meets the barrier, it sends a message to a sync-barrier manager, which will suspend the thread. The message travels on the NOC into a dedicated virtual-channel and contains the sync-barrier identification number. Each time the sync-barrier manager receives a message from a thread, it decrements the value of a counter. When the value reaches zero, the barrier manager sends a message to awake the threads suspended.

The manager can handle the configuration of a barrier and its use in a decoupled way, handling the situation in which it receives a message for a barrier not yet configured. The number of sync-barrier that can be handled is customizable.

This implementation of sync-barrier is very well suited to an openMP implementation, allowing to synchronize threads on the same tile, or different tiles. In addition, the transparency with which the mechanism can be used via software makes it very usable and very well suited to the openMP philosophy.

One of the few limitations of such implementation is not being able to reuse any barrier and not being able to configure barrier through a target-device, just because the configuration is performed, in this moment, only through the host-device.

References

- ↑ "OpenMP Specification"

- ↑ Daniel J. Sorin, Mark D. Hill, David A. Wood, "A Primer on Memory Consistency and Cache Coherence"

- ↑ "GNU libgomp"

- ↑ "LLVM/Clang OpenMP Reference"

- ↑ Woo-Chul Jeun, Soonhoi Ha, "Effective OpenMP Implementation and Translation For Multiprocessor System-On-Chip without Using OS"

- ↑ Articolo Daniele