The NaplesPU Hardware architecture

TODO: add a reference to the single-core configuration

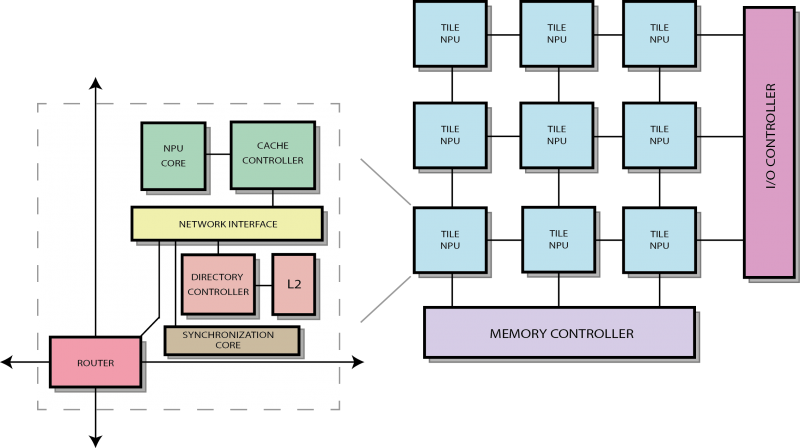

The nu+ many-core is a modular and deeply customizable system based on a regular mesh network-on-chip of configurable tiles. It is designed from the ground up as an extensible, parametric platform for exploring advanced architectural solutions. nu+ was developed in the framework of the MANGO FETHPC project, with the main objective of enabling resource-efficient HPC based on special-purpose customized hardware. This led to a modular design and an instruction layout that leaves enough freedom to extend both the standard instruction set and the baseline nu+ hardware design, discussed below. The project aims to provide a fully customizable, easy-to-extend many-core system suitable for exploring advanced software and hardware solutions for tomorrow's systems.

Our aim is to build an application-driven architecture that achieves the best hardware/software configuration for any data-parallel kernel. Specialized data-parallel accelerators are known to be more efficient than general-purpose processors for code with significant amounts of regular data-level parallelism (DLP). However, every parallel kernel has its own ideal configuration.

Every nu+ tile has the same basic components. Each tile provides a configurable, GPU-like, open-source soft core meant to be used as a configurable FPGA overlay. This HPC-oriented accelerator merges the SIMT paradigm with the vector processor model. In addition, each tile has a Cache Controller and a Directory Controller, which handle data coherence between cores in different tiles.

Users can set a large number of design parameters to suit their needs, such as:

- NoC topology and number of tiles.

- Number of threads per core. Each thread has its own PC, so one core can execute as many programs as it has threads.

- Number of hardware lanes per thread. Each thread can perform vector operations, with each vector element processed by one hardware lane.

- Register file size (scalar and vector).

- L1 and L2 cache size and associativity (number of ways).
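As a rough illustration of how these knobs relate to one another, the sketch below collects them into a single Python record. All field names and default values are hypothetical and chosen only for illustration; they are not the identifiers or defaults used in the nu+ sources.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NuPlusConfig:
    """Hypothetical bundle of the user-tunable parameters listed above."""
    mesh_cols: int = 2         # NoC width in tiles (assumed value)
    mesh_rows: int = 2         # NoC height in tiles (assumed value)
    threads_per_core: int = 8  # each thread has its own PC (assumed value)
    hw_lanes: int = 16         # hardware lanes available to each thread
    scalar_regs: int = 32      # scalar register file entries (assumed value)
    vector_regs: int = 32      # vector register file entries (assumed value)
    l1_sets: int = 32          # assumed value
    l1_ways: int = 4           # assumed value
    l2_sets: int = 128         # assumed value
    l2_ways: int = 8           # assumed value

    def __post_init__(self):
        # Every parameter is a count, so it must be at least 1.
        for name, value in vars(self).items():
            if value < 1:
                raise ValueError(f"{name} must be >= 1, got {value}")

    @property
    def tile_count(self) -> int:
        """Number of tiles in a rectangular mesh."""
        return self.mesh_cols * self.mesh_rows

    def vector_regfile_bits_per_thread(self, word_bits: int = 32) -> int:
        """Storage needed by one thread's vector register file."""
        return self.vector_regs * self.hw_lanes * word_bits
```

For example, `NuPlusConfig(mesh_cols=4, mesh_rows=2).tile_count` evaluates to 8. A record like this makes it easy to see how quickly storage grows when the lane count or register count is raised.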

The main hardware sections are listed below. Each of them covers an important aspect of the hardware.

Hardware features

The system is based on a 2D mesh of heterogeneous tiles connected by a network-on-chip (NoC, described in the Network architecture section). The NoC routers are tightly coupled with network interface modules that provide packet-based communication over four virtual channels. Each router is a two-stage look-ahead design implementing wormhole, flit-based communication. The networking infrastructure supports both intra-tile and inter-tile communication. One virtual channel is dedicated to service message flows; in particular, the manycore system supports a distributed synchronization mechanism based on hardware barriers (see the Synchronization section). Importantly, because the tiles rely on simple valid/ready interfaces, any processing unit can be integrated in each tile.
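To make the wormhole, flit-based scheme more concrete, here is a minimal Python sketch of how a network interface might split a packet into flits. The flit size, the `Flit` fields, and the choice to carry the destination only in the head flit are assumptions made for illustration; the actual nu+ flit format is described in the Network architecture section.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple

NUM_VIRTUAL_CHANNELS = 4  # from the text: four virtual channels


class FlitType(Enum):
    HEAD = "head"
    BODY = "body"
    TAIL = "tail"
    HEAD_TAIL = "head_tail"  # packet that fits in a single flit


@dataclass(frozen=True)
class Flit:
    kind: FlitType
    vc: int
    dest: Optional[Tuple[int, int]]  # (x, y) tile; set on the head flit only
    data: bytes


def packetize(payload: bytes, dest: Tuple[int, int], vc: int,
              flit_bytes: int = 8) -> List[Flit]:
    """Split a payload into flits; flit_bytes is a hypothetical flit width."""
    if not 0 <= vc < NUM_VIRTUAL_CHANNELS:
        raise ValueError(f"virtual channel {vc} out of range")
    if flit_bytes < 1:
        raise ValueError("flit_bytes must be >= 1")
    chunks = [payload[i:i + flit_bytes]
              for i in range(0, len(payload), flit_bytes)] or [b""]
    last = len(chunks) - 1
    flits = []
    for i, chunk in enumerate(chunks):
        if last == 0:
            kind = FlitType.HEAD_TAIL
        elif i == 0:
            kind = FlitType.HEAD
        elif i == last:
            kind = FlitType.TAIL
        else:
            kind = FlitType.BODY
        # In wormhole switching the head flit reserves the path,
        # so the following flits need no routing information.
        flits.append(Flit(kind, vc, dest if i == 0 else None, chunk))
    return flits
```

In this model a 20-byte payload with 8-byte flits yields a head, a body, and a tail flit, and only the head carries the destination tile.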

The GPU-like core provided with the manycore is highly parameterizable, featuring a lightweight control infrastructure, hardware multithreading, and a vector instruction set targeted at data-parallel kernels (described in the nu+ core architecture section). In particular, the core is organized in 16 processing elements (PEs), each capable of both integer and floating-point operations on independent data, providing SIMD parallelism. Correspondingly, each thread is equipped with a vector register file in which each register can store up to 16 scalar values, allowing each thread to perform vector operations on 16 independent data elements simultaneously.
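The lane-wise execution model can be sketched in a few lines of Python. This is only a behavioural illustration: `HW_LANES` reflects the 16 PEs mentioned above, while the function names are hypothetical and the list comprehension stands in for 16 PEs working in parallel.

```python
import operator

HW_LANES = 16  # from the text: 16 PEs, 16 scalar values per vector register


def make_vector(values):
    """Model a vector register: exactly HW_LANES scalar elements."""
    values = list(values)
    if len(values) != HW_LANES:
        raise ValueError(f"expected {HW_LANES} elements, got {len(values)}")
    return values


def lanewise(op, va, vb):
    """Apply op independently in each lane, one element pair per PE."""
    return [op(x, y) for x, y in zip(make_vector(va), make_vector(vb))]


# One vector add: lane i computes i + 1.
result = lanewise(operator.add, range(HW_LANES), [1] * HW_LANES)
```

Because each lane works on its own element pair, the same function covers integer and floating-point operations, for example `lanewise(operator.mul, [1.5] * 16, [2] * 16)`.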

To ensure scalability, the nu+ manycore implements a sparse directory approach and a distributed L2 cache, described in the Coherence architecture section. Each tile deploys a coherence maintenance infrastructure alongside the accelerator. A cache controller handles the local processing unit's memory requests, turning load and store misses into directory requests over the network. It also handles responses and forwarded requests coming from the network, updating the block state in compliance with the given coherence protocol, while the accelerator remains completely unaware of it.

The architectures of the cache and the directory controllers have been designed with flexibility in mind and are not bound to any specific coherence protocol. They are equipped with a configurable protocol ROM, which provides a simple means for coherence protocol extensions, as it precisely describes the actions to take for each request based on the current block state.
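The idea of a protocol ROM can be illustrated as a lookup table keyed by the current block state and the incoming request. The sketch below uses a simplified MSI-like protocol with transient states omitted; the states, request names, and actions are hypothetical examples and do not reproduce the protocol shipped with nu+.

```python
# Each ROM entry maps (current state, request) -> (actions, next state).
# Entries are illustrative only, not the actual nu+ protocol ROM contents.
PROTOCOL_ROM = {
    ("I", "load"):     (("send_getS",), "S"),
    ("I", "store"):    (("send_getM",), "M"),
    ("S", "load"):     (("hit",), "S"),
    ("S", "store"):    (("send_getM",), "M"),
    ("S", "fwd_inv"):  (("send_inv_ack",), "I"),
    ("M", "load"):     (("hit",), "M"),
    ("M", "store"):    (("hit",), "M"),
    ("M", "fwd_getS"): (("send_data", "writeback"), "S"),
    ("M", "fwd_getM"): (("send_data",), "I"),
}


def step(rom, state, request):
    """Look up the actions and next state for one request."""
    try:
        return rom[(state, request)]
    except KeyError:
        raise ValueError(f"no ROM entry for state {state!r}, "
                         f"request {request!r}") from None


# A block that is loaded, then written, then requested by another tile.
state = "I"
trace = []
for request in ("load", "store", "fwd_getS"):
    actions, state = step(PROTOCOL_ROM, state, request)
    trace.append((request, actions, state))
```

Under this model, extending the protocol amounts to adding or changing table entries, while the lookup logic in `step` stays the same.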

Hardware sections

Include:

Common:

Single Core:

- TODO Cache Controller

Many Core:

Deploy: TODO diagram/schematic, host interaction, memory loading, kernel launch

- TODO System interface: detailed description of the item interface (commands, console) and memory

- TODO System deployment: description of uart_router and memory_controller, with reference to the nexys4ddr template

Testbench: