The NaplesPU Hardware architecture


Latest revision as of 13:11, 1 July 2019

The NaplesPU many-core is a modular and deeply customizable system based on a regular mesh network-on-chip of configurable tiles, designed from the ground up as an extensible, parametric platform suitable for exploring advanced architectural solutions. Developed in the framework of the MANGO FETHPC project, the main objective of NaplesPU is to enable resource-efficient HPC based on special-purpose customized hardware. This led to a modular design and an instruction layout that leaves enough freedom to extend both the standard instruction set and the baseline NPU hardware design discussed hereafter. The project aims to provide a fully customizable and easily extensible many-core system, suitable for exploring advanced software and hardware solutions for tomorrow’s systems.

To this end, the aim is to build an application-driven architecture that achieves the best hardware/software configuration for any data-parallel kernel. Specialized data-parallel accelerators are known to provide higher efficiency than general-purpose processors for code with significant amounts of regular data-level parallelism (DLP). However, every parallel kernel has its own ideal configuration.

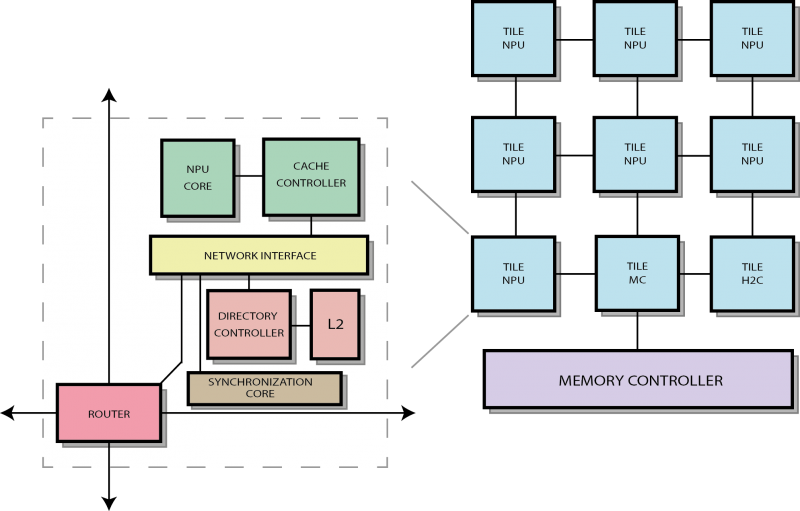

Each NPU tile has the same basic components and provides a configurable, GPU-like, open-source soft core meant to be used as an FPGA overlay. This HPC-oriented accelerator merges the SIMT paradigm with the vector processor model. Furthermore, each tile has a Cache Controller and a Directory Controller, which handle data coherence among cores in different tiles.


Tile Overview

The figure above captures a simplified overview of the NaplesPU many-core. Each NPU tile has the same basic components: a configurable GPU-like accelerator meant to be used as an FPGA overlay, an extensible, protocol-independent coherence subsystem, and a mesh-based networking system that routes hardware messages over the communication network.

The system is based on a 2D mesh of heterogeneous tiles connected by a network-on-chip (NoC, described in the Network architecture section). The NoC routers are tightly coupled with network interface modules that provide packet-based communication over four different virtual channels. A two-stage look-ahead router implements wormhole, flit-based communication. The networking infrastructure supports both intra-tile and inter-tile communication. One virtual channel is dedicated to service message flows; in particular, the many-core system supports a distributed synchronization mechanism based on hardware barriers (see the Synchronization section). Importantly, since tiles rely on simple valid/ready interfaces, any processing unit can be integrated in a tile.
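The valid/ready convention can be illustrated with a small cycle-level model. This is a sketch assuming the usual handshake semantics (a beat is transferred on a cycle where both valid and ready are high, and the producer holds its data until then); the function name and arguments are hypothetical and are not taken from the NaplesPU sources.

```python
from typing import List


def simulate_valid_ready(payloads: List[int], ready_pattern: List[bool]) -> List[int]:
    """Return the payloads accepted by the consumer.

    ready_pattern[i] is the consumer's ready signal on cycle i.
    The producer asserts valid whenever it still has data to send.
    """
    pending = list(payloads)
    accepted: List[int] = []
    for ready in ready_pattern:
        valid = len(pending) > 0
        if valid and ready:
            # Handshake: the head item is transferred on this cycle and
            # the producer moves on to the next one.
            accepted.append(pending.pop(0))
        # Otherwise the producer keeps the same data on the next cycle.
    return accepted


print(simulate_valid_ready([10, 20, 30], [False, True, False, True, True]))
# prints [10, 20, 30]
```

Because the handshake is the only contract between the tile and its processing unit, any unit that honors valid/ready on its ports could be placed behind the same interface.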

The accelerator merges the SIMT paradigm with the vector processor model. The GPU-like model offers promising features for improved resource efficiency: it couples hardware threads with SIMD execution units, reducing control overhead and hiding potentially long latencies. The accelerator effectively exploits multithreading, SIMD operations, and low-overhead control-flow constructs, together with a range of advanced architecture customization capabilities, in order to achieve high utilization of the underlying resources.
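The combination of hardware threads and SIMD lanes can be sketched in a few lines. The model below assumes a per-lane bit mask for predicated (divergent) execution and a simple round-robin policy that skips stalled threads; the value HW_LANE = 16 and both functions are illustrative assumptions, not the NaplesPU scheduler or instruction semantics.

```python
from typing import List, Optional

HW_LANE = 16  # assumed SIMD width; the real value is set in npu_defines.sv


def vector_add_masked(dst: List[int], a: List[int], b: List[int], mask: int) -> List[int]:
    """Lane-wise a + b; lanes whose mask bit is 0 keep their old dst value."""
    assert len(dst) == len(a) == len(b) == HW_LANE
    result = list(dst)
    for lane in range(HW_LANE):
        if (mask >> lane) & 1:  # lane is active under the current control flow
            result[lane] = a[lane] + b[lane]
    return result


def pick_next_thread(last: int, ready: List[bool]) -> Optional[int]:
    """Round-robin choice of the next thread that is not stalled.

    While one thread waits on a long-latency operation (ready[t] is False),
    the others can still issue, which hides that latency.
    """
    n = len(ready)
    for step in range(1, n + 1):
        t = (last + step) % n
        if ready[t]:
            return t
    return None  # every thread is stalled on this cycle
```

A single instruction thus does HW_LANE operations under one fetch and decode, while the thread picker keeps the pipeline busy when some threads are waiting on memory.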

To ensure scalability, the NaplesPU many-core implements a sparse directory approach and a distributed L2 cache, described in the Coherence architecture section. Each tile deploys a coherence maintenance infrastructure alongside the accelerator. A cache controller handles the local processing unit’s memory requests, turning load and store misses into directory requests over the network. It also handles responses and forwarded requests coming from the network, updating the block state according to the given coherence protocol, transparently to the accelerator.
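How a miss turns into a directory request can be sketched as follows. The sketch assumes 64-byte blocks, a 2x2 mesh, home tiles chosen by interleaving block addresses across tiles, and MSI-style request names (GetS for loads, GetM for stores); all of these are illustrative choices, not the documented NaplesPU address mapping or message set.

```python
from typing import NamedTuple

BLOCK_BYTES = 64  # assumed cache block size
NUM_TILES = 4     # assumed 2x2 mesh


class DirectoryRequest(NamedTuple):
    kind: str        # "GetS" (read permission) or "GetM" (write permission)
    block_addr: int  # block-aligned address
    home_tile: int   # tile hosting the directory/L2 slice for this block


def home_tile(address: int) -> int:
    """Interleave consecutive blocks across tiles (hypothetical mapping)."""
    return (address // BLOCK_BYTES) % NUM_TILES


def miss_to_request(op: str, address: int) -> DirectoryRequest:
    """Turn a load or store miss into a request for the block's home directory."""
    kind = {"load": "GetS", "store": "GetM"}[op]
    block_addr = address - address % BLOCK_BYTES
    return DirectoryRequest(kind, block_addr, home_tile(address))
```

Spreading blocks over all tiles is one common way to avoid a single directory hotspot, which is the scalability concern the sparse, distributed design addresses.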

The architectures of the cache and the directory controllers have been designed with flexibility in mind and are not bound to any specific coherence protocol. They are equipped with a configurable protocol ROM, which provides a simple means for coherence protocol extensions, as it precisely describes the actions to take for each request based on the current block state.
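A protocol ROM of this kind behaves like a lookup table indexed by the current block state and the incoming request. The sketch below fills such a table with a few MSI-like rows purely as an example; the state names, request names, and actions are hypothetical and do not reproduce the actual NaplesPU ROM contents.

```python
from typing import Dict, NamedTuple, Tuple


class RomEntry(NamedTuple):
    actions: Tuple[str, ...]  # actions the controller performs
    next_state: str           # block state after the transition


# Hypothetical MSI-like ROM contents. Only rows for stable current states
# (I, S, M) are listed; IS_D, IM_D and SM_D are transient states waiting for data.
PROTOCOL_ROM: Dict[Tuple[str, str], RomEntry] = {
    ("I", "load"):    RomEntry(("send_GetS",), "IS_D"),
    ("I", "store"):   RomEntry(("send_GetM",), "IM_D"),
    ("S", "load"):    RomEntry(("hit",), "S"),
    ("S", "store"):   RomEntry(("send_GetM",), "SM_D"),
    ("S", "Inv"):     RomEntry(("send_InvAck",), "I"),
    ("M", "load"):    RomEntry(("hit",), "M"),
    ("M", "store"):   RomEntry(("hit",), "M"),
    ("M", "FwdGetS"): RomEntry(("send_data_to_requestor", "send_data_to_directory"), "S"),
    ("M", "FwdGetM"): RomEntry(("send_data_to_requestor",), "I"),
}


def rom_lookup(state: str, request: str) -> RomEntry:
    """Index the ROM with (current block state, incoming request)."""
    entry = PROTOCOL_ROM.get((state, request))
    if entry is None:
        raise ValueError(f"no protocol ROM entry for state={state}, request={request}")
    return entry
```

Switching to another protocol then means changing the table contents rather than the lookup logic, which mirrors the protocol independence described above.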

Hardware sections

This section presents the main hardware components and their interaction in the NaplesPU project.

Common components

The basic components used throughout the design are described hereafter, along with the custom types and the provided GPGPU core. These components are also part of the single-core version of the project (described later).

Many Core System

The advanced many-core features, such as the coherence subsystem and the synchronization mechanism, are described hereafter.

Single Core Version

The project provides a single-core version featuring a GPGPU core and a simplified caching system, hereafter described.

Testing coherence subsystem

The coherence subsystem comes along with a dedicated testbench, described below.

Configuring NaplesPU

One of the most important aspects of NaplesPU is parameterization. Many features, such as cache dimensions, can be customized by changing the corresponding value in the header files. The user can set a large number of parameters, including:

- NoC topology and tile-count parameters (in npu_user_defines.sv header file):

| Parameter | Description |
|---|---|
| NoC_X_WIDTH | Number of tiles along the X dimension; must be a power of 2 |
| NoC_Y_WIDTH | Number of tiles along the Y dimension; must be a power of 2 |
| TILE_MEMORY_ID | Tile ID of the memory controller; defines its position in the NoC |
| TILE_H2C_ID | Tile ID of the host interface; defines its position in the NoC |
| TILE_NPU | Number of NPU tiles in the system |
| TILE_HT | Number of heterogeneous tiles in the system |
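The constraints on the NoC parameters can be checked before synthesis with a short script. This sketch assumes that tile IDs are numbered row-major (ID = y * NoC_X_WIDTH + x), which is an illustrative assumption; the helper names are hypothetical and not part of the NaplesPU tooling.

```python
from typing import Tuple


def is_power_of_two(n: int) -> bool:
    return n > 0 and (n & (n - 1)) == 0


def check_noc_config(x_width: int, y_width: int, memory_id: int, h2c_id: int) -> int:
    """Validate the mesh parameters and return the total number of tiles."""
    if not (is_power_of_two(x_width) and is_power_of_two(y_width)):
        raise ValueError("NoC_X_WIDTH and NoC_Y_WIDTH must be powers of 2")
    num_tiles = x_width * y_width
    for name, tile_id in (("TILE_MEMORY_ID", memory_id), ("TILE_H2C_ID", h2c_id)):
        if not 0 <= tile_id < num_tiles:
            raise ValueError(f"{name}={tile_id} is outside the {num_tiles}-tile mesh")
    return num_tiles


def tile_coordinates(tile_id: int, x_width: int) -> Tuple[int, int]:
    """(x, y) position of a tile, assuming row-major tile numbering."""
    return tile_id % x_width, tile_id // x_width
```

For example, a 2x2 mesh with the memory controller on tile 0 and the host interface on tile 1 passes the check and yields 4 tiles.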

- Core-related parameters:

| Parameter | Description |
|---|---|
| THREAD_NUMB | Threads per core (in npu_user_defines.sv header file) |
| NPU_FPU | Allocates the floating-point unit in the NPU core |
| NPU_SPM | Allocates a scratchpad memory in each NPU core |
| HW_LANE | Width of the SIMD extension (in npu_defines.sv header file) |
| REGISTER_NUMBER | Number of registers in both the scalar and vector register files (in npu_defines.sv header file) |

Beware: changing REGISTER_NUMBER changes the position of the special-purpose registers (such as PC or SP), so the compiler has to be modified accordingly.
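To get a feel for how the core parameters interact, the sketch below estimates register-file storage and shows why REGISTER_NUMBER affects the compiler. It assumes 32-bit scalar registers and lanes, one scalar and one vector register file per thread, and a hypothetical layout that maps SP and PC to the two highest scalar indices; the actual NaplesPU register map may differ.

```python
WORD_BITS = 32  # assumed width of a scalar register and of one vector lane

# Hypothetical layout: special-purpose registers occupy the top indices.
SPECIAL_REGS = ("SP", "PC")


def register_file_bits(thread_numb: int, register_number: int, hw_lane: int) -> int:
    """Total scalar + vector register storage for one core, in bits."""
    scalar = register_number * WORD_BITS
    vector = register_number * hw_lane * WORD_BITS
    return thread_numb * (scalar + vector)


def special_register_index(name: str, register_number: int) -> int:
    """Index of a special-purpose register under the hypothetical layout."""
    offset = SPECIAL_REGS.index(name)
    return register_number - len(SPECIAL_REGS) + offset
```

Under this layout, PC sits at index 63 with 64 registers but at index 127 with 128, so code generated for one setting would address the wrong register under the other.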

- Cache-related parameters (in npu_user_defines.sv header file):

| Parameter | Description |
|---|---|
| USER_ICACHE_SET | Number of sets in the instruction caches; must be a power of 2 |
| USER_ICACHE_WAY | Number of ways in the instruction caches; must be a power of 2 |
| USER_DCACHE_SET | Number of sets in the data caches; must be a power of 2 |
| USER_DCACHE_WAY | Number of ways in the data caches; must be a power of 2 |
| USER_L2CACHE_SET | Number of sets in the L2 cache; must be a power of 2 |
| USER_L2CACHE_WAY | Number of ways in the L2 cache; must be a power of 2 |
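The power-of-2 requirement lets the set index be extracted from an address with plain shifts and masks. The sketch below derives the cache geometry from a set/way pair; the 64-byte line size and 32-bit address width are assumptions for illustration, not values taken from the NaplesPU headers.

```python
from typing import Dict, Tuple

LINE_BYTES = 64    # assumed cache line size
ADDRESS_BITS = 32  # assumed address width


def log2_exact(n: int, name: str) -> int:
    """log2 of a power of 2; rejects other values, as the header files require."""
    if n <= 0 or n & (n - 1):
        raise ValueError(f"{name} must be a power of 2, got {n}")
    return n.bit_length() - 1


def cache_geometry(sets: int, ways: int) -> Dict[str, int]:
    offset_bits = log2_exact(LINE_BYTES, "LINE_BYTES")
    index_bits = log2_exact(sets, "sets")
    log2_exact(ways, "ways")  # ways only needs the power-of-2 check here
    return {
        "offset_bits": offset_bits,
        "index_bits": index_bits,
        "tag_bits": ADDRESS_BITS - index_bits - offset_bits,
        "capacity_bytes": sets * ways * LINE_BYTES,
    }


def split_address(address: int, sets: int) -> Tuple[int, int, int]:
    """Split an address into (tag, set index, byte offset) with shifts and masks."""
    offset_bits = log2_exact(LINE_BYTES, "LINE_BYTES")
    index_bits = log2_exact(sets, "sets")
    offset = address & (LINE_BYTES - 1)
    index = (address >> offset_bits) & (sets - 1)
    tag = address >> (offset_bits + index_bits)
    return tag, index, offset
```

With 32 sets and 4 ways, for instance, this gives a 6-bit offset, a 5-bit index, a 21-bit tag, and 8 KiB of capacity under the assumed line size.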

- System-related configurations (in npu_user_defines.sv header file):

| Parameter | Description |
|---|---|
| IO_MAP_BASE_ADDR | Start of the memory space allocated for I/O operations (bypasses coherence) |
| IO_MAP_SIZE | Size of the memory space for I/O operations |
| DIRECTORY_BARRIER | When defined, the system supports a distributed directory over all tiles; otherwise, it allocates a single synchronization master |
| CENTRAL_SYNCH_ID | ID of the single synchronization master; used only when DIRECTORY_BARRIER is undefined |
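The effect of the system parameters can be summarized with two small decisions: whether an access falls in the I/O window that bypasses coherence, and which tile manages a barrier. This sketch assumes a half-open I/O window [base, base + size) and, for the distributed case, a hypothetical mapping of barrier IDs onto tiles by modulo; neither detail is specified above, and the example values are not NaplesPU defaults.

```python
def bypasses_coherence(address: int, io_map_base_addr: int, io_map_size: int) -> bool:
    """True if the address falls in the I/O window [base, base + size)."""
    return io_map_base_addr <= address < io_map_base_addr + io_map_size


def barrier_master(barrier_id: int, num_tiles: int,
                   directory_barrier: bool, central_synch_id: int) -> int:
    """Tile that manages a given barrier."""
    if directory_barrier:
        # Hypothetical distribution: spread barrier IDs across all tiles.
        return barrier_id % num_tiles
    # DIRECTORY_BARRIER undefined: every barrier goes to one master tile.
    return central_synch_id
```

The centralized option is simpler, while distributing barriers avoids funneling all synchronization traffic through one tile.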

Changing the number of threads

As mentioned above, the number of threads is the same for each core, and it can easily be modified by changing the THREAD_NUMB value in the npu_user_defines.sv header file. All threads in a core share the L1 data and instruction caches.

On the hardware side, changing this parameter to the desired value is enough. On the software side, the toolchain must also know the number of threads, since the linker uses it to lay out the thread stacks in memory. This information lives in the misc/lnkrscrpt.ld file of the toolchain repository; in particular, the following row has to be updated accordingly:

threads_per_core = 0x8;

This value is used in the crt0.s file to compute the size and location of each thread's stack.
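The reason the linker value must match THREAD_NUMB can be shown with a simplified stack layout. This is a sketch assuming stacks are carved downward from a fixed end address, one fixed-size slot per thread indexed by a global thread number; the function and its parameters are hypothetical and do not reproduce the actual crt0.s code.

```python
def stack_top(core_id: int, thread_id: int, threads_per_core: int,
              stacks_end: int, stack_size: int) -> int:
    """Initial stack pointer for a hardware thread (hypothetical layout).

    Stacks are carved downward from stacks_end, one stack_size slot per
    thread, indexed by the global thread number.
    """
    global_thread = core_id * threads_per_core + thread_id
    return stacks_end - global_thread * stack_size
```

If the hardware is built with 16 threads per core while the linker script still says threads_per_core = 0x8, thread 8 of core 0 gets the same stack as thread 0 of core 1, so the two threads would silently corrupt each other's data.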