Network

(→General architecture) |

|||

| (12 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

| − | + | In a many-core system, the interconnection network has the vital goal of allowing various devices to communicate efficiently. | |

| − | |||

| − | + | The network infrastructure is mainly designed for coherence and synchronization messages. In particular, NaplesPU coherence system provides a private L1 core cache and a shared L2 directory-based cache, with the L2 cache completely distributed among all tiles. Each address has an associated home tile in charge to handle the state of the cache line is stored. Similarly, the home directory handles coherence requests from cores. | |

| + | On the same infrastructure, service messages flow either for host-to-device communication and handling IO mapped peripherals. | ||

| − | + | Each tile is equipped with multiple devices (called from now on network users) requiring network access. | |

| + | The network infrastructure must thus provide inter-tile and intra-tile communication capabilities. The interface offered to its users must be as generic as possible, and independent of the specific network topology and implementation details. | ||

| − | == | + | == General architecture == |

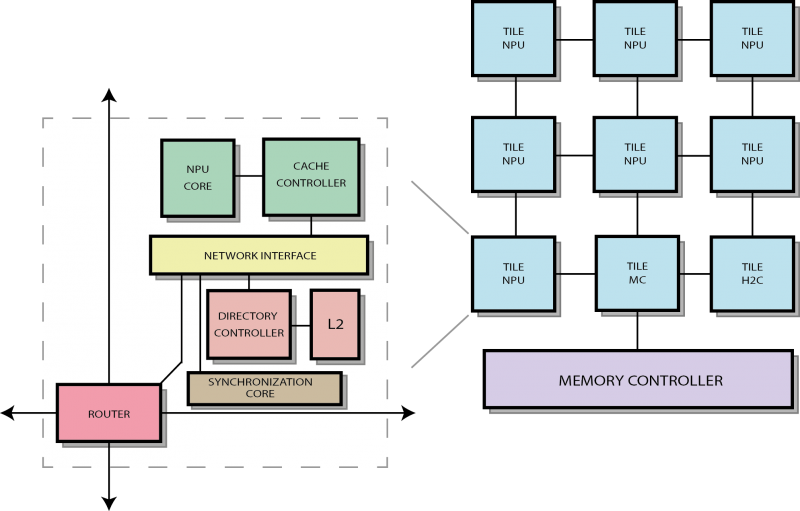

| − | + | [[File:npu_manycore.png|800px|NaplesPU manycore architecture]] | |

| − | |||

| − | + | Tiles are organized as a 2D mesh grid. | |

| − | |||

| − | [[ | + | Every tile has a [[Network router|router]], which is the component responsible for inter-tile communication, and a [[Network interface|network interface]], which offers a transparent interface to network users. |

| − | + | The network interface acts here as a bridge. Its interface must adapt to the requirements of multiple network users, converting requests from user’s format to network format, and backwards. | |

| − | + | Once a request is converted in network format, the router takes charge of its handling. | |

| − | |||

| − | |||

| − | + | The basic communication unit supported by the router is the flit. A request is thus broken down in flits by the network interface and sent to the local router (injection), and requests are rebuilt using flits received from the local router (ejection). | |

| + | The router has no information of application messages, and it just sees them as a stream of flits. As a sequence of flits can be arbitrarily long, the router can offer the maximum flexibility, allowing requests of unspecified length. | ||

| + | The ultimate goal of the router is to ensure that flits are correctly injected, ejected and forwarded (routed) through the mesh. | ||

| − | + | == Routing protocol == | |

| − | |||

| − | + | The NaplesPU system works under the assumption that no flit can be lost. This means that routers must buffer packets, and eventually stall in case of full output buffers, to avoid packet drop. In this process, as routers wait for each other, a circular dependency can potentially be created. Since routers cannot drop packets, a deadlock may occur, and we must prevent it from happening. | |

| − | + | As we route packets through the mesh, a flit can enter a router and leave it from any cardinal direction. It is obvious that routing flits along a straight line cannot form a circular dependency. For this reason, only turns must be analyzed. | |

| + | The simplest solution is to ban some possible turns, in a way that disallows circular dependency. | ||

| − | + | The routing protocol adopted in NaplesPU is called '''XY Dimension-Order Routing''', or DOR. It forces a packet to be routed first along the X-axis, and then along the Y-axis. It is one of the simplest routing protocols, as it takes its decision independently of current network status (a so-called oblivious protocol), and requires little logic to be implemented, although offering deadlock avoidance and shortest path routing. | |

| − | + | Besides that, NaplesPU Network System implements a '''routing look-ahead''' strategy. Although this is an implementation-phase optimization, it may be useful to report it here. Every router does the route calculation, and so the output port calculation, as if it were the next router along the path, and sends the result along with the flit. This means that every router, when receiving a flit, already knows the output port of that flit. This allows reducing router's pipeline length, as now the routing calculation can be done in parallel with other useful work (like output port allocation). Further details are discussed on the [[Network router|router]] page. | |

| − | |||

| − | + | == Virtual channels and flow control == | |

| − | + | Virtual channels are an extensively adopted technique in network design. They allow building multiple virtual networks starting from a single physical one. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | The | + | The main problem they try to solve is head-of-line blocking. It happens when a flit that cannot be routed (maybe because the next router input buffer is full) reaches the head of the input queue, preventing the successive flits, which potentially belong to independent traffic flows, from being served. If those blocked flits belong to different traffic flows, it makes sense to buffer them on different queues. |

| − | |||

| − | + | Virtual channels are called virtual for this reason: there is only a single link between two routers, but the result is like having multiple physical channels dedicated to each traffic flow. It is the router’s responsibility to ensure that virtual channels are properly time multiplexed on the same link. | |

| − | |||

| − | |||

| − | + | Virtual channels can also be used to prevent deadlocks, in case the routing protocol allows them. To achieve this, virtual channels allocation must happen in a fixed order; or an “escape virtual channel” must be provided, whose flits gets routed with a deadlock-free protocol. As long as virtual channels allocation is fair, a flit will eventually be served. | |

| − | |||

| − | |||

| − | + | In NaplesPU the number of virtual channels is represented by the constant parameter VC_PER_PORT, currently set to 4, as many as the type of network messages: ''Requests'', ''Responses'', ''Forwards'', ''Service Messages''. | |

| + | As a given message type is associated with a specific virtual channel, the network interface component must know on which virtual channel it has to inject the flits. The router, as stated before, has no knowledge of application messages. This means that it must ensure as little as to guarantee that messages don’t get routed on wrong virtual channels: for this reason, '''a flit will be kept on the same virtual channel in every router along with its path'''. Besides that, given the absence of a flit reorder logic in the input buffers, '''flits of multiple packets cannot interleave on the same virtual channel'''. That is, it must be ensured that once a packet is partially been sent, no other packets can be granted access to that specific virtual channel, until the request is completely fulfilled. | ||

| − | + | In NaplesPU every virtual channel has a dedicated input buffer, so flow control is also be implemented on a virtual channel basis. This means that every router sends to its neighbour information regarding the status of its buffers, for each virtual channel: they are called ''back-pressure signals''. In particular, back-pressure is implemented using a single signal, called '''ON/OFF''', which when turned high prevents other routers from sending packets to that specific virtual channel. | |

| − | + | == Data structures == | |

| − | |||

| − | + | The network infrastructure uses a bunch of simple data structures to represent information. | |

| − | The | ||

| − | + | The main data structure is the flit, which is the exact amount of information transferred between routers. | |

| − | + | typedef struct packed { | |

| + | flit_type_t flit_type; | ||

| + | vc_id_t vc_id; | ||

| + | port_t next_hop_port; | ||

| + | tile_address_t destination; | ||

| + | tile_destination_t core_destination; | ||

| + | logic [`PAYLOAD_W-1:0] payload; | ||

| + | } flit_t; | ||

| − | + | First of all, we must know which position a flit occupies in the original packet. In particular, we are only interested in a few scenarios: | |

| − | + | * this is a head flit (the first flit of a packet): it represents the start of a request; the router must decide when to grant access to the output virtual channel; | |

| − | + | * this is a body flit (not the first nor the last): the request has already been granted access to the output virtual channel, and body flits must leave from that same virtual channel, moreover the grant must be held; | |

| − | + | * this is a tail flit (the last flit of a packet): after serving this flit as a body flit, the grant can be released; | |

| − | + | * this is a head-tail flit (a packet composed of one flit): the flit gets routed as a head flit and the grant is released; | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | The | + | The flit type is thus defined in this way. |

| − | + | typedef enum logic [`FLIT_TYPE_W-1 : 0] { | |

| + | HEADER, | ||

| + | BODY, | ||

| + | TAIL, | ||

| + | HT | ||

| + | } flit_type_t; | ||

| − | + | The virtual channel id is a numeric identifier. As stated before, the router has no knowledge of each virtual channel semantic, although it is reported as a comment on each line. | |

| − | + | typedef enum logic [`VC_ID_W-1 : 0] { | |

| + | VC0, // Request | ||

| + | VC1, // Response Inject | ||

| + | VC2, // Fwd | ||

| + | VC3 // Service VC | ||

| + | } vc_id_t; | ||

| − | + | Due to the look-ahead routing technique, the next hop port field is the output port through which the flit must leave the current router. The output port at the current router is in fact calculated by the previous router on the path, as explained in [[#Routing protocol|this paragraph]]. Each router has 5 I/O ports: one for each cardinal direction, and the local injection/ejection port. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | typedef enum logic [`PORT_NUM_W-1 : 0 ] { | |

| + | LOCAL = 0, | ||

| + | EAST = 1, | ||

| + | NORTH = 2, | ||

| + | WEST = 3, | ||

| + | SOUTH = 4 | ||

| + | } port_t; | ||

| − | + | As tiles are organized in a mesh, they can be identified by a two-dimensional coordinate. | |

| − | + | typedef struct packed { | |

| + | logic [`TOT_Y_NODE_W-1:0] y; | ||

| + | logic [`TOT_X_NODE_W-1:0] x; | ||

| + | } tile_address_t; | ||

| − | + | To support intra-tile addressing, an additional field is provided. At the moment, the devices that need to be addressed are the cache controller and the directory controller. | |

| − | + | typedef enum logic [`DEST_TILE_W - 1 : 0] { | |

| + | TO_DC = `DEST_TILE_W'b00001, | ||

| + | TO_CC = `DEST_TILE_W'b00010 | ||

| + | } tile_destination_t; | ||

| − | + | == Implementation == | |

| − | + | *[[Network router]] | |

| − | + | *[[Network interface]] | |

| − | [[ | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | * | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | [[ | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

Latest revision as of 14:09, 25 June 2019

In a many-core system, the interconnection network has the vital goal of allowing various devices to communicate efficiently.

The network infrastructure is mainly designed for coherence and synchronization messages. In particular, the NaplesPU coherence system provides a private L1 cache per core and a shared, directory-based L2 cache that is fully distributed among all tiles. Each address has an associated home tile, which stores and handles the state of the corresponding cache line. Similarly, the home directory handles coherence requests from cores. Service messages, used both for host-to-device communication and for handling I/O-mapped peripherals, flow over the same infrastructure.

Each tile is equipped with multiple devices (hereafter called network users) that require network access. The network infrastructure must therefore provide both inter-tile and intra-tile communication. The interface offered to network users must be as generic as possible, and independent of the specific network topology and implementation details.


General architecture

![NaplesPU manycore architecture](image-1.png)

Tiles are organized as a 2D mesh grid.

Every tile has a router, the component responsible for inter-tile communication, and a network interface, which offers a transparent interface to network users. The network interface acts as a bridge: it must adapt to the requirements of multiple network users, converting requests from each user's format to network format and vice versa. Once a request has been converted into network format, the router takes charge of it.

The basic communication unit supported by the router is the flit. The network interface breaks a request down into flits and sends them to the local router (injection); conversely, it rebuilds requests from the flits received from the local router (ejection). The router has no knowledge of application messages and sees them only as a stream of flits. Since a sequence of flits can be arbitrarily long, the router offers maximum flexibility by allowing requests of unspecified length. The ultimate goal of the router is to ensure that flits are correctly injected, ejected and forwarded (routed) through the mesh.
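As a rough illustration of injection, the sketch below computes how many flits a network interface needs for a message and which payload slice each flit carries. It is a hypothetical model, not NaplesPU code: PAYLOAD_W = 64, the 512-bit maximum message width and the names npu_packetize_sketch_pkg, num_flits and flit_payload are all assumptions.

```systemverilog
// Hypothetical packetization sketch (not NaplesPU code).
// PAYLOAD_W and MAX_MSG_W are assumed values.
package npu_packetize_sketch_pkg;
  localparam int PAYLOAD_W = 64;
  localparam int MAX_MSG_W = 512;

  // Ceiling division: a partially filled last flit still needs a whole flit.
  function automatic int num_flits(input int msg_w);
    return (msg_w + PAYLOAD_W - 1) / PAYLOAD_W;
  endfunction

  // Payload carried by flit number idx (0 = first flit): flit idx holds
  // message bits [idx*PAYLOAD_W +: PAYLOAD_W].
  // idx must be smaller than MAX_MSG_W / PAYLOAD_W.
  function automatic logic [PAYLOAD_W-1:0] flit_payload(
      input logic [MAX_MSG_W-1:0] msg,
      input int                   idx);
    return msg[idx*PAYLOAD_W +: PAYLOAD_W];
  endfunction
endpackage
```

On ejection, the same slices would simply be written back in flit order to rebuild the message.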

Routing protocol

The NaplesPU system works under the assumption that no flit can be lost. Routers must therefore buffer packets, and stall when output buffers are full, rather than drop them. In this process, as routers wait for each other, a circular dependency can be created. Since routers cannot drop packets, such a cycle results in a deadlock, which must be prevented.

As packets are routed through the mesh, a flit can enter and leave a router from any cardinal direction. Routing flits along a straight line cannot form a circular dependency, so only turns need to be analyzed. The simplest solution is to ban a subset of the possible turns so that no cycle can form.

The routing protocol adopted in NaplesPU is called XY Dimension-Order Routing (DOR). It forces a packet to be routed first along the X-axis, and then along the Y-axis; turns from the Y-axis back to the X-axis therefore never occur. It is one of the simplest routing protocols: it takes decisions independently of the current network status (a so-called oblivious protocol) and requires little logic to implement, while still guaranteeing deadlock freedom and shortest-path routing.
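The XY rule can be sketched as a combinational function that picks the output port from the current and destination coordinates. The port encoding mirrors port_t from the Data structures section, but the direction convention (EAST towards increasing x, SOUTH towards increasing y), the 4x4 mesh widths and the names xy_dor_sketch_pkg and xy_route are assumptions made for illustration.

```systemverilog
// Minimal XY dimension-order routing sketch.
// Assumed: 4x4 mesh, EAST = increasing x, SOUTH = increasing y.
package xy_dor_sketch_pkg;
  localparam int X_W = 2, Y_W = 2;

  typedef enum logic [2:0] {
    LOCAL = 0, EAST = 1, NORTH = 2, WEST = 3, SOUTH = 4
  } port_t;

  typedef struct packed {
    logic [Y_W-1:0] y;
    logic [X_W-1:0] x;
  } tile_address_t;

  // Output port at router `cur` for a flit headed to `dest`:
  // resolve the X offset first, then the Y offset, then eject locally.
  function automatic port_t xy_route(input tile_address_t cur,
                                     input tile_address_t dest);
    if (dest.x > cur.x)      return EAST;
    else if (dest.x < cur.x) return WEST;
    else if (dest.y > cur.y) return SOUTH;
    else if (dest.y < cur.y) return NORTH;
    else                     return LOCAL;
  endfunction
endpackage
```

Because the X offset is always resolved before the Y offset, a flit travelling north or south never turns east or west again; this is exactly the turn restriction that keeps the dependency graph acyclic.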

In addition, the NaplesPU network implements a routing look-ahead strategy. Although this is an implementation-phase optimization, it is worth reporting here. Each router performs the route calculation, that is, the output port calculation, on behalf of the next router along the path, and sends the result along with the flit. Every router therefore already knows the output port of a flit when it receives it. This shortens the router pipeline, as the routing calculation can be done in parallel with other useful work (such as output port allocation). Further details are discussed on the router page.
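A minimal sketch of look-ahead routing under the same assumed conventions as a plain XY route: the router already knows the flit's output port (next_hop_port), derives the coordinates of the neighbour on that port, and runs the routing function as that neighbour would. The names lookahead_sketch_pkg, neighbour and lookahead_port, the 4x4 mesh and the direction convention are hypothetical.

```systemverilog
// Look-ahead routing sketch. Assumed: 4x4 mesh,
// EAST = increasing x, SOUTH = increasing y.
package lookahead_sketch_pkg;
  typedef enum logic [2:0] {
    LOCAL = 0, EAST = 1, NORTH = 2, WEST = 3, SOUTH = 4
  } port_t;

  typedef struct packed {
    logic [1:0] y;
    logic [1:0] x;
  } tile_address_t;

  // Plain XY routing decision taken at tile `cur`.
  function automatic port_t xy_route(input tile_address_t cur,
                                     input tile_address_t dest);
    if (dest.x > cur.x)      return EAST;
    else if (dest.x < cur.x) return WEST;
    else if (dest.y > cur.y) return SOUTH;
    else if (dest.y < cur.y) return NORTH;
    else                     return LOCAL;
  endfunction

  // Coordinates of the tile reached by leaving `cur` through `port`.
  function automatic tile_address_t neighbour(input tile_address_t cur,
                                              input port_t port);
    tile_address_t n;
    n = cur;
    case (port)
      EAST:    n.x = cur.x + 1'b1;
      WEST:    n.x = cur.x - 1'b1;
      SOUTH:   n.y = cur.y + 1'b1;
      NORTH:   n.y = cur.y - 1'b1;
      default: ;  // LOCAL: the flit is ejected at cur
    endcase
    return n;
  endfunction

  // out_port is the flit's next_hop_port at this router (already known).
  // The result is the next_hop_port written into the outgoing flit,
  // i.e. the port the flit will use at the next router.
  function automatic port_t lookahead_port(input tile_address_t cur,
                                           input port_t out_port,
                                           input tile_address_t dest);
    return xy_route(neighbour(cur, out_port), dest);
  endfunction
endpackage
```

Since the next router receives its output port ready-made, its own route computation for the following hop can overlap with port allocation instead of preceding it.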

Virtual channels and flow control

Virtual channels are an extensively adopted technique in network design. They allow building multiple virtual networks starting from a single physical one.

The main problem they address is head-of-line blocking. It occurs when a flit that cannot be routed (for instance, because the input buffer of the next router is full) reaches the head of the input queue, preventing the subsequent flits from being served. Since those blocked flits may belong to independent traffic flows, it makes sense to buffer different flows on different queues.

Virtual channels are called virtual because there is only a single physical link between two routers, yet the result is similar to having a dedicated physical channel for each traffic flow. The router is responsible for properly time-multiplexing the virtual channels on that link.
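One common way to time-multiplex virtual channels fairly on a link is a round-robin choice among the virtual channels that have a flit ready. Whether the NaplesPU router uses exactly this policy is not stated here; the sketch below, with the hypothetical package vc_rr_sketch_pkg and function rr_pick, only illustrates the idea.

```systemverilog
// Hypothetical round-robin selection of the VC that drives the link.
package vc_rr_sketch_pkg;
  localparam int VC_PER_PORT = 4;

  // Returns the VC to put on the link this cycle, or -1 if none is ready.
  // req[i]: VC i has a flit ready and its downstream ON/OFF signal is low.
  // last:   VC granted most recently; the search starts right after it,
  //         so a VC that keeps requesting is served within VC_PER_PORT grants.
  function automatic int rr_pick(input logic [VC_PER_PORT-1:0] req,
                                 input int last);
    int i;
    for (int k = 1; k <= VC_PER_PORT; k++) begin
      i = (last + k) % VC_PER_PORT;
      if (req[i]) return i;
    end
    return -1;
  endfunction
endpackage
```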

Virtual channels can also be used to prevent deadlocks when the routing protocol itself does not rule them out. To achieve this, either virtual channels must be allocated in a fixed order, or an “escape virtual channel” must be provided, whose flits get routed with a deadlock-free protocol. As long as virtual channel allocation is fair, a flit will eventually be served.

In NaplesPU the number of virtual channels is set by the constant parameter VC_PER_PORT, currently 4, one for each type of network message: Requests, Responses, Forwards and Service Messages. Since each message type is associated with a specific virtual channel, the network interface must know on which virtual channel to inject the flits. The router, as stated before, has no knowledge of application messages, so it must at least guarantee that messages are never moved to the wrong virtual channel: for this reason, a flit is kept on the same virtual channel in every router along its path. In addition, since the input buffers have no flit reordering logic, flits of different packets cannot interleave on the same virtual channel. That is, once a packet has been partially sent, no other packet can be granted access to that virtual channel until the first packet has been completely sent.
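The no-interleaving rule can be modelled as a lock on each output virtual channel: a head (or head-tail) flit may take the channel only when it is free, body and tail flits may use it only if their packet owns it, and a tail or head-tail flit frees it. The sketch is hypothetical; the vc_state_t record, its owner field (the input port of the owning packet), the 2-bit flit type width and the function names are assumptions.

```systemverilog
// Hypothetical per-output-VC lock that prevents packet interleaving.
package vc_lock_sketch_pkg;
  typedef enum logic [1:0] {HEADER, BODY, TAIL, HT} flit_type_t;  // width assumed

  typedef struct packed {
    logic       locked;  // a packet currently owns this output VC
    logic [2:0] owner;   // input port of the owning packet (assumed encoding)
  } vc_state_t;

  // May a flit of type ft, arriving on input port in_port, use this output VC?
  function automatic logic may_send(input vc_state_t s, input flit_type_t ft,
                                    input logic [2:0] in_port);
    if (ft == HEADER || ft == HT) return !s.locked;                   // new packet: VC must be free
    else                          return s.locked && (s.owner == in_port);  // only the owner continues
  endfunction

  // Lock state after that flit has been sent.
  function automatic vc_state_t after_send(input vc_state_t s, input flit_type_t ft,
                                           input logic [2:0] in_port);
    vc_state_t n;
    n = s;
    case (ft)
      HEADER:   begin n.locked = 1'b1; n.owner = in_port; end  // acquire and hold
      TAIL, HT: n.locked = 1'b0;                               // release
      default:  ;                                              // BODY: keep holding
    endcase
    return n;
  endfunction
endpackage
```

Because a flit never changes virtual channel, the input VC of the owning packet equals the output VC, so recording the input port alone is enough to identify the owner in this model.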

In NaplesPU every virtual channel has a dedicated input buffer, so flow control is also implemented on a per-virtual-channel basis. Every router sends its neighbours information about the status of its buffers for each virtual channel; these are called back-pressure signals. In particular, back-pressure is implemented with a single signal per virtual channel, called ON/OFF, which, when high, prevents the neighbouring router from sending packets on that specific virtual channel.
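A minimal sketch of how the downstream side might generate ON/OFF for one virtual channel: it tracks buffer occupancy and raises the signal while the free space is at or below a threshold, leaving room for flits already in flight when the signal changes. Only the counter is modelled, not the storage; DEPTH, THRESHOLD and the module name onoff_vc_buffer_sketch are assumptions, not NaplesPU values.

```systemverilog
// Hypothetical ON/OFF generation for a single VC input buffer.
module onoff_vc_buffer_sketch #(
  parameter int DEPTH     = 8,  // assumed buffer depth (flits)
  parameter int THRESHOLD = 2   // assumed free slots reserved for in-flight flits
) (
  input  logic clk,
  input  logic rst,
  input  logic push,    // flit arriving from the upstream router on this VC
  input  logic pop,     // flit leaving this buffer (never asserted when empty)
  output logic on_off,  // high = upstream must stop sending on this VC
  output int   count    // current occupancy
);
  always_ff @(posedge clk) begin
    if (rst) count <= 0;
    else     count <= count + int'(push) - int'(pop);
  end

  // Stop the upstream router once free space falls to the threshold.
  assign on_off = (DEPTH - count) <= THRESHOLD;
endmodule
```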

Data structures

The network infrastructure uses a few simple data structures to represent information.

The main data structure is the flit, which is the exact amount of information transferred between routers.

```systemverilog
typedef struct packed {
    flit_type_t            flit_type;
    vc_id_t                vc_id;
    port_t                 next_hop_port;
    tile_address_t         destination;
    tile_destination_t     core_destination;
    logic [`PAYLOAD_W-1:0] payload;
} flit_t;
```
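With concrete widths plugged in, the packed flit_t can be checked for its total width, i.e. the data width of a link excluding control signals such as ON/OFF. The widths below replace the `FLIT_TYPE_W, `VC_ID_W, `PORT_NUM_W, `TOT_X_NODE_W, `TOT_Y_NODE_W, `DEST_TILE_W and `PAYLOAD_W macros with assumed values (a 4x4 mesh, a 64-bit payload, a 5-bit core destination); they are not NaplesPU's actual configuration, and flit_sketch_pkg is a hypothetical name.

```systemverilog
// Flit layout sketch with assumed widths in place of the `... macros.
package flit_sketch_pkg;
  localparam int FLIT_TYPE_W = 2, VC_ID_W = 2, PORT_NUM_W = 3;
  localparam int TOT_X_NODE_W = 2, TOT_Y_NODE_W = 2;
  localparam int DEST_TILE_W = 5, PAYLOAD_W = 64;

  typedef enum logic [FLIT_TYPE_W-1:0] {HEADER, BODY, TAIL, HT} flit_type_t;
  typedef enum logic [VC_ID_W-1:0] {VC0, VC1, VC2, VC3} vc_id_t;
  typedef enum logic [PORT_NUM_W-1:0] {
    LOCAL = 0, EAST = 1, NORTH = 2, WEST = 3, SOUTH = 4
  } port_t;
  typedef struct packed {
    logic [TOT_Y_NODE_W-1:0] y;
    logic [TOT_X_NODE_W-1:0] x;
  } tile_address_t;
  typedef enum logic [DEST_TILE_W-1:0] {
    TO_DC = 5'b00001, TO_CC = 5'b00010
  } tile_destination_t;

  typedef struct packed {
    flit_type_t           flit_type;         // most significant field
    vc_id_t               vc_id;
    port_t                next_hop_port;
    tile_address_t        destination;
    tile_destination_t    core_destination;
    logic [PAYLOAD_W-1:0] payload;           // least significant field
  } flit_t;

  // Header overhead with these widths: 2 + 2 + 3 + (2 + 2) + 5 = 16 bits.
  localparam int FLIT_W = $bits(flit_t);  // 16 + 64 = 80
endpackage
```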

First of all, we must know which position a flit occupies in the original packet. In particular, we are only interested in a few scenarios:

- head flit (the first flit of a packet): it represents the start of a request; the router must decide when to grant it access to the output virtual channel;

- body flit (neither the first nor the last): the request has already been granted access to the output virtual channel, so body flits must leave from that same virtual channel, and the grant must be held;

- tail flit (the last flit of a packet): it is served like a body flit, after which the grant can be released;

- head-tail flit (a packet made of a single flit): the flit is routed as a head flit and the grant is then released.

The flit type is thus defined in this way.

```systemverilog
typedef enum logic [`FLIT_TYPE_W-1 : 0] {
    HEADER,
    BODY,
    TAIL,
    HT
} flit_type_t;
```
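On the injection side, the network interface must tag each flit with its position in the packet. A minimal sketch of that decision, assuming a 2-bit flit type; the package flit_type_sketch_pkg and the function flit_type_of are hypothetical.

```systemverilog
// Hypothetical mapping from flit position to flit type.
package flit_type_sketch_pkg;
  typedef enum logic [1:0] {HEADER, BODY, TAIL, HT} flit_type_t;  // width assumed

  // Type of flit number idx (0-based) in a packet of n_flits flits.
  function automatic flit_type_t flit_type_of(input int idx, input int n_flits);
    if (n_flits == 1)            return HT;      // single-flit packet
    else if (idx == 0)           return HEADER;  // requests the output VC
    else if (idx == n_flits - 1) return TAIL;    // releases the output VC
    else                         return BODY;    // holds the grant
  endfunction
endpackage
```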

The virtual channel id is a numeric identifier. As stated before, the router has no knowledge of the semantics of each virtual channel; the intended use is reported as a comment on each line.

```systemverilog
typedef enum logic [`VC_ID_W-1 : 0] {
    VC0, // Request
    VC1, // Response Inject
    VC2, // Fwd
    VC3  // Service VC
} vc_id_t;
```
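Since the router treats VC ids as opaque numbers, the mapping from message type to virtual channel lives in the network interface. A minimal sketch of that mapping, following the comments above and assuming a 2-bit VC id; the msg_class_t type, the vc_map_sketch_pkg package and the vc_for function are hypothetical.

```systemverilog
// Hypothetical message-class to VC mapping in the network interface.
package vc_map_sketch_pkg;
  typedef enum logic [1:0] {VC0, VC1, VC2, VC3} vc_id_t;  // `VC_ID_W = 2 assumed

  // Hypothetical message classes seen by the network interface.
  typedef enum logic [1:0] {
    MSG_REQUEST, MSG_RESPONSE, MSG_FORWARD, MSG_SERVICE
  } msg_class_t;

  // Injection VC for each class; every flit of the message uses it.
  function automatic vc_id_t vc_for(input msg_class_t c);
    case (c)
      MSG_REQUEST:  return VC0;
      MSG_RESPONSE: return VC1;
      MSG_FORWARD:  return VC2;
      default:      return VC3;  // MSG_SERVICE
    endcase
  endfunction
endpackage
```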

Due to the look-ahead routing technique, the next hop port field holds the output port through which the flit must leave the current router; this port was calculated by the previous router on the path, as explained in the Routing protocol section. Each router has 5 I/O ports: one for each cardinal direction, plus the local injection/ejection port.

```systemverilog
typedef enum logic [`PORT_NUM_W-1 : 0 ] {
    LOCAL = 0,
    EAST  = 1,
    NORTH = 2,
    WEST  = 3,
    SOUTH = 4
} port_t;
```

As tiles are organized in a mesh, they can be identified by a two-dimensional coordinate.

```systemverilog
typedef struct packed {
    logic [`TOT_Y_NODE_W-1:0] y;
    logic [`TOT_X_NODE_W-1:0] x;
} tile_address_t;
```
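Software and configuration often number tiles linearly, so a conversion between a tile index and its (x, y) coordinate is handy. The sketch assumes row-major numbering (id = y * X_NODES + x) on a 4x4 mesh; both the numbering scheme and the names tile_coord_sketch_pkg, id_to_coord and coord_to_id are hypothetical.

```systemverilog
// Hypothetical tile index <-> coordinate conversion (row-major, 4x4 mesh).
package tile_coord_sketch_pkg;
  localparam int X_NODES = 4, Y_NODES = 4;
  localparam int X_W = $clog2(X_NODES), Y_W = $clog2(Y_NODES);

  typedef struct packed {
    logic [Y_W-1:0] y;
    logic [X_W-1:0] x;
  } tile_address_t;

  function automatic tile_address_t id_to_coord(input int id);
    tile_address_t a;
    a.x = id % X_NODES;  // column
    a.y = id / X_NODES;  // row
    return a;
  endfunction

  function automatic int coord_to_id(input tile_address_t a);
    return a.y * X_NODES + a.x;
  endfunction
endpackage
```

With a power-of-two number of columns, as assumed here, the packed {y, x} value already equals the row-major index, so the conversion reduces to a plain reinterpretation of the bits.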

To support intra-tile addressing, an additional field (core_destination) is provided. At the moment, the devices that need to be addressed are the cache controller and the directory controller.

```systemverilog
typedef enum logic [`DEST_TILE_W - 1 : 0] {
    TO_DC = `DEST_TILE_W'b00001,
    TO_CC = `DEST_TILE_W'b00010
} tile_destination_t;
```

Implementation

- Network router

- Network interface