Difference between revisions of "Main Page"

Latest revision as of 15:10, 21 July 2019

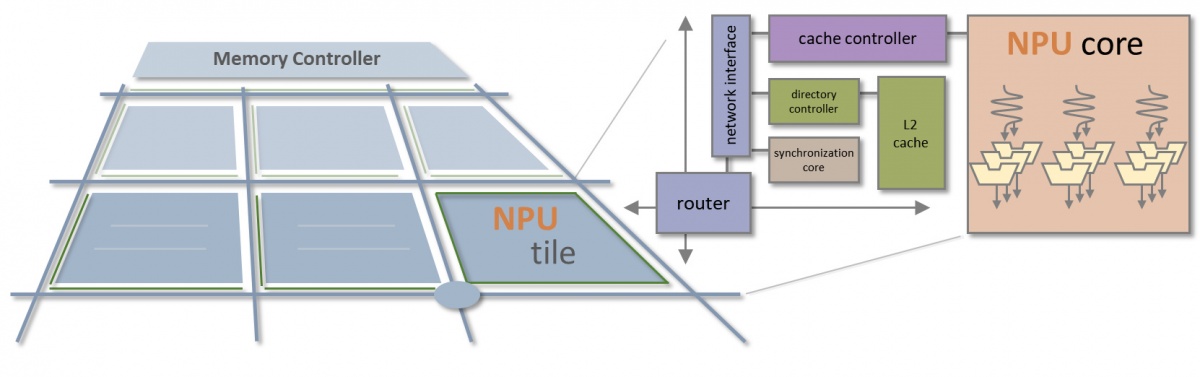

The Naples Processing Unit, dubbed NaplesPU or NPU, is a comprehensive open-source manycore accelerator, encompassing all the architecture layers from the compute core up to the on-chip interconnect, the coherent memory hierarchy, and the compilation toolchain. Entirely written in SystemVerilog HDL, NaplesPU exploits the three forms of parallelism that you normally find in modern compute architectures, particularly in heterogeneous accelerators such as GPU devices: vector parallelism, hardware multithreading, and manycore organization. Equipped with a complete LLVM-based compiler targeting the NaplesPU vector ISA, the NPU open-source project will let you experiment with all of the flavors of today’s manycore technologies.

The NPU manycore architecture is based on a parameterizable mesh of configurable tiles connected through a Network on Chip (NoC). Each tile has a Cache Controller and a Directory Controller, handling data coherence between different cores in different tiles. The compute core is based on a vector pipeline featuring a lightweight control unit, so as to devote most of the hardware resources to the acceleration of data-parallel kernels. Memory operations and long-latency instructions are masked by exploiting hardware multithreading. Each hardware thread (roughly equivalent to a wavefront in the OpenCL terminology or a CUDA warp in the NVIDIA terminology) has its own PC, register file, and control registers. The number of threads in the NaplesPU system is user-configurable.


Getting started

This section shows how to approach the project in order to simulate or implement a kernel for the NaplesPU architecture. Here, a kernel is a complex application, such as matrix multiplication or matrix transposition, written in a high-level programming language such as C/C++.

Required software

Simulation or implementation of any kernel relies on the following dependencies:

- Git

- Xilinx Vivado 2018.2 or ModelSim (e.g. Questa Sim-64 vsim 10.6c_1)

- NaplesPU toolchain
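Before going further, the presence of these dependencies can be checked from the shell. The following is a minimal sketch, not part of the NaplesPU repository: the helper names `have_tool` and `check_deps` are hypothetical, and the executable names `vivado` and `vsim` are assumptions about a typical installation whose settings script has already been sourced. The NaplesPU toolchain is not checked, since its executable names are not given here.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given command is available on PATH.
have_tool() {
    command -v "$1" >/dev/null 2>&1
}

# Git is always required; at least one of the two simulators must be present.
# "vivado" and "vsim" are assumed executable names, not taken from the project.
check_deps() {
    missing=0
    if ! have_tool git; then
        echo "missing: git"
        missing=1
    fi
    if ! have_tool vivado && ! have_tool vsim; then
        echo "missing: vivado or vsim (source the simulator settings first)"
        missing=1
    fi
    return "$missing"
}

check_deps || echo "some dependencies are missing"
```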

Building process

The first step is to obtain the NaplesPU source code by cloning the official repository.

In an Ubuntu Linux environment, this is done by running the following command:

$ git clone https://github.com/AlessandroCilardo/NaplesPU

In the NaplesPU repository, the toolchain is a git submodule, so it must be initialized and updated. In an Ubuntu Linux environment, run the following command from the root folder of the repository:

$ git submodule update --init

Then, the third step is to install the toolchain. This process is described on the Toolchain page (http://www.naplespu.com/doc/index.php?title=Toolchain).

Simulate a kernel

The following folders are of particular interest for the purpose:

- software, stores all kernels;

- tools, stores all scripts for simulation.

There are three ways to simulate a kernel:

- running the test.sh script;

- running setup_project.sh from the root folder of the repository, if the simulator is Vivado;

- running simulate.sh from the root folder of the repository, if the simulator is ModelSim.

First of all, source the Vivado or ModelSim environment settings in the shell. This step is mandatory for all three ways. In an Ubuntu Linux environment:

$ source Vivado/folder/location/settingsXX.sh

where XX depends on the installed version of Vivado (32 or 64 bit).

test.sh script

The test.sh script, located in the npu/tools folder, runs all the kernels listed in it and compares the output from the NPU with the expected result produced by a standard x86 architecture:

$ ./test.sh [option]

Options are:

- -h, --help show this help

- -t, --tool=vsim or vivado specify the tool to use, default: vsim

- -cn, --core-numb=VALUE specify the core number, default: 1

- -tn, --thread-numb=VALUE specify the thread number, default: 8

The test.sh script automatically compiles the kernels and runs them on both the NaplesPU and the x86 architecture. Once the simulation has terminated, a Python script compares the results of the two executions for each kernel to verify correctness.

In the tools folder, the file cosim.log stores the output of the simulator.
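As an illustration of how the options above combine, the sketch below builds a test.sh command line from the long option forms listed in this section and prints it instead of executing it. The function name `test_cmd` is hypothetical, and the check on the tool name only reflects the two values documented for --tool.

```shell
#!/bin/sh
# Print (rather than run) a test.sh command line using the documented long options.
# Arguments: tool (vsim or vivado), number of cores, number of threads.
test_cmd() {
    tool=$1; cores=$2; threads=$3
    case "$tool" in
        vsim|vivado) ;;
        *) echo "unsupported tool: $tool" >&2; return 1 ;;
    esac
    printf './test.sh --tool=%s --core-numb=%s --thread-numb=%s\n' \
        "$tool" "$cores" "$threads"
}

# Example: Vivado, 2 cores, 4 threads per core.
test_cmd vivado 2 4
```

The printed line is meant to be run from the tools folder, where test.sh is located.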

setup_project.sh script

The setup_project.sh script can be run as follows from the root of the project:

$ tools/vivado/setup_project.sh [option]

Options are:

- -h, --help show this help

- -k, --kernel=KERNEL_NAME specify the kernel to use

- -s, --single-core select the single core configuration, by default the manycore is selected

- -c, --core-mask=VALUE specify the core activation mask, default: 1

- -t, --thread-mask=VALUE specify the thread activation mask, default: FF

- -m, --mode=gui or batch specify the tool mode, it can run in either gui or batch mode, default: gui

This script starts the kernel specified in the command. The kernel must already be compiled before it is run on the NaplesPU architecture:

$ tools/vivado/setup_project.sh -k mmsc -c 3 -t $(( 16#F )) -m gui

The -c 3 parameter passes the core activation bitmask: 3 is (11)2, hence tiles 0 and 1 will start their cores. The -t $(( 16#F )) parameter sets the thread activation bitmask, which states which threads are active in each core: F is (00001111)2, so threads 0 to 3 are running. The -m gui parameter selects the mode in which the simulator runs.
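The mask values used in this example can be checked from the shell. The sketch below is only an illustration and not part of the repository: the function name `active_bits` is hypothetical, and it simply lists the positions of the bits set in a mask, which correspond to the cores or threads that the mask activates.

```shell
#!/bin/sh
# Print the indices of the bits set in a mask, lowest index first.
# The argument may be decimal (3) or hexadecimal with a 0x prefix (0xF).
active_bits() {
    mask=$(( $1 ))
    idx=0
    out=""
    while [ "$mask" -gt 0 ]; do
        if [ $(( mask & 1 )) -eq 1 ]; then
            out="${out:+$out }$idx"
        fi
        mask=$(( mask >> 1 ))
        idx=$(( idx + 1 ))
    done
    echo "$out"
}

active_bits 3      # core mask from the example: prints "0 1"
active_bits 0xF    # thread mask, same value as $(( 16#F )) in bash: prints "0 1 2 3"
```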

simulate.sh script

The simulate.sh script can be run as follows from the root of the project:

$ tools/modelsim/simulate.sh [option]

Options:

- -h, --help show this help

- -k, --kernel=KERNEL_NAME specify the kernel to use

- -s, --single-core select the single core configuration, by default the manycore is selected

- -c, --core-mask=VALUE specify the core activation mask, default: 1

- -t, --thread-mask=VALUE specify the thread activation mask, default: FF

- -m, --mode=gui or batch specify the tool mode, it can run in either gui or batch mode, default: gui

This script starts the kernel specified in the command. The kernel must already be compiled before it is run on the NaplesPU architecture.
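A possible invocation, mirroring the Vivado example, is sketched below. It is an assumption rather than a command taken from the project: it supposes that simulate.sh accepts the short options listed above with decimal mask values, as setup_project.sh does in its example. The helper names `low_bits_mask` and `simulate_cmd` are hypothetical, and the command line is printed rather than executed.

```shell
#!/bin/sh
# Mask with the lowest n bits set, e.g. 4 -> 15, which is (1111)2.
low_bits_mask() {
    echo $(( (1 << $1) - 1 ))
}

# Print a simulate.sh command line that enables the first `cores` cores
# and the first `threads` threads of each core, in batch mode.
simulate_cmd() {
    kernel=$1; cores=$2; threads=$3
    printf 'tools/modelsim/simulate.sh -k %s -c %s -t %s -m batch\n' \
        "$kernel" "$(low_bits_mask "$cores")" "$(low_bits_mask "$threads")"
}

# Kernel mmsc on 2 cores with 4 threads each.
simulate_cmd mmsc 2 4
```

With these arguments the core mask is 3 and the thread mask is 15, the same values used in the setup_project.sh example.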

Full Documentation

The NaplesPU Hardware Architecture

The NaplesPU Instruction Set Architecture

Further information on MediaWiki

The NaplesPU project documentation will be based on MediaWiki. For information and guides on using MediaWiki, please see the links below:

- User's Guide (for information on using the wiki software)

- Configuration settings list

- MediaWiki FAQ

- MediaWiki release mailing list

- Localise MediaWiki for your language

- Learn how to combat spam on your wiki