Difference between revisions of "L1 Cache Controller"


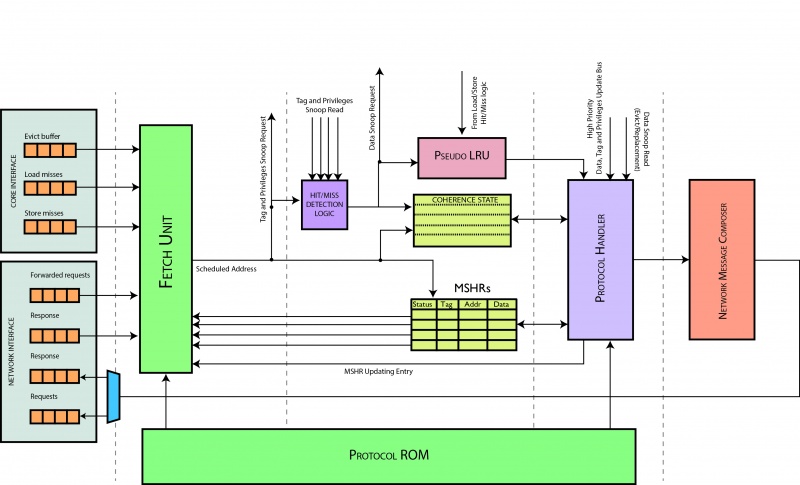

| − | + | Cache controller manages the L1 cache. In particular, it handles only coherence information (such as states) since L1 data cache is managed by load/store unit. | |

| + | |||

| + | [[File:l1_cache.jpg|800px|LDST_CC]] | ||

| − | |||

The component is composed of 4 stages: | The component is composed of 4 stages: | ||

| − | * stage 1: | + | * stage 1: schedules a pending request to issue (from local core or network); |

* stage 2: contains coherence cache and MSHR; | * stage 2: contains coherence cache and MSHR; | ||

* stage 3: processes a request properly with coherence protocol; | * stage 3: processes a request properly with coherence protocol; | ||

| − | * stage 4: prepares coherence request/response to be sent on network. | + | * stage 4: prepares coherence request/response to be sent on the network. |

| + | All these stages are represented in the figure below. The component has been realized in a ''pipelined'' fashion in order for the controller to be able to serve multiple requests at the same time. | ||

| − | + | === Assumptions === | |

| + | The design has been driven by these assumptions: | ||

| + | * cache controller schedules a request only when no other requests with on the same address are pending in the pipeline; | ||

| + | * coherence transactions are at the memory blocks level; | ||

| + | * cache controller does not schedule requests when another is in the pipeline and have the same set; | ||

| + | * only requests from the local core (load, store, replacement) allocate MSHR entries; | ||

| + | * information regarding cache block in a non-stable state are stored into the MSHR. | ||

| − | + | Two requests on the same block cannot be issued one after another; when the first request is issued, it may modify an MSHR entry after two clock cycles (stage 3), hence the second request may read a non-up-to-date entry. | |


== Stage 1 == | == Stage 1 == | ||

| − | Stage 1 is responsible for the | + | Stage 1 is responsible for the scheduling of requests into the controller. A request could be a load miss, store miss, flush and replacement request from the local core or a coherence forwarded request or response from the network interface. |

=== MSHR Signals === | === MSHR Signals === | ||

| − | + | The arbiter in the first stage checks whether a pending request can be issued: to be eligible for scheduling, no other request on the '''same block''' may be ''ongoing'' (or under elaboration) in the cache controller. Ongoing requests are stored in the MSHR table. The MSHR provides tags and sets for each type of pending request, and these are forwarded to the arbiter at Stage 1. The arbiter uses this information on ongoing transactions to select a pending request. The MSHR provides a lookup port for each type of request, together with a <code>hit</code> signal; the request is considered to have an entry in the MSHR when this signal is asserted: |

// Signals to MSHR | // Signals to MSHR | ||

| Line 42: | Line 39: | ||

=== Stall Protocol ROMs === | === Stall Protocol ROMs === | ||

| − | In order to be compliant with the coherence protocol all incoming requests | + | In order to be compliant with the coherence protocol, all incoming requests on blocks in a non-stable state might be stalled. This task is performed through a series of protocol ROMs (one for each request type) that output state when the issue of relative request ought to be stalled, e.g. when a block is in state SM_A and a ''Fwd_GetS'', ''Fwd_GetM'', ''recall'', ''flush'', ''store'' or ''replacement'' request for the same block is received. In order to assert this signal, the protocol ROM needs the type of the request and the actual state of the block. The module <code>stall_protocol_rom</code> implements this logic: |

stall_protocol_rom load_stall_protocol_rom ( | stall_protocol_rom load_stall_protocol_rom ( | ||

| Line 74: | Line 71: | ||

); | ); | ||

| − | Note that response messages | + | Note that response messages are never stalled in the coherence protocol, such requests are stalled only if a pending request with the same set index is already in the pipeline: |

| + | |||

| + | assign can_issue_response = ni_response_valid & | ||

| + | !( | ||

| + | ( cc2_pending_valid && ( ni_response.memory_address.index == cc2_pending_address.index ) ) || | ||

| + | ( cc3_pending_valid && ( ni_response.memory_address.index == cc3_pending_address.index ) ) | ||

| + | ); | ||

| − | === Request | + | === Issuing a Request === |

| − | In order to issue a generic request it is required that: | + | In order to issue a generic request, it is required that: |

| − | * MSHR has | + | * MSHR has no pending requests for the same block; |

* if the request is already in MSHR it has to be not valid; | * if the request is already in MSHR it has to be not valid; | ||

| − | * if the request is already in MSHR and valid it must not have been stalled by | + | * if the request is already in MSHR and valid it must not have been stalled by the protocol ROM (see [[L1 Cache Controller#Stall Protocol ROMs | stall signals]]). |

| − | * further stages are not serving a request | + | * further stages are not serving a request on the same address (see [[L1 Cache Controller#Assumptions | assumptions]]); |

* network interface is available; | * network interface is available; | ||

| Line 96: | Line 99: | ||

ni_request_network_available; | ni_request_network_available; | ||

| − | Response messages | + | Response messages do not need feedbacks from MSHR since they do not allocate a new entry and they are never stalled. The same goes for flush requests even though they could be stalled by the relative stall protocol ROM. |

| − | |||

Finally a ''replacement'' request could be ''pre-allocated'' in MSHR (see [[L1 Cache Controller#MSHR Update Logic | MSHR update logic]]). In order for this request to be issued before every other request on the same block, an additional condition is added: | Finally a ''replacement'' request could be ''pre-allocated'' in MSHR (see [[L1 Cache Controller#MSHR Update Logic | MSHR update logic]]). In order for this request to be issued before every other request on the same block, an additional condition is added: | ||

| Line 107: | Line 109: | ||

( replacement_mshr_hit && replacement_mshr.valid && ( !stall_replacement || replacement_mshr.waiting_for_eviction ) ) ) | ( replacement_mshr_hit && replacement_mshr.valid && ( !stall_replacement || replacement_mshr.waiting_for_eviction ) ) ) | ||

... | ... | ||

| + | |||

| + | Note the control logic does not check if the MSHR has free entries, we made the following assumption which eases this control: only a request per thread can be issued and only threads can allocate an MSHR entry, it is sufficient to size MSHR to the number of threads x 2 in order for the MSHR to be never full and make the control about his filling useless. In the worst case, the MSHR has a pending request and a pending replacement per thread. | ||

=== Requests Scheduler === | === Requests Scheduler === | ||

| Line 112: | Line 116: | ||

# flush | # flush | ||

| + | # dinv | ||

# replacement | # replacement | ||

# store miss | # store miss | ||

| Line 117: | Line 122: | ||

# coherence forwarded request | # coherence forwarded request | ||

# load miss | # load miss | ||

| + | # recycled response | ||

Once a type of request has been scheduled this block drives conveniently the output signals for the second stage. | Once a type of request has been scheduled this block drives conveniently the output signals for the second stage. | ||

| − | |||

| − | |||

| − | |||

== Stage 2 == | == Stage 2 == | ||

| Line 128: | Line 131: | ||

=== Hit/miss logic === | === Hit/miss logic === | ||

| − | |||

Lookup phase is split in two parts performed by: | Lookup phase is split in two parts performed by: | ||

| Line 135: | Line 137: | ||

# cache controller (stage 2). | # cache controller (stage 2). | ||

| − | Load/store unit performs the first lookup using only request's set; so it returns an array of tags ( | + | Load/store unit performs the first lookup using only request's set; so it returns an array of tags (one per way) whose tags have the same set of the request and their privilege bits. This first lookup is performed at the same time the request is in cache controller stage 1. The second phase of lookup is performed by cache controller stage 2 using only the request's tag; this search is performed on the array provided by load/store unit. If there is a block with the same tag and the block is valid (its validity is checked with privilege bits) then a hit occurs and the way index of that block is provided to stage 3. The way index will be used by stage 3 to perform updates to coherence data of that block. <br> |

| − | If there | + | If there is no block with the same tag as the request's and no hit occurs, stage 3 takes the way index provided by LRU unit in order to replace that block (see [[L1 Cache Controller#Replacement Logic | replacement logic]]). |

... | ... | ||

| Line 146: | Line 148: | ||

assign snoop_tag_hit = |snoop_tag_way_oh; | assign snoop_tag_hit = |snoop_tag_way_oh; | ||

| − | Note that | + | Note that whenever a request arrives in stage 2 its way index in the data cache is not known yet (since hit/miss logic is computing it at the same time), hence coherence cache is looked up only issuing on the bus the request's set. The result of the snoop operation is forwarded to stage 3, which elaborates them. Stage 3 knows which way index to use for fetching correct data because meanwhile hit/miss logic will have provided it. |

| − | The choice of splitting lookup | + | The choice of splitting lookup into two separate phases has been made in order to reduce the latency of the entire process. |

=== MSHR === | === MSHR === | ||

| − | ''Miss Status Handling Register'' is used to handle cache lines data whose coherence transactions are pending; that is the case in which a cache block is in a non-stable state. | + | ''Miss Status Handling Register'' is used to handle cache lines data whose coherence transactions are pending; that is the case in which a cache block is in a non-stable state. Bear in mind that only one request per thread can be issued, MSHR has the same entry as the number of hardware threads. |

| − | + | An MSHR entry comprises the following data: | |

{| class="wikitable" | {| class="wikitable" | ||

| Line 170: | Line 172: | ||

* Valid: entry has valid data | * Valid: entry has valid data | ||

* Address: entry memory address | * Address: entry memory address | ||

| − | * Thread ID: requesting | + | * Thread ID: requesting HW thread id |

| − | * Wakeup Thread: wakeup thread when transaction is over | + | * Wakeup Thread: wakeup thread when the transaction is over |

* State: actual coherence state | * State: actual coherence state | ||

* Waiting for eviction: asserted for replacement requests | * Waiting for eviction: asserted for replacement requests | ||

| Line 177: | Line 179: | ||

* Data: data associated to request | * Data: data associated to request | ||

| − | Note that entry's ''Data'' | + | Note that entry's ''Data'' are stored in a separate SRAM memory in order to ease the lookup process. |

==== Implementation details ==== | ==== Implementation details ==== | ||

| − | + | Since MSHR has to provide a lookup service to stage 1 (see [[L1 Cache Controller#MSHR Signals | lookup signals]]) and update entries coming from stage 3 (see [[L1 Cache Controller#MSHR Update Logic | update signals]]) at the '''same time''', dedicated read and a write ports have been implemented for this purpose. <br> | |

===== Write port ===== | ===== Write port ===== | ||

A write policy is defined in order to define an order between writes and reads. This policy is can be set through a boolean parameter named WRITE_FIRST. <br> | A write policy is defined in order to define an order between writes and reads. This policy is can be set through a boolean parameter named WRITE_FIRST. <br> | ||

| − | In particular this module is instantiated with policy WRITE_FIRST set to false, this means MSHR will serve read operations ''before'' write operations; | + | In particular, this module is instantiated with policy WRITE_FIRST set to false, this means MSHR will serve read operations ''before'' write operations; write operations are '''delayed''' of one clock cycle after they have been issued from stage 3 (because a register delays the update). Here is the code regarding write port: |

// This logic is generated for each MSHR entry | // This logic is generated for each MSHR entry | ||

| Line 247: | Line 249: | ||

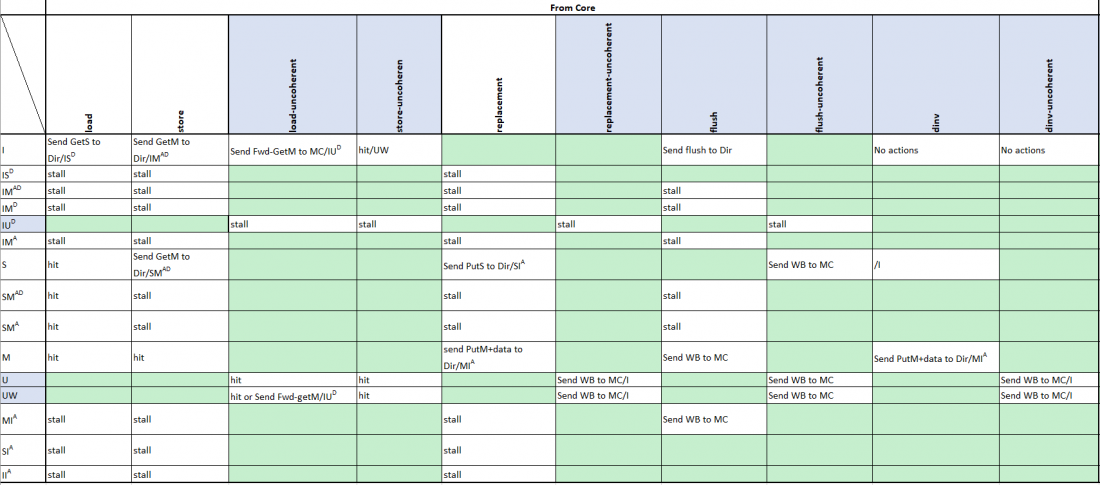

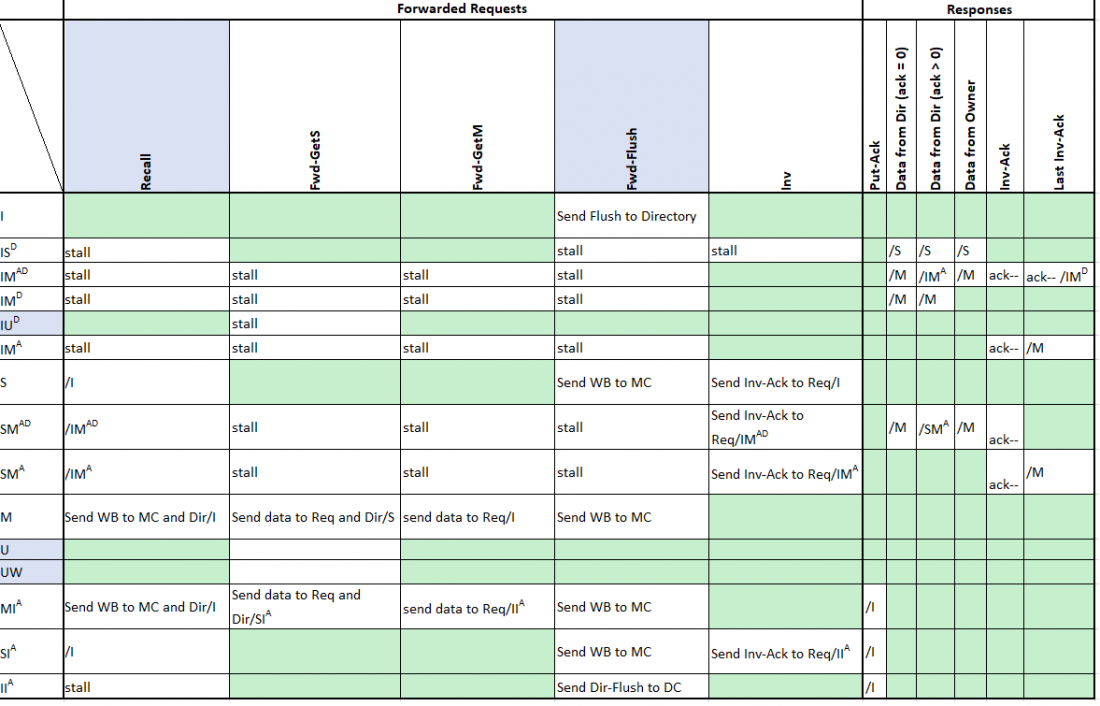

=== Protocol ROM === | === Protocol ROM === | ||

| − | This module implements the coherence protocol as represented in figure below. The choice to implement the protocol as a separate ROM has been made to ease further optimizations or changes to the protocol. It takes in input the current state and the request type and computes next actions. | + | This module implements the coherence protocol as represented in the figure below. The choice to implement the protocol as a separate ROM has been made to ease further optimizations or changes to the protocol. It takes in input the current state and the request type and computes the next actions. |

| − | [[File: | + | [[File:MSI_Protocol_cc-rom_p1_new.png|1100px|MSI_CC]] |

| − | + | [[File:MSI_Protocol_cc-rom_p2_new.png|1100px|MSI_CC]] | |

| − | Furthermore another type of request, ''flush'' | + | The coherence protocol used is MSI plus some changes due to the directory's inclusivity. In particular, a new type of forwarded request has been added, ''recall'', that is sent by directory controller when a block has to be evicted from L2 cache. A ''writeback'' response to the memory controller follows in response to a ''recall'' only when the block is in state '''M'''. Note that a ''writeback'' response is sent to the directory controller as well in order to provide a sort of acknowledgement. |

| + | |||

| + | Furthermore, another type of request, called ''flush'', has been added that simply sends updated data from the requestor L1 to the main memory. It also generates a ''writeback'' response, although it is directed only to the memory controller and does not affect the block's coherence state. Flushes are often used by applications to send their output back to the main memory after a computation. | ||

| + | |||

| + | The above table refers to a baseline protocol which explains the main logic behind the Protocol ROM. Further optimizations, such as the uncoherent states, are deeply described in detail in [[MSI Protocol]]. | ||

=== Replacement Logic === | === Replacement Logic === | ||

| − | A cache block replacement | + | A cache block replacement might occur whenever a new block has to be stored into the L1 and all the sets are busy. In case of available sets, the control logic will select them avoiding replacement. Hence, an eviction occurs only when the selected block has valid information. Block validity is assured by privilege bits associated with it. These privilege bits (one for each way) come from Stage 2 that in turn has received them from load/store unit. The pseudo-LRU module, in Stage 2, selects the block to replace pointing least used way. |

replaced_way_valid = cc2_request_snoop_privileges[cc2_request_lru_way_idx].can_read | cc2_request_snoop_privileges[cc2_request_lru_way_idx].can_write; | replaced_way_valid = cc2_request_snoop_privileges[cc2_request_lru_way_idx].can_read | cc2_request_snoop_privileges[cc2_request_lru_way_idx].can_write; | ||

| − | + | The address of the evicting block has to be reconstructed. In particular, its tag is provided by tag cache from load/store unit (through Stage 2) while the index is provided by the requesting address which will take its place in the cache (since the two addresses have the same set). In case of a dirty block, the data has to be fetched and send back to the main memory, stored into the data cache in Stage 2. The address offset is kept low since the eviction operation involves the entire block. | |

replaced_way_address.tag = cc2_request_snoop_tag[cc2_request_lru_way_idx]; | replaced_way_address.tag = cc2_request_snoop_tag[cc2_request_lru_way_idx]; | ||

| Line 268: | Line 274: | ||

replaced_way_state = cc2_request_coherence_states[cc2_request_lru_way_idx]; | replaced_way_state = cc2_request_coherence_states[cc2_request_lru_way_idx]; | ||

| − | + | Recapping, a replacement request is issued if: | |

| − | * protocol ROM requested for a cache update; | + | * protocol ROM requested for a cache update due to a new incoming data; |

| − | * the block requested is not present in L1 cache (so the update request must be a block allocation); | + | * the block requested is not present in the L1 cache (so the update request must be a block allocation); |

* replaced block is valid. | * replaced block is valid. | ||

| Line 283: | Line 289: | ||

* entry update. | * entry update. | ||

| − | MSHR is used to store | + | MSHR is used to store information on pending transactions. Whenever a cache line is in the MSHR it has a non-stable state, and the state stored in the MSHR is considered the most up-to-date. So a new entry is allocated every time the cache line state turns into a non-stable state. Conversely, an entry is deallocated when the state of a cache line that was pending in the MSHR turns into a stable state, which means that the ongoing transaction is over. Finally, an update is made when the information stored in the MSHR has to change while the cache line state is still non-stable. For example, if the pending transaction is waiting for acknowledgements from all the sharers, each arriving ack message increases the number of acks received (and this information is updated in the MSHR), but the transaction remains ongoing until all ack messages have arrived. Each condition is represented by a signal that is properly asserted by protocol ROM. |

cc3_update_mshr_en = ( pr_output.allocate_mshr_entry || pr_output.update_mshr_entry || pr_output.deallocate_mshr_entry ); | cc3_update_mshr_en = ( pr_output.allocate_mshr_entry || pr_output.update_mshr_entry || pr_output.deallocate_mshr_entry ); | ||

| − | + | Whenever the control signal <code>do_replacement</code> is asserted an MSHR entry is ''pre-allocated''. This is necessary otherwise data computed by [[L1 Cache Controller#Replacement Logic|Replacement Logic]] could be lost. The Stage 1 checks if an entry is pre-allocated during the scheduling by reading the <code>waiting_for_eviction</code> bit, see [[L1 Cache Controller#Request Issue Signals | Request Issue Signals]]. | |

| − | Note that | + | Note that when a request issued from Stage 1 allocates a new entry, the index of an empty entry is provided directly by the MSHR (through Stage 2). Remember that, due to our previous assumptions, an empty MSHR entry is guaranteed to exist; otherwise the request would not have been issued (see [[L1 Cache Controller#Request Issue Signals | Request Issue Signals]]). If the operation is an update or a deallocation, the index is obtained from Stage 1, which queries the MSHR for the index of the entry associated with the current request (see [[L1 Cache Controller#MSHR Signals|MSHR Signals]]). |

cc3_update_mshr_index = cc2_request_mshr_hit ? cc2_request_mshr_index : cc2_request_mshr_empty_index; | cc3_update_mshr_index = cc2_request_mshr_hit ? cc2_request_mshr_index : cc2_request_mshr_empty_index; | ||

=== Cache Update Logic === | === Cache Update Logic === | ||

| − | Both data cache and coherence cache could be updated after a coherence transaction has been computed. Data cache | + | Both data cache and coherence cache could be updated after a coherence transaction has been computed. Data cache is updated according to the occurrence of a replacement, in that case, command <code>CC_REPLACEMENT</code> is issued to load/store unit; this command ensures load/store unit will prepare the block for eviction. Otherwise, an update to cache block has to be made; if the update involves only privileges then <code>CC_UPDATE_INFO</code> command is issued otherwise command <code>CC_UPDATE_INFO_DATA</code> is issued when both the new block and its privileges are updated into the L1 cache. |

// Data cache signals | // Data cache signals | ||

| Line 306: | Line 312: | ||

assign cc3_update_coherence_state_entry = pr_output.next_state; | assign cc3_update_coherence_state_entry = pr_output.next_state; | ||

| − | + | Data cache is updated whenever updating privileges for a block in the L1 is necessary, or whenever a new block is received and has to be stored in the cache along with its privileges. | |

| − | |||

| − | Data cache is updated | ||

... | ... | ||

| Line 314: | Line 318: | ||

... | ... | ||

| − | + | The code above describes the condition of updating a line in the L1 cache. The cache is updated whenever block state became stable (its transaction is over and it has been deallocated from the MSHR) and there is a cache hit (it is already stored in the cache). Otherwise, whenever the coherence protocol requires the update of the cache, this is signalled through the <code>pr_output.write_data_on_cache</code> bit, output of the protocol ROM. | |

... | ... | ||

| Line 320: | Line 324: | ||

... | ... | ||

| − | Furthermore if the request is a forwarded coherence request then L1 cache data are forwarded to the message generator in stage 4 in order to be sent to the requestor. | + | Furthermore, if the request is a forwarded coherence request then L1 cache data are forwarded to the message generator in stage 4 in order to be sent to the requestor. |

assign cc3_snoop_data_valid = cc2_request_valid && pr_output.send_data_from_cache; | assign cc3_snoop_data_valid = cc2_request_valid && pr_output.send_data_from_cache; | ||

| Line 327: | Line 331: | ||

== Stage 4 == | == Stage 4 == | ||

| − | This stage generates a correct request/response message for the network interface whenever | + | This stage generates a correct request/response message for the network interface whenever a message is issued from the third stage. |

== See Also == | == See Also == | ||

[[Coherence]] | [[Coherence]] | ||

Latest revision as of 15:21, 1 July 2019

The cache controller manages the L1 cache. In particular, it handles only coherence information (such as block states), since the L1 data cache is managed by the load/store unit.

The component is composed of 4 stages:

- stage 1: schedules a pending request to issue (from local core or network);

- stage 2: contains coherence cache and MSHR;

- stage 3: processes a request properly with coherence protocol;

- stage 4: prepares coherence request/response to be sent on the network.

All these stages are represented in the figure below. The component is pipelined so that the controller can serve multiple requests at the same time.

Assumptions

The design has been driven by these assumptions:

- the cache controller schedules a request only when no other request on the same address is pending in the pipeline;

- coherence transactions are at the memory blocks level;

- the cache controller does not schedule a request when another request with the same set is in the pipeline;

- only requests from the local core (load, store, replacement) allocate MSHR entries;

- information regarding cache blocks in a non-stable state is stored in the MSHR.

Two requests on the same block cannot be issued back to back: the first request may modify an MSHR entry two clock cycles after it is issued (in stage 3), so a second request issued in the meantime could read a stale entry.

Stage 1

Stage 1 is responsible for scheduling requests into the controller. A request can be a load miss, store miss, flush, or replacement request from the local core, or a forwarded coherence request or response from the network interface.

MSHR Signals

The arbiter in the first stage checks whether a pending request can be issued: to be eligible for scheduling, no other request on the same block may be ongoing (or under elaboration) in the cache controller. Ongoing requests are stored in the MSHR table. The MSHR provides tags and sets for each type of pending request, and these are forwarded to the arbiter at Stage 1. The arbiter uses this information on ongoing transactions to select a pending request. The MSHR provides a lookup port for each type of request, together with a hit signal; the request is considered to have an entry in the MSHR when this signal is asserted:

// Signals to MSHR

assign cc1_mshr_lookup_tag[MSHR_LOOKUP_PORT_LOAD ] = ci_load_request_address.tag;

assign cc1_mshr_lookup_set[MSHR_LOOKUP_PORT_LOAD ] = ci_load_request_address.index;

// Signals from MSHR

assign load_mshr_hit = cc2_mshr_lookup_hit[MSHR_LOOKUP_PORT_LOAD ];

assign load_mshr_index = cc2_mshr_lookup_index[MSHR_LOOKUP_PORT_LOAD ];

assign load_mshr = cc2_mshr_lookup_entry_info[MSHR_LOOKUP_PORT_LOAD ];

Stall Protocol ROMs

To comply with the coherence protocol, incoming requests on blocks in a non-stable state may have to be stalled. This is done by a series of protocol ROMs (one per request type), each of which asserts a stall signal when the corresponding request must not be issued, e.g. when a block is in state SM_A and a Fwd_GetS, Fwd_GetM, recall, flush, store or replacement request for the same block is received. To drive this signal, the protocol ROM needs the request type and the current state of the block. The module stall_protocol_rom implements this logic:

stall_protocol_rom load_stall_protocol_rom (

.current_request ( load ),

.current_state ( load_mshr.state ),

.pr_output_stall ( stall_load )

);

stall_protocol_rom store_stall_protocol_rom (

.current_request ( store ),

.current_state ( store_mshr.state ),

.pr_output_stall ( stall_store )

);

stall_protocol_rom flush_stall_protocol_rom (

.current_request ( flush ),

.current_state ( flush_mshr.state ),

.pr_output_stall ( stall_flush )

);

stall_protocol_rom replacement_stall_protocol_rom (

.current_request ( replacement ),

.current_state ( replacement_mshr.state ),

.pr_output_stall ( stall_replacement )

);

stall_protocol_rom forwarded_stall_protocol_rom (

.current_request ( fwd_2_creq( ni_forwarded_request.packet_type ) ),

.current_state ( forwarded_request_mshr.state ),

.pr_output_stall ( stall_forwarded_request )

);
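The body of stall_protocol_rom is not shown on this page. As a rough sketch of what such a ROM could look like, the module below encodes only the SM_A example given above. The package, the enum types and their encodings, and the `_sketch` names are assumptions made for illustration; the real project defines its own request and state types and covers every non-stable state.

```systemverilog
// Hypothetical types: the real design has its own request/state encodings.
package stall_rom_sketch_pkg;
    typedef enum logic [2:0] {
        REQ_LOAD, REQ_STORE, REQ_REPLACEMENT, REQ_FLUSH,
        REQ_FWD_GETS, REQ_FWD_GETM, REQ_RECALL
    } request_t;

    typedef enum logic [1:0] {
        ST_I, ST_S, ST_M, ST_SM_A
    } state_t;
endpackage

module stall_protocol_rom_sketch
    import stall_rom_sketch_pkg::*;
(
    input  request_t current_request,
    input  state_t   current_state,
    output logic     pr_output_stall
);
    // Purely combinational lookup: (state, request) -> stall.
    always_comb begin
        pr_output_stall = 1'b0; // default: the request may be issued
        case (current_state)
            // Only the SM_A row from the text is modelled here.
            ST_SM_A: begin
                case (current_request)
                    REQ_FWD_GETS, REQ_FWD_GETM, REQ_RECALL,
                    REQ_FLUSH, REQ_STORE, REQ_REPLACEMENT:
                        pr_output_stall = 1'b1;
                    default:
                        pr_output_stall = 1'b0;
                endcase
            end
            default: pr_output_stall = 1'b0;
        endcase
    end
endmodule
```

Keeping the table in a separate combinational module means a change to the stall rules touches only this lookup, not the arbiter that consumes the stall signals.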

Note that response messages are never stalled by the coherence protocol; they are held back only if a pending request with the same set index is already in the pipeline:

assign can_issue_response = ni_response_valid &

!(

( cc2_pending_valid && ( ni_response.memory_address.index == cc2_pending_address.index ) ) ||

( cc3_pending_valid && ( ni_response.memory_address.index == cc3_pending_address.index ) )

);

Issuing a Request

A generic request can be issued when all of the following hold:

- one of these MSHR conditions is met: the MSHR has no entry for the same block; or the matching entry is not valid; or the matching entry is valid, has the same tag, and the request is not stalled by the protocol ROM (see stall signals);

- later stages are not serving a request with the same set index (see assumptions);

- the network interface is available.

assign can_issue_load = ci_load_request_valid &&

( !load_mshr_hit ||

( load_mshr_hit && !load_mshr.valid) ||

( load_mshr_hit && load_mshr.valid && load_mshr.address.tag == ci_load_request_address.tag && !stall_load ) ) &&

! (( cc2_pending_valid && ( ci_load_request_address.index == cc2_pending_address.index ) ) ||

( cc3_pending_valid && ( ci_load_request_address.index == cc3_pending_address.index ) )) &&

ni_request_network_available;

Response messages do not need feedback from the MSHR, since they do not allocate a new entry and are never stalled. The same goes for flush requests, even though they could be stalled by the corresponding stall protocol ROM.

Finally, a replacement request may be pre-allocated in the MSHR (see MSHR update logic). So that this request is issued before every other request on the same block, an additional condition is added:

assign can_issue_replacement =

...

( !replacement_mshr_hit ||

( replacement_mshr_hit && !replacement_mshr.valid) ||

( replacement_mshr_hit && replacement_mshr.valid && ( !stall_replacement || replacement_mshr.waiting_for_eviction ) ) )

...

Note that the control logic does not check whether the MSHR has free entries. This check is made unnecessary by the following assumption: only one request per thread can be issued at a time, and only threads can allocate an MSHR entry. Sizing the MSHR to twice the number of threads is therefore sufficient for it never to be full: in the worst case, the MSHR holds one pending request and one pending replacement per thread.
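The sizing rule above amounts to a one-line computation. The sketch below assumes a hypothetical thread count of 8 and hypothetical parameter names; the code on this page refers to the size through a `MSHR_SIZE` macro, whose actual definition is not shown here.

```systemverilog
// Illustrative only: names and the thread count are assumptions.
package mshr_size_sketch_pkg;
    // Assumed number of hardware threads.
    localparam int THREAD_NUMB = 8;

    // Worst case: one pending request plus one pending replacement per thread.
    localparam int MSHR_ENTRIES = 2 * THREAD_NUMB;

    // Bits needed to index an entry.
    localparam int MSHR_INDEX_WIDTH = $clog2(MSHR_ENTRIES);
endpackage
```

With 8 threads this gives 16 entries and a 4-bit entry index.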

Requests Scheduler

Once the issue conditions have been checked, two or more requests could be ready at the same time, so a scheduler is needed. Each request type has a fixed priority, in the order listed below:

- flush

- dinv

- replacement

- store miss

- coherence response

- coherence forwarded request

- load miss

- recycled response

Once a type of request has been scheduled, this block drives the output signals for the second stage accordingly.
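A fixed-priority choice like the one listed above can be sketched as an if/else chain. The sketch assumes that the list is ordered from highest to lowest priority. Only `can_issue_load`, `can_issue_response` and `can_issue_replacement` appear in the code on this page; the other input names, the module name, the outputs, and the type encoding are hypothetical.

```systemverilog
module request_scheduler_sketch (
    input  logic       can_issue_flush,
    input  logic       can_issue_dinv,
    input  logic       can_issue_replacement,
    input  logic       can_issue_store,
    input  logic       can_issue_response,
    input  logic       can_issue_forwarded_request,
    input  logic       can_issue_load,
    input  logic       can_issue_recycled_response,
    output logic       scheduled_valid, // some request was selected
    output logic [2:0] scheduled_type   // hypothetical encoding, see below
);
    // Hypothetical encoding: position in the priority list.
    localparam logic [2:0] SCHED_FLUSH       = 3'd0;
    localparam logic [2:0] SCHED_DINV        = 3'd1;
    localparam logic [2:0] SCHED_REPLACEMENT = 3'd2;
    localparam logic [2:0] SCHED_STORE       = 3'd3;
    localparam logic [2:0] SCHED_RESPONSE    = 3'd4;
    localparam logic [2:0] SCHED_FORWARDED   = 3'd5;
    localparam logic [2:0] SCHED_LOAD        = 3'd6;
    localparam logic [2:0] SCHED_RECYCLED    = 3'd7;

    always_comb begin
        scheduled_valid = 1'b1;
        scheduled_type  = SCHED_FLUSH;
        // The first ready request in list order wins.
        if      (can_issue_flush)             scheduled_type = SCHED_FLUSH;
        else if (can_issue_dinv)              scheduled_type = SCHED_DINV;
        else if (can_issue_replacement)       scheduled_type = SCHED_REPLACEMENT;
        else if (can_issue_store)             scheduled_type = SCHED_STORE;
        else if (can_issue_response)          scheduled_type = SCHED_RESPONSE;
        else if (can_issue_forwarded_request) scheduled_type = SCHED_FORWARDED;
        else if (can_issue_load)              scheduled_type = SCHED_LOAD;
        else if (can_issue_recycled_response) scheduled_type = SCHED_RECYCLED;
        else                                  scheduled_valid = 1'b0;
    end
endmodule
```

Stage 1 would then use the selected type to choose which request's address and payload to drive towards stage 2.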

Stage 2

Stage 2 is responsible for managing the L1 cache and the MSHR, and for forwarding signals from Stage 1 to Stage 3. It contains the L1 coherence cache (the L1 data cache is in the load/store unit) and all the related logic for managing cache hits and block replacement. The policy used to replace a block is LRU (Least Recently Used).

This module receives signals from stage 3 to update MSHR and coherence cache properly once a request is processed and from load/store unit to update LRU every time a block is accessed from the core.

Hit/miss logic

Lookup phase is split in two parts performed by:

- load/store unit;

- cache controller (stage 2).

The load/store unit performs the first lookup using only the request's set: it returns an array with the tag of every way in that set (one per way), together with their privilege bits. This first lookup is performed while the request is in cache controller stage 1. The second lookup is performed by cache controller stage 2 using only the request's tag, searching the array provided by the load/store unit. If a block has the same tag and is valid (validity is checked through its privilege bits), a hit occurs and the way index of that block is provided to stage 3, which uses it to update the coherence data of that block.

If there is no block with the same tag as the request's and no hit occurs, stage 3 takes the way index provided by LRU unit in order to replace that block (see replacement logic).

...

// Second phase lookup

// Result of this lookup is an array one-hot codified

assign snoop_tag_way_oh[dcache_way] = ( ldst_snoop_tag[dcache_way] == cc1_request_address.tag ) &

( ldst_snoop_privileges[dcache_way].can_read | ldst_snoop_privileges[dcache_way].can_write );

...

assign snoop_tag_hit = |snoop_tag_way_oh;
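The elided parts of the snippet above presumably turn the one-hot result into the way index handed to stage 3. One possible way to do that conversion is sketched below; the module name, the parameters and the `snoop_tag_way_idx` output are assumptions, not the project's actual code.

```systemverilog
module oh_to_idx_sketch #(
    parameter int NUM_WAYS  = 4, // assumed associativity
    parameter int IDX_WIDTH = (NUM_WAYS > 1) ? $clog2(NUM_WAYS) : 1
) (
    input  logic [NUM_WAYS-1:0]  snoop_tag_way_oh,
    output logic                 snoop_tag_hit,
    output logic [IDX_WIDTH-1:0] snoop_tag_way_idx
);
    // With a one-hot input at most one iteration matches,
    // so the index of the single set bit is returned.
    always_comb begin
        snoop_tag_way_idx = '0;
        for (int way = 0; way < NUM_WAYS; way++) begin
            if (snoop_tag_way_oh[way])
                snoop_tag_way_idx = IDX_WIDTH'(way);
        end
    end

    // A hit occurs when any way matched.
    assign snoop_tag_hit = |snoop_tag_way_oh;
endmodule
```

When no way matches, the index output is zero and must be ignored: `snoop_tag_hit` tells the consumer whether it is meaningful.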

Note that when a request arrives in stage 2, its way index in the data cache is not known yet (the hit/miss logic is computing it at the same time), hence the coherence cache is looked up using only the request's set. The result of this snoop operation is forwarded to stage 3, which processes it. By then the hit/miss logic has provided the way index, so stage 3 knows which way to use for fetching the correct data.

The choice of splitting lookup into two separate phases has been made in order to reduce the latency of the entire process.
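The second lookup phase can also be read as a small behavioral model. The following Python sketch is only an illustration, not the RTL: the names `Privileges` and `second_phase_lookup` are hypothetical, and the inputs stand for the per-way tags and privilege bits returned by the load/store unit.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Privileges:
    """Per-way privilege bits (hypothetical software mirror of the RTL struct)."""
    can_read: bool = False
    can_write: bool = False


def second_phase_lookup(
    request_tag: int,
    snoop_tags: List[int],
    snoop_privileges: List[Privileges],
) -> Tuple[List[bool], bool, Optional[int]]:
    """Compare the request tag against the tags of one set.

    snoop_tags and snoop_privileges hold one element per way and are the
    result of the first-phase lookup performed on the request's set.
    Returns the one-hot way vector, the hit flag and the hitting way index.
    """
    way_oh = [
        tag == request_tag and (priv.can_read or priv.can_write)
        for tag, priv in zip(snoop_tags, snoop_privileges)
    ]
    hit = any(way_oh)
    way_idx = way_oh.index(True) if hit else None
    return way_oh, hit, way_idx
```

A way whose tag matches but whose privilege bits are both cleared does not produce a hit, which mirrors the validity check in the one-hot expression above.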

MSHR

The Miss Status Handling Register (MSHR) holds the data of cache lines whose coherence transactions are pending, that is, cache blocks in a non-stable state. Since each thread can have only one outstanding request, the MSHR has as many entries as there are hardware threads.

An MSHR entry comprises the following data:

| Valid | Address | Thread ID | Wakeup Thread | State | Waiting For Eviction | Ack Count | Data |
|---|---|---|---|---|---|---|---|

- Valid: entry has valid data

- Address: entry memory address

- Thread ID: requesting HW thread id

- Wakeup Thread: wake up the thread when the transaction is over

- State: current coherence state

- Waiting For Eviction: asserted for replacement requests

- Ack Count: remaining acks to receive

- Data: data associated with the request

Note that the Data field of each entry is stored in a separate SRAM in order to ease the lookup process.
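As a rough software picture of the entry layout listed above, the following Python sketch models the MSHR as one entry per hardware thread with the block data kept in a separate array. The class names, the string encoding of the state and the 64-byte block size are assumptions made for the example only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MSHREntry:
    """Control fields of one MSHR entry (Data is stored separately)."""
    valid: bool = False
    address: int = 0
    thread_id: int = 0
    wakeup_thread: bool = False
    state: str = "I"  # hypothetical encoding of the coherence state
    waiting_for_eviction: bool = False
    ack_count: int = 0


class MSHR:
    def __init__(self, num_threads: int, block_bytes: int = 64):
        # One entry per hardware thread; block_bytes is an assumed size.
        self.entries: List[MSHREntry] = [MSHREntry() for _ in range(num_threads)]
        # Separate storage for the Data field, mirroring the separate SRAM.
        self.data: List[bytes] = [bytes(block_bytes) for _ in range(num_threads)]

    def empty_index(self) -> Optional[int]:
        """Return the index of the first invalid entry, or None if full."""
        for i, entry in enumerate(self.entries):
            if not entry.valid:
                return i
        return None
```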

Implementation details

Since the MSHR has to serve lookups from stage 1 (see lookup signals) and apply updates coming from stage 3 (see update signals) at the same time, dedicated read and write ports have been implemented.

Write port

A write policy defines the order between writes and reads. This policy can be set through a boolean parameter named WRITE_FIRST.

In particular, this module is instantiated with WRITE_FIRST set to false: the MSHR serves read operations before write operations, and a write issued by stage 3 becomes visible one clock cycle later (because a register delays the update). Here is the code for the write port:

// This logic is generated for each MSHR entry

generate

genvar mshr_id;

for ( mshr_id = 0; mshr_id < `MSHR_SIZE; mshr_id++ ) begin : mshr_entries

...

// Write policy

if (WRITE_FIRST == "TRUE")

// If true writes are serviced immediately

assign data_updated[mshr_id] = (enable && update_this_index) ? update_entry : data[mshr_id];

else

assign data_updated[mshr_id] = data[mshr_id];

...

// Data entries (set of registers)

always_ff @(posedge clk, posedge reset) begin

if (reset)

data[mshr_id] <= 0;

else if (enable && update_this_index)

data[mshr_id] <= update_entry;

end

end

endgenerate

Read port

The read port implements a simple hit/miss logic for requests coming from stage 1 (see lookup signals). The write policy affects which data this logic reads: if WRITE_FIRST is set to true, the lookup is made on the data just updated by the write logic; otherwise the lookup is made on the data before the update, which is the case when reads have priority over writes (WRITE_FIRST is false). Here is the code for the lookup logic:

// This logic is generated for each MSHR entry

...

generate

for ( i = 0; i < `MSHR_SIZE; i++ ) begin : lookup_logic

// data_updated[] data are set according to write policy

assign hit_map[i] = ( data_updated[i].address.index == index ) && data_updated[i].valid;

end

endgenerate

...

assign hit = |hit_map;
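The interaction between the write policy and the lookup can be illustrated with a cycle-level Python sketch. It is a simplified model under stated assumptions: each entry is reduced to the block index it holds (`None` for an invalid entry), and the class name `MSHRPorts` and its `cycle` method are hypothetical.

```python
from typing import List, Optional, Tuple


class MSHRPorts:
    """Simplified model of the MSHR read and write ports."""

    def __init__(self, size: int, write_first: bool = False):
        self.write_first = write_first
        # Registered entries: None models valid == 0.
        self.data: List[Optional[int]] = [None] * size

    def cycle(self, lookup_index: int,
              update: Optional[Tuple[int, Optional[int]]] = None) -> bool:
        """Simulate one clock cycle and return the lookup hit flag.

        update is an optional (entry_id, new_value) pair coming from stage 3.
        """
        # Combinational view seen by the read port (data_updated in the RTL).
        visible = list(self.data)
        if self.write_first and update is not None:
            entry_id, value = update
            visible[entry_id] = value
        hit = any(v is not None and v == lookup_index for v in visible)
        # The registers capture the update at the clock edge.
        if update is not None:
            entry_id, value = update
            self.data[entry_id] = value
        return hit
```

With `write_first=False`, a lookup issued in the same cycle as an update misses and only the lookup of the following cycle sees the new entry; with `write_first=True` the same-cycle lookup already hits.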

Stage 3

Stage 3 is responsible for the actual execution of requests. Once a request is processed, this stage issues signals to the units in the previous stages in order to update their data properly.

In particular, this stage drives the datapath to perform one of these functions:

- block replacement evaluation;

- MSHR update;

- cache memory (both data and coherence info) update;

- preparing outgoing coherence messages.

Current State Selector

Before a request is processed by the coherence protocol, the correct source of the cache block state has to be chosen. The state can be retrieved from:

- MSHR;

- coherence data cache.

If the block is found in neither of them, it must be in state I because it has never been read or modified.
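The selection can be sketched as a simple priority chain. The Python function below is illustrative only: its name and arguments are hypothetical, and checking the MSHR before the coherence cache is an assumption based on the statement, in the MSHR Update Logic section, that the state stored in the MSHR is considered the most up-to-date.

```python
def select_current_state(mshr_hit: bool, mshr_state: str,
                         cache_hit: bool, cache_state: str) -> str:
    """Pick the source of the current coherence state of a block."""
    if mshr_hit:
        # A pending transaction exists: the MSHR holds the non-stable state.
        return mshr_state
    if cache_hit:
        # The block is in the L1: use the state in the coherence cache.
        return cache_state
    # Never read or modified: the block is invalid.
    return "I"
```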

Protocol ROM

This module implements the coherence protocol as represented in the figure below. The protocol is implemented as a separate ROM in order to ease further optimizations or changes to the protocol. It takes as input the current state and the request type and computes the next actions.

The coherence protocol used is MSI plus some changes due to the directory's inclusivity. In particular, a new type of forwarded request, recall, has been added; it is sent by the directory controller when a block has to be evicted from the L2 cache. A writeback response to the memory controller follows a recall only when the block is in state M. Note that a writeback response is sent to the directory controller as well, as a form of acknowledgement.

Furthermore, another type of request, called flush, has been added; it simply sends updated data from the requestor L1 to the main memory. It also generates a writeback response, although it is directed only to the memory controller and does not affect the coherence state of the block. Flushes are often used by applications to send the output back to the main memory after the computation.

The above table refers to a baseline protocol which explains the main logic behind the Protocol ROM. Further optimizations, such as the non-coherent states, are described in detail in MSI Protocol.
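The idea of a ROM indexed by current state and request type can be sketched as a lookup table. The Python fragment below covers only the two additions described in this section (recall and flush on a block in state M) and is not the actual protocol table. The names `Actions`, `PROTOCOL_ROM` and `protocol_rom` are hypothetical, and the transition to state I after a recall is an assumption, since the text only states which writeback responses are generated.

```python
from typing import NamedTuple, Optional


class Actions(NamedTuple):
    """Subset of the ROM outputs used by this sketch."""
    next_state: str
    writeback_to_memory: bool
    writeback_to_directory: bool


# Partial table: (current state, request type) -> actions.
PROTOCOL_ROM = {
    # Recall on a modified block: data goes to the memory controller and the
    # directory controller receives a writeback as acknowledgement.
    # next_state="I" is an assumption of this sketch.
    ("M", "recall"): Actions("I", True, True),
    # Flush: data goes to the memory controller only, state is unchanged.
    ("M", "flush"): Actions("M", True, False),
}


def protocol_rom(state: str, request: str) -> Optional[Actions]:
    """Return the actions for a (state, request) pair, or None if the pair
    is not covered by this partial table."""
    return PROTOCOL_ROM.get((state, request))
```

Keeping the table separate from the logic that consumes its outputs reflects the stated motivation for a ROM: changing the protocol means editing table entries rather than the surrounding datapath.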

Replacement Logic

A cache block replacement might occur whenever a new block has to be stored into the L1 and all the ways of the target set are busy. If a way is available, the control logic selects it and no replacement is needed. Hence, an eviction occurs only when the selected block holds valid information. Block validity is given by the privilege bits associated with it. These privilege bits (one for each way) come from Stage 2, which in turn has received them from the load/store unit. The pseudo-LRU module, in Stage 2, selects the block to replace by pointing to the least recently used way.

replaced_way_valid = cc2_request_snoop_privileges[cc2_request_lru_way_idx].can_read | cc2_request_snoop_privileges[cc2_request_lru_way_idx].can_write;

The address of the evicted block has to be reconstructed. In particular, its tag is provided by the tag cache of the load/store unit (through Stage 2), while the index is taken from the requesting address, which will take its place in the cache (the two addresses belong to the same set). In case of a dirty block, the data, stored in the data cache, has to be fetched and sent back to the main memory. The address offset is set to zero since the eviction involves the entire block.

replaced_way_address.tag = cc2_request_snoop_tag[cc2_request_lru_way_idx];

replaced_way_address.index = cc2_request_address.index;

replaced_way_address.offset = {`DCACHE_OFFSET_LENGTH{1'b0}};

replaced_way_state = cc2_request_coherence_states[cc2_request_lru_way_idx];

Recapping, a replacement request is issued if:

- the protocol ROM requested a cache update due to new incoming data;

- the block requested is not present in the L1 cache (so the update request must be a block allocation);

- replaced block is valid.

do_replacement = pr_output.write_data_on_cache && !cc2_request_snoop_hit && replaced_way_valid;
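The three conditions and the address reconstruction can be put together in a short behavioral sketch. The Python code below is an illustration under assumptions: `Privileges`, `Address` and `evaluate_replacement` are hypothetical names, and the inputs stand for the per-way values forwarded by Stage 2.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Privileges:
    can_read: bool = False
    can_write: bool = False


@dataclass
class Address:
    tag: int
    index: int
    offset: int


def evaluate_replacement(write_data_on_cache: bool, snoop_hit: bool,
                         lru_way: int, snoop_tags: List[int],
                         snoop_privileges: List[Privileges],
                         request: Address) -> Tuple[bool, Address]:
    """Return (do_replacement, victim address) for the way chosen by the LRU."""
    victim_priv = snoop_privileges[lru_way]
    replaced_way_valid = victim_priv.can_read or victim_priv.can_write
    do_replacement = write_data_on_cache and not snoop_hit and replaced_way_valid
    # The victim shares the set of the request, so only the tag differs;
    # the offset is zero because the whole block is evicted.
    victim = Address(tag=snoop_tags[lru_way], index=request.index, offset=0)
    return do_replacement, victim
```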

MSHR Update Logic

MSHR could be updated in three different ways:

- entry allocation;

- entry deallocation;

- entry update.

The MSHR stores information on pending transactions. Whenever a cache line is in the MSHR it is in a non-stable state, and the state stored in the MSHR is considered the most up-to-date. Hence a new entry is allocated every time the state of a cache line turns non-stable. Conversely, an entry is deallocated when the state of a cache line that was pending in the MSHR turns stable, meaning that the ongoing transaction is over. Finally, an entry is updated when some of the information stored in the MSHR has to change while the cache line state is still non-stable. For example, if the pending transaction is waiting for acknowledgements from all the sharers, each incoming ack message increases the number of acks received (and this information is updated in the MSHR), but the transaction is still ongoing until all the ack messages have arrived. Each condition is represented by a signal that is asserted by the protocol ROM.

cc3_update_mshr_en = ( pr_output.allocate_mshr_entry || pr_output.update_mshr_entry || pr_output.deallocate_mshr_entry );

Whenever the control signal do_replacement is asserted, an MSHR entry is pre-allocated. This is necessary because otherwise the data computed by the Replacement Logic could be lost. Stage 1 checks whether an entry is pre-allocated during scheduling by reading the waiting_for_eviction bit (see Request Issue Signals).

Note that when a request issued by Stage 1 allocates a new entry, the index of an empty entry is provided directly by the MSHR (through Stage 2). Due to the previous assumptions, an empty MSHR entry is guaranteed to exist; otherwise the request would not have been issued (see Request Issue Signals). If the operation is an update or a deallocation, the index is the one obtained by Stage 1 when querying the MSHR for the entry associated with the current request (see MSHR Signals).

cc3_update_mshr_index = cc2_request_mshr_hit ? cc2_request_mshr_index : cc2_request_mshr_empty_index;
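The enable and index selection described in this section can be condensed into a small behavioral sketch. The Python function below is illustrative only; `mshr_update_signals` is a hypothetical name and its arguments mirror the RTL signals shown above.

```python
from typing import Tuple


def mshr_update_signals(allocate: bool, update: bool, deallocate: bool,
                        mshr_hit: bool, mshr_index: int,
                        mshr_empty_index: int) -> Tuple[bool, int]:
    """Return (update enable, index of the MSHR entry to write)."""
    # Any of the three protocol ROM requests enables the write port.
    enable = allocate or update or deallocate
    # An entry already associated with the request is reused (update or
    # deallocation); otherwise the first empty entry is allocated.
    index = mshr_index if mshr_hit else mshr_empty_index
    return enable, index
```

For example, a request that hits entry 2 of the MSHR updates or deallocates entry 2, while a request that misses the MSHR allocates the empty entry reported by the MSHR.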

Cache Update Logic

Both the data cache and the coherence cache can be updated after a coherence transaction has been processed. If a replacement occurs, the CC_REPLACEMENT command is issued to the load/store unit, which will prepare the block for eviction. Otherwise, the cache block itself has to be updated: if the update involves only privileges, the CC_UPDATE_INFO command is issued; if both a new block and its privileges have to be written into the L1 cache, the CC_UPDATE_INFO_DATA command is issued.

// Data cache signals

assign cc3_update_ldst_command = do_replacement ? CC_REPLACEMENT : ( pr_output.write_data_on_cache ? CC_UPDATE_INFO_DATA : CC_UPDATE_INFO );

assign cc3_update_ldst_way = cc2_request_snoop_hit ? cc2_request_snoop_way_idx : cc2_request_lru_way_idx;

...

// Coherence cache signals

assign cc3_update_coherence_state_index = cc2_request_address.index;

assign cc3_update_coherence_state_way = cc2_request_snoop_hit ? cc2_request_snoop_way_idx : cc2_request_lru_way_idx;

assign cc3_update_coherence_state_entry = pr_output.next_state;

Data cache is updated whenever updating privileges for a block in the L1 is necessary, or whenever a new block is received and has to be stored in the cache along with its privileges.

...

cc3_update_ldst_valid = ( pr_output.update_privileges && cc2_request_snoop_hit ) || pr_output.write_data_on_cache;

...

The code above describes the condition for updating a line in the L1 cache. The cache is updated whenever the block state becomes stable (its transaction is over and it has been deallocated from the MSHR) and there is a cache hit (the block is already stored in the cache). Otherwise, whenever the coherence protocol requires the update of the cache, this is signalled through the pr_output.write_data_on_cache bit, an output of the protocol ROM.

...

cc3_update_coherence_state_en = ( pr_output.next_state_is_stable && cc2_request_snoop_hit ) || ( pr_output.next_state_is_stable && !cc2_request_snoop_hit && pr_output.write_data_on_cache );

...
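The update conditions of this section can be summarized in a behavioral sketch. The Python functions below are hypothetical helpers that mirror the expressions shown above; the command names are represented as plain strings.

```python
def ldst_command(do_replacement: bool, write_data_on_cache: bool) -> str:
    """Command sent to the load/store unit."""
    if do_replacement:
        return "CC_REPLACEMENT"
    return "CC_UPDATE_INFO_DATA" if write_data_on_cache else "CC_UPDATE_INFO"


def update_way(snoop_hit: bool, snoop_way_idx: int, lru_way_idx: int) -> int:
    """Way to update: the hitting way, or the LRU way on a miss."""
    return snoop_way_idx if snoop_hit else lru_way_idx


def ldst_update_valid(update_privileges: bool, snoop_hit: bool,
                      write_data_on_cache: bool) -> bool:
    """Data cache update enable."""
    return (update_privileges and snoop_hit) or write_data_on_cache


def coherence_state_update_enable(next_state_is_stable: bool, snoop_hit: bool,
                                  write_data_on_cache: bool) -> bool:
    """Coherence cache update enable.

    Logically equivalent to the two-term expression of the RTL:
    stable && hit || stable && !hit && write  ==  stable && (hit || write).
    """
    return next_state_is_stable and (snoop_hit or write_data_on_cache)
```

The last function shows that the coherence state is written only for stable states, either on a block already present in the cache or on a block that is being allocated.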

Furthermore, if the request is a forwarded coherence request then L1 cache data are forwarded to the message generator in stage 4 in order to be sent to the requestor.

assign cc3_snoop_data_valid = cc2_request_valid && pr_output.send_data_from_cache;

assign cc3_snoop_data_set = cc2_request_address.index;

assign cc3_snoop_data_way = cc2_request_snoop_way_idx;

Stage 4

This stage generates a correct request/response message for the network interface whenever a message is issued from the third stage.