Heterogeneous Tile

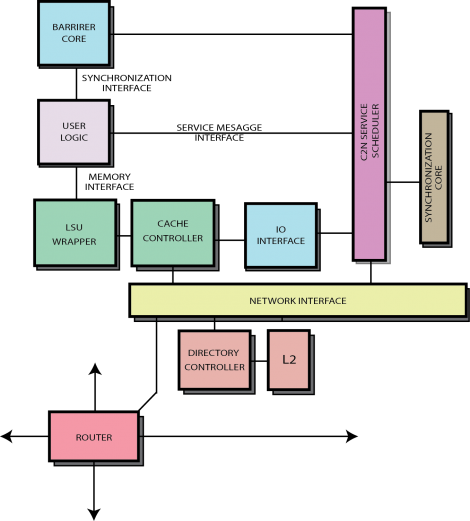

The NaplesPU project provides a heterogeneous tile (HT) integrated into the NoC and meant to be extended by the user. The tile serves as a first example of how to integrate a custom module within the network-on-chip. The project ships a dedicated prototype in src/mc/tile/tile_ht.sv as the starting point for custom logic.

The provided tile_ht module instantiates all the modules typical of an NPU tile except for the GPGPU core. It also instantiates a modified version of the load/store unit (LSU), wrapped in a simplified interface that a custom component can access easily.

The HT tile offers users two main interfaces to interact with the system, the Memory Interface and the Synchronization Interface, both explained below together with the Service Message Interface. The tile exposes the following parameters for user-specific configuration:

    `include "npu_user_defines.sv"
    `include "npu_defines.sv"
    `include "npu_coherence_defines.sv"

    module tile_ht # (
        parameter TILE_ID        = 0,                 // Current tile ID
        parameter CORE_ID        = 0,                 // Current core ID, not used in this type of tile
        parameter TILE_MEMORY_ID = 9,                 // ID of the memory controller tile
        // Supported thread number, each thread has a separate FIFO in the LSU and
        // requests from different threads are elaborated concurrently - Must be a power of two
        parameter THREAD_NUMB    = 8,
        parameter ADDRESS_WIDTH  = `ADDRESS_SIZE,     // Memory address width - has to be congruent with the system address width
        parameter DATA_WIDTH     = `CACHE_LINE_WIDTH, // Data bus width - has to be congruent with the system
        parameter L1_WAY_NUMB    = 4,                 // Number of ways in the L1 data cache
        parameter L1_SET_NUMB    = 32,                // Number of L1 data sets
        parameter SYNCH_SUPP     = 1                  // Allocates barrier_core modules for synchronization support
    )

Memory Interface

The Memory Interface provides a transparent way to interact with the coherence system. It implements a simple valid/available handshake per thread: different threads may issue different memory transactions, and the coherence system handles them concurrently.

When a thread has a memory request, it first checks the availability bit associated with its ID. If the LSU is available for that thread (its bit in lsu_het_almost_full, described below, is low), the thread issues a memory transaction by setting the valid bit and driving all the required information onto the Memory Interface.

Supported memory operations are reported below along with their opcodes:

    LOAD_8     = 'h0  - 'b000000
    LOAD_16    = 'h1  - 'b000001
    LOAD_32    = 'h2  - 'b000010
    LOAD_V_8   = 'h7  - 'b000111
    LOAD_V_16  = 'h8  - 'b001000
    LOAD_V_32  = 'h9  - 'b001001
    STORE_8    = 'h20 - 'b100000
    STORE_16   = 'h21 - 'b100001
    STORE_32   = 'h22 - 'b100010
    STORE_V_8  = 'h24 - 'b100100
    STORE_V_16 = 'h25 - 'b100101
    STORE_V_32 = 'h26 - 'b100110
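For user logic it can be convenient to collect the opcodes listed above in one place. The following is a minimal sketch, not part of the NaplesPU sources: the package name `het_mem_ops_pkg`, the type name `het_mem_op_t`, the `HET_` prefix (chosen here only to avoid clashing with names the project's define files may already declare), and the helper `het_is_store` are all hypothetical. The encodings are copied from the list, widened to the 8-bit `req_out_op` field.

```systemverilog
// Hypothetical package: names are illustrative, values come from the opcode list.
package het_mem_ops_pkg;

    typedef enum logic [7:0] {
        HET_LOAD_8     = 8'h00,
        HET_LOAD_16    = 8'h01,
        HET_LOAD_32    = 8'h02,
        HET_LOAD_V_8   = 8'h07,
        HET_LOAD_V_16  = 8'h08,
        HET_LOAD_V_32  = 8'h09,
        HET_STORE_8    = 8'h20,
        HET_STORE_16   = 8'h21,
        HET_STORE_32   = 8'h22,
        HET_STORE_V_8  = 8'h24,
        HET_STORE_V_16 = 8'h25,
        HET_STORE_V_32 = 8'h26
    } het_mem_op_t;

    // Observation about the listed values only: every store opcode has
    // bit 5 set and every load opcode has it clear.
    function automatic logic het_is_store(input logic [7:0] op);
        return op[5];
    endfunction

endpackage
```

With such a package imported, a request could drive `req_out_op` with `HET_STORE_8` instead of a bare `'b100000` literal.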

A custom core to be integrated into the NaplesPU system has to implement the following interface in order to communicate with the memory system:

    /* Memory Interface */
    // To Heterogeneous LSU
    output logic                             req_out_valid,       // Valid signal for issued memory requests
    output logic [31 : 0]                    req_out_id,          // ID of the issued request, mainly used for debugging
    // Thread ID of issued request. Requests running on different threads are dispatched to the CC concurrently
    output logic [THREAD_IDX_W - 1 : 0]      req_out_thread_id,
    output logic [7 : 0]                     req_out_op,          // Operation performed
    output logic [ADDRESS_WIDTH - 1 : 0]     req_out_address,     // Issued request address
    output logic [DATA_WIDTH - 1 : 0]        req_out_data,        // Data output

    // From Heterogeneous LSU
    input  logic                             resp_in_valid,       // Valid signal for the incoming responses
    input  logic [31 : 0]                    resp_in_id,          // ID of the incoming response, mainly used for debugging
    input  logic [THREAD_IDX_W - 1 : 0]      resp_in_thread_id,   // Thread ID of the incoming response
    input  logic [7 : 0]                     resp_in_op,          // Operation code
    input  logic [DATA_WIDTH - 1 : 0]        resp_in_cache_line,  // Incoming data
    input  logic [BYTES_PERLINE - 1 : 0]     resp_in_store_mask,  // Bitmask of the position of the requesting bytes in the incoming data bus
    input  logic [ADDRESS_WIDTH - 1 : 0]     resp_in_address,     // Incoming response address

The Heterogeneous Tile shares the same LSU and cache controller (CC) as an NPU tile; consequently, the LSU forwards its backpressure signals on the Memory Interface, as follows:

    // From Heterogeneous accelerator - Backpressure signals
    input  logic [THREAD_NUMB - 1 : 0]       lsu_het_almost_full,            // Thread bitmask, if i-th bit is high, i-th thread cannot issue requests.
    input  logic [THREAD_NUMB - 1 : 0]       lsu_het_no_load_store_pending,  // Thread bitmask, if i-th bit is low, i-th thread has no pending operations.

In particular, the i-th bit of lsu_het_almost_full has to be low before a memory request is issued for the i-th thread.
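The request rule above can be sketched as a small requester that raises `req_out_valid` only when the thread's bit in `lsu_het_almost_full` is low. This is an illustration under assumptions, not the project's implementation: the module name, the `start`, `thread_id` and `address` inputs, the 32-bit default address width, the synchronous reset, and the one-cycle valid pulse are all hypothetical choices, and only a subset of the Memory Interface is shown.

```systemverilog
// Hypothetical requester: issues a LOAD_32 for one thread when asked,
// and only if the LSU is not almost full for that thread.
module het_req_issue_sketch #(
    parameter THREAD_NUMB   = 8,
    parameter THREAD_IDX_W  = $clog2(THREAD_NUMB),
    parameter ADDRESS_WIDTH = 32                     // assumed width
) (
    input  logic                         clk,
    input  logic                         reset,
    // User-side controls (hypothetical names)
    input  logic                         start,
    input  logic [THREAD_IDX_W - 1 : 0]  thread_id,
    input  logic [ADDRESS_WIDTH - 1 : 0] address,
    // Memory Interface (subset)
    input  logic [THREAD_NUMB - 1 : 0]   lsu_het_almost_full,
    output logic                         req_out_valid,
    output logic [THREAD_IDX_W - 1 : 0]  req_out_thread_id,
    output logic [7 : 0]                 req_out_op,
    output logic [ADDRESS_WIDTH - 1 : 0] req_out_address
);

    always_ff @(posedge clk) begin
        if (reset) begin
            req_out_valid <= 1'b0;
        end else begin
            // Valid is raised for one cycle, and only when the bit of the
            // requesting thread in lsu_het_almost_full is low.
            req_out_valid     <= start & ~lsu_het_almost_full[thread_id];
            req_out_thread_id <= thread_id;
            req_out_op        <= 8'h02;              // LOAD_32
            req_out_address   <= address;
        end
    end

endmodule
```

If `start` arrives while the thread's bit is high, this sketch simply drops the request; real user logic would more likely hold the request until the bit goes low.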

The Memory Interface provides performance counters as part of its interface:

    // From Heterogeneous LSU - Performance counters
    input  logic                             resp_in_miss,   // LSU miss on resp_in_address
    input  logic                             resp_in_evict,  // LSU eviction (replacement) on resp_in_address
    input  logic                             resp_in_flush,  // LSU flush on resp_in_address
    input  logic                             resp_in_dinv,   // LSU data cache invalidation on resp_in_address

These signals indicate when the L1 data cache incurs a miss, an eviction (or replacement), a flush, or a data cache invalidation.

The LSU in the Heterogeneous Tile can be configured in two modes, Write-Through and Write-Back:

    output logic                             lsu_het_ctrl_cache_wt,  // Enable Write-Through cache configuration.

When lsu_het_ctrl_cache_wt is high, the LSU acts as a Write-Through cache; when it is low, the LSU implements a Write-Back mechanism.

Finally, the Memory Interface provides error signals that report a misaligned address in an issued request:

    // Heterogeneous accelerator - Flush and Error signals
    input  logic                             lsu_het_error_valid,      // Error coming from LSU
    input  register_t                        lsu_het_error_id,         // Error ID - Misaligned = 380
    input  logic [THREAD_IDX_W - 1 : 0]      lsu_het_error_thread_id,  // Thread involved in the Error
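One possible way for user logic to consume these error signals is to keep a sticky per-thread flag. The sketch below is an assumption-laden illustration: the module and the `thread_misaligned` output are hypothetical, `register_t` is replaced by a 32-bit vector as a stand-in, and the error ID 380 from the comment above is read as a decimal value.

```systemverilog
// Hypothetical error latch: remembers which threads reported a
// misaligned access until the next reset.
module het_error_latch_sketch #(
    parameter THREAD_NUMB  = 8,
    parameter THREAD_IDX_W = $clog2(THREAD_NUMB)
) (
    input  logic                        clk,
    input  logic                        reset,
    input  logic                        lsu_het_error_valid,
    input  logic [31 : 0]               lsu_het_error_id,        // stand-in for register_t
    input  logic [THREAD_IDX_W - 1 : 0] lsu_het_error_thread_id,
    output logic [THREAD_NUMB - 1 : 0]  thread_misaligned        // hypothetical status output
);

    // Error ID taken from the interface comment, assumed to be decimal.
    localparam logic [31 : 0] ERR_MISALIGNED = 32'd380;

    always_ff @(posedge clk) begin
        if (reset)
            thread_misaligned <= '0;
        else if (lsu_het_error_valid && lsu_het_error_id == ERR_MISALIGNED)
            thread_misaligned[lsu_het_error_thread_id] <= 1'b1;
    end

endmodule
```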

Synchronization Interface

The Synchronization Interface connects the user logic with the core-side synchronization module allocated within the tile (namely the barrier_core unit).

This interface allows user logic to synchronize at thread granularity. When the SYNCH_SUPP parameter is set to 1, the tile implements synchronization support by allocating a barrier_core module, which handles synchronization events on the core side:

    // SYNCH_SUPP parameter allocates the following barrier_core, which
    // provides core-side synchronization support.
    generate
        if ( SYNCH_SUPP == 1) begin
            barrier_core # (
                .TILE_ID        ( TILE_ID     ),
                .THREAD_NUMB    ( THREAD_NUMB ),
                .MANYCORE       ( 1           ),
                .DIS_SYNCMASTER ( DISTR_SYNC  )
            )
            u_barrier_core (
                .clk                ( clk               ),
                .reset              ( reset             ),
                // Synch Request - Core Interface
                .opf_valid          ( breq_valid        ),
                .opf_inst_scheduled ( bc_inst_scheduled ),
                .opf_fetched_op0    ( breq_barrier_id   ),
                .opf_fetched_op1    ( breq_thread_numb  ),
                .bc_release_val     ( bc_release_val    ),
                ...
            );
        end else begin
            assign bc_release_val                = {THREAD_NUMB{1'b1}};
            assign c2n_account_valid             = 1'b0;
            assign c2n_account_message           = sync_account_message_t'(0);
            assign c2n_account_destination_valid = tile_mask_t'(0);
            assign n2c_mes_service_consumed      = 1'b0;
        end
    endgenerate

The synchronization mechanism supports inter- and intra-tile barrier synchronization. When a thread hits a synchronization point, it issues a request to the distributed synchronization master through the Synchronization Interface.

The thread is then stalled (the stalling itself is up to the user logic) until its release signal is high again.

A custom core may implement the following interface if synchronization is required:

    /* Synchronization Interface */
    // To Barrier Core
    output logic                             breq_valid,        // Hit barrier signal, sends a synchronization request
    output logic [31 : 0]                    breq_op_id,        // Synchronization operation ID, mainly used for debugging
    output logic [THREAD_NUMB - 1 : 0]       breq_thread_id,    // ID of the thread performing the synchronization operation
    output logic [31 : 0]                    breq_barrier_id,   // Barrier ID, has to be unique in case of concurrent barriers
    output logic [31 : 0]                    breq_thread_numb,  // Total number - 1 of synchronizing threads on the current barrier ID

    // From Barrier Core
    input  logic [THREAD_NUMB - 1 : 0]       bc_release_val     // Stalled threads bitmask waiting for release (the i-th bit low stalls the i-th thread)
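The request/stall behaviour described above could be sketched as follows. This is a hedged illustration covering only a subset of the Synchronization Interface: the module name, the `hit_barrier` input, the `thread_stalled` output, and the `SYNC_THREADS` and `BARRIER_ID` parameters are hypothetical, and a one-cycle `breq_valid` pulse per request is an assumption.

```systemverilog
// Hypothetical barrier helper: forwards a barrier hit as a request and
// derives which threads the user logic should keep stalled.
module het_barrier_sketch #(
    parameter THREAD_NUMB  = 8,
    parameter SYNC_THREADS = 4,    // hypothetical: threads meeting at this barrier
    parameter BARRIER_ID   = 42    // must be unique among concurrent barriers
) (
    input  logic                       clk,
    input  logic                       reset,
    input  logic                       hit_barrier,      // hypothetical pulse from user logic
    input  logic [THREAD_NUMB - 1 : 0] bc_release_val,
    output logic                       breq_valid,
    output logic [31 : 0]              breq_barrier_id,
    output logic [31 : 0]              breq_thread_numb,
    output logic [THREAD_NUMB - 1 : 0] thread_stalled    // hypothetical stall mask
);

    assign breq_barrier_id  = BARRIER_ID;
    // The interface comment asks for the number of synchronizing threads minus one.
    assign breq_thread_numb = SYNC_THREADS - 1;

    // A low bit in bc_release_val stalls the corresponding thread.
    assign thread_stalled   = ~bc_release_val;

    always_ff @(posedge clk) begin
        if (reset)
            breq_valid <= 1'b0;
        else
            breq_valid <= hit_barrier;   // one request per barrier hit
    end

endmodule
```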

Service Message Interface

The Service Message Interface connects the user logic with the service network. The heterogeneous tile can send messages to other nodes through this interface, which is often used for host-tile communication. A custom core may implement the following interface if communication through messages is required:

    /* Service Message Interface */
    // From Service Network
    input  logic                             message_in_valid,          // Valid bit for incoming Service Message
    input  service_message_t                 message_in,                // Incoming message from Service Network
    output logic                             n2c_mes_service_consumed,  // Service Message consumed

    // To Service Network
    output logic                             message_out_valid,         // Valid bit for outgoing Service Message
    output service_message_t                 message_out,               // Outgoing Service Message data
    input  logic                             network_available,         // Service Network availability bit
    output tile_mask_t                       destination_valid          // One-Hot destinations bitmap

The Service Message Interface is a standard valid/available interface. When message_in_valid is asserted, the incoming message message_in is valid, and the user logic has to assert the n2c_mes_service_consumed bit for one clock cycle to signal to the Network Interface that the message has been correctly received and handled. The incoming message is declared as a service_message_t type, as shown in the following code snippet:

    typedef struct packed {
        service_message_type_t message_type;
        service_message_data_t data;
    } service_message_t;

The data field stores the received information, while message_type states the nature of the incoming message. For the heterogeneous tile this value may be HOST, for a message from the host, or HT_CORE, for a message from another heterogeneous tile.

Conversely, whenever the user logic has a message to send over the network, it builds the output message message_out, storing the message body in the data field and tying the message_type field to the HT_CORE value. The destination tile (or tiles) is then declared in the destination_valid output bitmask. Each bit of the mask represents a different tile according to its position, e.g. the bit in position 0 targets tile 0, and so on. The connected Network Interface forwards the message to each tile set in the mask. When both message and destinations are ready, the user logic first checks the availability of the network by reading the network_available input bit, waiting for it if necessary. The control logic then asserts the message_out_valid signal, forwarding both message and destinations to the Network Interface, which takes care of the delivery from that moment onward.
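The receive and send flows described above can be sketched in a single module. Everything here is illustrative: the package `het_msg_sketch_pkg` only provides stand-in types so that the example is self-contained (the real `service_message_t`, `tile_mask_t` and enum encodings come from the NaplesPU define files, and the widths and tile count below are placeholders). The `send`, `payload`, `dest_tile` and `last_data` ports are hypothetical, and the sketch assumes that `message_in_valid` stays asserted until the message is consumed and that the network accepts a message in the cycle where both `message_out_valid` and `network_available` are high.

```systemverilog
// Stand-in types, NOT the project's definitions.
package het_msg_sketch_pkg;
    localparam int TILE_COUNT = 4;                               // placeholder
    typedef enum logic [1:0] { HOST, HT_CORE } service_message_type_t;
    typedef logic [31:0] service_message_data_t;                 // placeholder width
    typedef struct packed {
        service_message_type_t message_type;
        service_message_data_t data;
    } service_message_t;
    typedef logic [TILE_COUNT - 1 : 0] tile_mask_t;
endpackage

module het_msg_sketch
    import het_msg_sketch_pkg::*;
(
    input  logic                              clk,
    input  logic                              reset,
    // Hypothetical user-side ports
    input  logic                              send,
    input  service_message_data_t             payload,
    input  logic [$clog2(TILE_COUNT) - 1 : 0] dest_tile,
    output service_message_data_t             last_data,
    // Service Message Interface
    input  logic                              message_in_valid,
    input  service_message_t                  message_in,
    output logic                              n2c_mes_service_consumed,
    output logic                              message_out_valid,
    output service_message_t                  message_out,
    input  logic                              network_available,
    output tile_mask_t                        destination_valid
);

    logic pending;   // a message has been built and is waiting for the network

    always_ff @(posedge clk) begin
        if (reset) begin
            pending                  <= 1'b0;
            n2c_mes_service_consumed <= 1'b0;
        end else begin
            // Receive: store the payload and pulse "consumed" for one cycle.
            n2c_mes_service_consumed <= message_in_valid & ~n2c_mes_service_consumed;
            if (message_in_valid & ~n2c_mes_service_consumed)
                last_data <= message_in.data;

            // Send: build the message, then hold it until the network is available.
            if (send & ~pending) begin
                pending                  <= 1'b1;
                message_out.message_type <= HT_CORE;
                message_out.data         <= payload;
                destination_valid        <= tile_mask_t'(1) << dest_tile;
            end else if (pending & network_available) begin
                pending <= 1'b0;
            end
        end
    end

    // Valid is presented only while the network reports availability.
    assign message_out_valid = pending & network_available;

endmodule
```

To target several tiles at once, the single shifted bit would be replaced by a mask with one bit set per destination tile.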

Heterogeneous Dummy provided

The FSM of the provided dummy core first synchronizes with the other HT tiles in the NoC. Each dummy core in an HT tile requests synchronization for LOCAL_BARRIER_NUMB threads (default = 4).

    // Issue synchronization requests
    SEND_BARRIER : begin
        breq_valid      <= 1'b1;
        breq_barrier_id <= 42;
        barrier_served  <= 1'b1;
        if(rem_barriers == 1)
            next_state <= WAIT_SYNCH;
        else
            next_state <= IDLE;
    end

The SEND_BARRIER state sends LOCAL_BARRIER_NUMB requests with barrier ID 42 through the Synchronization Interface. It sets the total number of threads synchronizing on barrier ID 42 to TOTAL_BARRIER_NUMB (= LOCAL_BARRIER_NUMB x `TILE_HT, where `TILE_HT is the number of heterogeneous tiles in the system). When the last barrier request is issued, SEND_BARRIER jumps to WAIT_SYNCH and waits for the ACK from the synchronization master.
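As a worked example of the thread count above, the following simulation-only sketch computes TOTAL_BARRIER_NUMB for a system with two heterogeneous tiles. The module name is hypothetical, and `TILE_HT` is declared here as a local parameter standing in for the project-level `` `TILE_HT `` define, with a value chosen only for illustration. The second printed value applies the "total number - 1" convention stated in the `breq_thread_numb` interface comment; whether the dummy core subtracts one is not stated in the text, so that line is an assumption.

```systemverilog
// Hypothetical, simulation-only arithmetic check.
module het_barrier_count_sketch;

    localparam int LOCAL_BARRIER_NUMB = 4;   // default quoted in the text
    localparam int TILE_HT            = 2;   // illustrative stand-in for `TILE_HT
    localparam int TOTAL_BARRIER_NUMB = LOCAL_BARRIER_NUMB * TILE_HT;

    initial begin
        // 4 threads per tile x 2 tiles = 8 synchronizing threads
        $display("TOTAL_BARRIER_NUMB = %0d", TOTAL_BARRIER_NUMB);
        // Value to drive on breq_thread_numb under the "number - 1" convention
        $display("breq_thread_numb   = %0d", TOTAL_BARRIER_NUMB - 1);
    end

endmodule
```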

    // Synchronizes all dummy cores
    WAIT_SYNCH : begin
        if(&bc_release_val)
            next_state <= IDLE;
    end

At this point, all threads in each HT tile are synchronized, and the FSM starts all pending memory transactions.

The START_MEM_READ_TRANS state performs LOCAL_WRITE_REQS read operations (default = 128), each one a LOAD_8 operation (opcode = 0). In the default configuration, 128 LOAD_8 operations on consecutive addresses are spread among all threads and issued to the LSU through the Memory Interface. When the read operations are over, the FSM starts the write operations in a similar way.

    // Starting multiple read operations
    START_MEM_READ_TRANS : begin
        if ( rem_reads == 1 )
            next_state <= DONE;
        else
            next_state <= IDLE;

        if(lsu_het_almost_full[thread_id_read] == 1'b0) begin
            read_served       <= 1'b1;
            req_out_valid     <= 1'b1;
            req_out_id        <= rem_reads;
            req_out_op        <= 0; // LOAD_8
            incr_address      <= 1'b1;
            req_out_thread_id <= thread_id_read;
        end
    end

The START_MEM_WRITE_TRANS state performs LOCAL_WRITE_REQS (default = 128) write operations on consecutive addresses through the Memory Interface. This time the operation performed is a STORE_8, and all HT tiles issue the same store operation on the same addresses, competing for ownership in a transparent way. Coherence is handled entirely by the LSU and the CC; on the core side, the lsu_het_almost_full bitmap states the availability of the LSU for each thread (for both writes and reads).

    // Starting multiple write operations
    START_MEM_WRITE_TRANS : begin
        if ( pending_writes )
            next_state <= IDLE;
        else
            next_state <= DONE;

        if(lsu_het_almost_full[thread_id_write] == 1'b0 ) begin
            write_served      <= 1'b1;
            req_out_valid     <= 1'b1;
            req_out_id        <= rem_writes;
            req_out_thread_id <= thread_id_write;
            req_out_op        <= 'b100000; // STORE_8
            tmp_data_out[0]   <= 8'hee;
            incr_address      <= 1'b1;
        end
    end

In both states, a thread first checks the availability bit stored at the position equal to its ID (lsu_het_almost_full[thread_id], which must be low), then performs a memory transaction.

Adding custom logic

The provided heterogeneous dummy core can be replaced with a custom accelerator or other user logic, which has to be instantiated in tile_ht in place of the following lines:

    // -----------------------------------------------------------------------
    // -- Tile HT - Dummy Core
    // -----------------------------------------------------------------------
    het_core_example #(
        .TILE_ID     ( TILE_ID     ),
        .THREAD_NUMB ( THREAD_NUMB )
    ) u_dummy_het_core (
        .clk               ( clk              ),
        .reset             ( reset            ),

        /* Memory Interface */
        .req_out_valid     ( req_in_valid     ),
        .req_out_id        ( req_in_id        ),
        .req_out_thread_id ( req_in_thread_id ),
        .req_out_op        ( req_in_op        ),
        .req_out_address   ( req_in_address   ),
        .req_out_data      ( req_in_data      ),
        ...

        /* Synchronization Interface */
        .breq_valid        ( breq_valid       ),
        .breq_op_id        ( breq_op_id       ),
        .breq_thread_id    ( breq_thread_id   ),
        .breq_barrier_id   ( breq_barrier_id  ),
        .breq_thread_numb  ( breq_thread_numb ),
        ...
    );