Coherence Injection

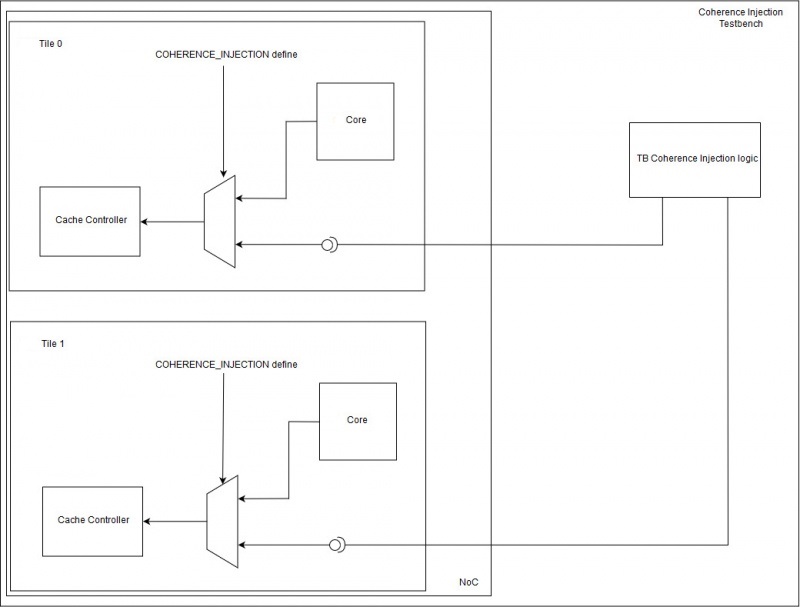

Latest revision as of 15:44, 25 June 2019

In this guide, you will find all the information on how to set up a Vivado project to test coherence injection and how to use the testbench correctly.


Setup Vivado project

Install Vivado

First, install a licensed version of Vivado. This guide assumes Vivado 2018.2.2 with a WebPack license; this free license is sufficient for simulation purposes.

Get NaplesPU

First, git clone the latest version of the NPU repository, then check out the coherence_testing branch. If the file tb_coherence_injection.sv exists, you are on the right branch.
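The branch check can also be scripted. The following is a minimal sketch, not part of the repository: the helper name on_coherence_branch is hypothetical, and it searches the whole tree because the location of the testbench file inside the repository is not stated here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if tb_coherence_injection.sv exists anywhere
# under the given directory (default: the current directory).
on_coherence_branch() {
    local repo_dir="${1:-.}"
    find "$repo_dir" -type f -name 'tb_coherence_injection.sv' | grep -q .
}

# Typical use from inside the cloned repository:
#   git checkout coherence_testing
#   on_coherence_branch . && echo "right branch" || echo "wrong branch"
```

The function only reads the file system, so it is safe to run before or after switching branches.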

Run Vivado

Open a terminal window and move into the NaplesPU repository directory. Run the ls or dir command; you should see these folders:

- src

- toolchain

- tools

Now source the Vivado settings and then run the setup_project.tcl script. The following sections show how to do this on both Linux and Windows.

Linux

Go into the directory of the cloned repository:

cd ~/npu

Create the folder that will hold a sample kernel image and copy the kernel there:

mkdir -p software/kernels && cp -R "path/to/sample-kernel/mmsc" software/kernels

Now source Vivado settings:

source ~/Xilinx/Vivado/2018.2/settings64.sh

Finally, set up the project and wait for Vivado to be launched:

tools/vivado/setup_project.sh -k mmsc -c 3 -t $(( 16#F )) -m gui

About the parameters:

- 3 is the bitmask (one bit per core) that says which cores should be active: 3 is (11)2, so 2 cores are active;

- $(( 16#F )) is the bitmask (one bit per thread) that says which threads should be active in a core: F is (00001111)2, so 4 threads are active;

- gui is the mode in which Vivado is executed (you can run it as batch if you prefer).
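To see how a mask value translates into a number of active cores or threads, the set bits can be counted with bash arithmetic. This is only an illustrative sketch: count_enabled is a hypothetical helper, not part of the NaplesPU tools.

```shell
#!/usr/bin/env bash
# Count the bits set in a mask: each set bit enables one core or one thread.
count_enabled() {
    local mask=$(( $1 ))   # accepts any bash arithmetic literal: 3, 0xF, 16#F
    local count=0
    while (( mask > 0 )); do
        count=$(( count + (mask & 1) ))
        mask=$(( mask >> 1 ))
    done
    echo "$count"
}

echo "cores:   $(count_enabled 3)"       # prints "cores:   2"
echo "threads: $(count_enabled '16#F')"  # prints "threads: 4"
```

The 16#F notation is bash syntax for the base-16 literal F, which is why the thread mask is wrapped in $(( )) on the command line.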

These parameters are actually ignored, since in the coherence testbench the NPU core is physically disconnected from the cache controller.

Windows

We assume Windows 10 as the OS, with the git for Windows tool installed.

Press Windows Key + R on your keyboard, type cmd and press return; then go into the directory of the cloned repository:

cd C:\npu\

Create the folder that will hold a sample kernel image and copy the kernel there:

mkdir software\kernels

xcopy /s /i "path/to/sample-kernel" C:\npu\software\kernels

Now source Vivado settings:

C:\Xilinx\Vivado\2018.2\settings64.bat

Finally, set up the project and wait for Vivado to be launched:

vivado -nolog -nojournal -mode gui -source tools/vivado/setup_project.tcl -tclargs mmsc 15 3

About the parameters:

- 15 is the bitmask (one bit per thread) that says which threads should be active in a core: 15 is (00001111)2, so 4 threads are active;

- 3 is the bitmask (one bit per core) that says which cores should be active: 3 is (11)2, so 2 cores are active;

- gui is the mode in which Vivado is executed (you can run it as batch if you prefer).

These parameters are actually ignored, since in the coherence testbench the NPU core is physically disconnected from the cache controller.

Understanding the Testbench

In the tb_coherence_injection.sv testbench, miss requests are injected directly into the cache controller, bypassing the NPU core.

This is done through a conditionally compiled assignment of the cache controller's input signals. Below is the instantiation of the module l1d_cache, representing the L1 data cache.

// file: src/mc/tile/tile_npu.sv

l1d_cache #(

.TILE_ID( TILE_ID ),

.CORE_ID( CORE_ID ) )

u_l1d_cache (

.clk ( clk ),

.reset ( reset ),

`ifndef COHERENCE_INJECTION

.ldst_instruction ( ldst_instruction ),

.ldst_address ( ldst_address ),

.ldst_miss ( ldst_miss ),

.ldst_evict ( ldst_evict ),

.ldst_cache_line ( ldst_cache_line ),

.ldst_flush ( ldst_flush ),

.ldst_dinv ( ldst_dinv ),

.ldst_dirty_mask ( ldst_dirty_mask ),

`else

.ldst_instruction ( ldst_instruction_inject ),

.ldst_address ( ldst_address_inject ),

.ldst_miss ( ldst_miss_inject ),

.ldst_evict ( ldst_evict_inject ),

.ldst_cache_line ( ldst_cache_line_inject ),

.ldst_flush ( ldst_flush_inject ),

.ldst_dinv ( ldst_dinv_inject ),

.ldst_dirty_mask ( ldst_dirty_mask_inject ),

`endif

// other signal assignments

...

);

When the COHERENCE_INJECTION symbol is defined (see src/include/npu_user_defines.sv), the cache controller inputs that carry core requests are no longer connected to the Load-Store Unit. They are connected instead to signals with the _inject suffix, which are meant to be driven by the injection tasks and are left unlinked by default. Beware: if the injection testbench is enabled but the setup_injection_channel task is never called, the cache controller receives no input stimulus and therefore remains idle.

In this way, the testbench can attach to these signals and send coherence requests directly to the cache controller, pretending to be the Load-Store Unit. This is the job of the task setup_injection_channel, which contains statements of this type:

...

assign u_npu_noc.NOC_ROW_GEN[0].NOC_COL_GEN[0].TILE_NPU_INST.u_tile_npu.ldst_instruction_inject = t_00_ldst_instruction_inject;

...

Using the Testbench

Now it's time to use the testbench, stressing the cache controller with purpose-built requests.

Generate and submit a random coherence request

Before submitting a coherence request, we have to create one. This can be done using the function create_random_coherence_request, which populates a tb_coherence_request_t randomly, using the $urandom system function.

The struct tb_coherence_request_t is made of five self-explanatory fields:

typedef struct packed {

tb_request_type_t request_type;

dcache_address_t address;

requestor_t requestor;

dcache_line_t cache_line;

dcache_store_mask_t dirty_mask;

} tb_coherence_request_t;

Once the coherence request has been created, it can be submitted to a cache controller. This is done by the task submit_request, which consists of two parts:

- Based on the request type, it sets the injection signals accordingly;

- Based on the requestor, it decides into which tile to inject the request.

Thread emulation logic

To emulate the behaviour of an NPU core, the thread scheduling uses stalling logic that prevents a thread from submitting a new request while one of its previous requests is still in progress. To guarantee this, the corresponding bit of a bitmask is set after each submission from a thread.

De-stalling logic is therefore needed as well, and it is implemented in a separate process:

always_ff @( posedge clk ) begin : TILE0_WAKEUP

if(t_00_wakeup)

begin

t_00_stall_mask[t_00_wakeup_thread_id] <= 1'b0;

$display("[Time %t] [TESTBENCH] [TILE0] Waking up thread %d", $time(), t_00_wakeup_thread_id);

end

end

Termination logic

The testbench implements termination logic: the simulation ends when one of the following events occurs:

- One of the NPU tiles has reached the target number of requests. This number is given by the parameter TB_REQUEST_NUMBER;

- One of the NPU tiles has all its threads stalled (i.e. the stall_mask contains all ones) and a certain number of subsequent clock ticks has elapsed. This number is given by the parameter TB_DEADLOCK_THRESHOLD.

The first case counts as a success, while the second indicates a deadlock of an entire NPU core.
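The stall mask and the two termination conditions can be modelled with plain integer arithmetic. The sketch below is not the testbench code: it is a bash model in which the function names, the THREAD_NUMB variable, the parameter values and the use of >= for the comparisons are all assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Model of one tile's stall mask; all names and values here are illustrative.
THREAD_NUMB=4                 # assumed number of threads per core
TB_REQUEST_NUMBER=100         # assumed target number of requests
TB_DEADLOCK_THRESHOLD=1000    # assumed number of clock ticks

# Set the bit of a thread that has just submitted a request.
#   $1 = current stall mask, $2 = thread id
stall_thread()  { echo $(( $1 | (1 << $2) )); }

# Clear the bit of a thread that has been woken up.
#   $1 = current stall mask, $2 = thread id
wakeup_thread() { echo $(( $1 & ~(1 << $2) )); }

# Print "success", "deadlock" or "running" for the given tile state.
#   $1 = requests completed, $2 = stall mask, $3 = ticks spent fully stalled
termination_status() {
    local all_ones=$(( (1 << THREAD_NUMB) - 1 ))
    if (( $1 >= TB_REQUEST_NUMBER )); then
        echo "success"
    elif (( $2 == all_ones && $3 >= TB_DEADLOCK_THRESHOLD )); then
        echo "deadlock"
    else
        echo "running"
    fi
}
```

For example, with four threads the all-ones mask is 15, so termination_status 5 15 1000 reports a deadlock, while the same mask after only 10 ticks is still considered running.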

Attachments:

File:Sample-kernel.zip