Single Core Cache Controller

This page describes the L1 cache controller (CC), which is located in the nu+ core and directly connected to the LDST unit, Core Interface (CI), Thread Controller (TC), Memory Controller (MeC) and Instruction Cache (IC). Its main task is to handle requests from the core (load/store miss, instruction miss, flush, evict, data invalidate) and to serialize them. The requests are scheduled with a fixed priority.

Requests are of two types: READ and WRITE. Load/store and instruction misses are READ requests, because the cache is read after the CC has fetched the data from memory; flush, replacement and data invalidate (DINV) are WRITE requests, because they have to write into the cache.

TODO: perhaps add a diagram of all the components connected to the CC

In a single-core architecture, there is no need for a Miss Status Holding Register (MSHR).

Interface

This section describes the interfaces between the CC and the units connected to it.

To/from Core interface

The CI is a component that buffers all requests between the LDST unit and the CC. Thanks to this component, the service rate of the CC is decoupled from that of the LDST units. The cache controller can execute only one request at a time, but there is more than one LDST unit, so several requests can be issued at the same time. The Core Interface receives a request from the LDST unit (any memory-related event: instruction miss, load/store miss, flush, evict) and puts it in one of four queues. Once the CC has finished processing a request, it sends a dequeue signal to the CI so that the request is removed from its queue.

The following lines of code define part of the interface to/from the Core Interface (the store request signals):

~
output logic cc_dequeue_store_request,
~
input logic ci_store_request_valid,
input thread_id_t ci_store_request_thread_id,
input dcache_address_t ci_store_request_address,
input logic ci_store_request_coherent,
~

To/from LDST

TODO

To/from Memory controller

TODO

To/from Instruction cache

TODO

To/from Thread controller

TODO

Implementation

This section describes how the CC is implemented.

FSM

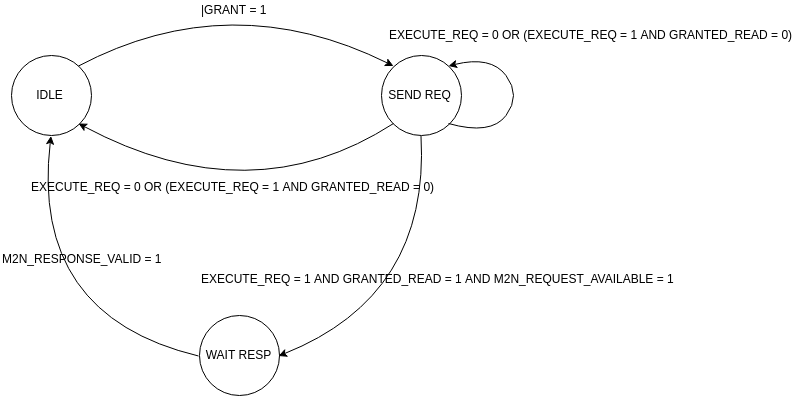

The behaviour is implemented by a finite state machine (FSM). There are three states:

- idle

- send request

- wait response

The figure above shows the state graph of the CC FSM.

The FSM implementation is split into a sequential section and a combinatorial output section.

Sequential section

First, there is a phase in which some signals are initialized.

In the IDLE state, the request is prepared. The preparation depends on the type of request. Below is an example for a LOAD request:

~
if (grants[LOAD]) begin
    granted_read          <= 1'b1;
    granted_write         <= 1'b0;
    granted_need_snoop    <= 1'b1;
    granted_need_hit_miss <= 1'b0;
    granted_wakeup        <= 1'b1;
    granted_thread_id     <= ci_load_request_thread_id;
    granted_address       <= ci_load_request_address;
~

The CC records information such as the ID of the thread that issued the request, the address of the LOAD request and the type of request. If there is at least one request, the CC moves to the SEND REQ state.

In the SEND REQ state, the CC sends the request to the memory. If the request is executable and the memory is available, the CC sends the request by writing its address and kind (READ or WRITE). If the request is a READ, the CC then has to wait for a response. If the request is a WRITE, the CC handles a DINV request by executing the following lines of code and returns to the IDLE state. If the request is not executable, the CC returns to the IDLE state.

~
if (grants_reg[DINV]) begin
    cc_update_ldst_valid      <= 1'b1;
    cc_update_ldst_way        <= way_matched_reg;
    cc_update_ldst_address    <= granted_address;
    cc_update_ldst_privileges <= dcache_privileges_t'(0);
    cc_update_ldst_command    <= CC_UPDATE_INFO_DATA;
end
~

In these lines of code, the CC sends the LDST unit the response to the cache line invalidation request, with no privileges granted.

In the WAIT RESP state, the CC waits for a response from the memory. When the memory response is available, the CC returns to the IDLE state. If the request is an INSTR request, the CC returns the memory contents to the TC. Otherwise, if the request is a LOAD or a STORE, the CC updates the LDST unit by executing the lines of code below:

~
end else if (grants_reg[LOAD] | grants_reg[STORE]) begin
    cc_update_ldst_valid       <= 1'b1;
    cc_update_ldst_way         <= counter_way[granted_address.index];
    cc_update_ldst_address     <= granted_address;
    cc_update_ldst_privileges  <= dcache_privileges_t'(2'b11);
    cc_update_ldst_store_value <= m2n_response_data_swap;
    cc_update_ldst_command     <= ways_full ? CC_REPLACEMENT : CC_UPDATE_INFO_DATA;
~

The CC returns to the LDST unit the way in which the request has to be executed, the READ and WRITE privileges, and the data from memory, which has to be written if the request is a STORE.
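The state transitions described in this section can be summarized in a small, self-contained sketch. This is not the nu+ source: the module name, the port names and the choice to stay in SEND REQ while the memory is busy are assumptions made for illustration; only the three states and the conditions for moving between them come from the text above.

```systemverilog
// Hypothetical sketch of the CC state transitions (not the actual nu+ code).
module cc_fsm_sketch (
    input  logic clk,
    input  logic reset,
    input  logic request_pending,     // assumed: at least one request is granted
    input  logic request_executable,  // assumed: the granted request can be executed
    input  logic memory_available,    // assumed: the memory accepts a new request
    input  logic request_is_read,     // assumed: 1 = READ, 0 = WRITE
    input  logic response_valid,      // assumed: the memory response is ready
    output logic send_to_memory       // assumed: the request is issued in this cycle
);

    typedef enum logic [1:0] {IDLE, SEND_REQ, WAIT_RESP} state_t;
    state_t state;

    always_ff @(posedge clk, posedge reset) begin
        if (reset) begin
            state <= IDLE;
        end else begin
            case (state)
                IDLE: begin
                    if (request_pending)
                        state <= SEND_REQ;
                end
                SEND_REQ: begin
                    if (!request_executable) begin
                        state <= IDLE;           // nothing to send
                    end else if (memory_available) begin
                        if (request_is_read)
                            state <= WAIT_RESP;  // READ: wait for the data
                        else
                            state <= IDLE;       // WRITE: done once issued
                    end
                    // Assumption: otherwise stay here until the memory is available.
                end
                WAIT_RESP: begin
                    if (response_valid)
                        state <= IDLE;
                end
                default: state <= IDLE;
            endcase
        end
    end

    // The request is issued only when it is executable and the memory is available.
    assign send_to_memory = (state == SEND_REQ) && request_executable && memory_available;

endmodule
```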

Combinatorial section

First, there is a phase in which some signals are initialized.

In the IDLE state, the cache address on which a WRITE request operates is defined.

The SEND REQ state contains the logic that dequeues requests. If the request is executable and the memory is available, the dequeue signal of the REPLACEMENT, FLUSH, DINV and I/O write requests is asserted. Otherwise, if the request is not executable, the dequeue signal of the LOAD, STORE, FLUSH and DINV requests is asserted.

In the WAIT RESP state, once a response from memory is ready, the dequeue signal of the LOAD, STORE, I/O read and INSTR requests is asserted.
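As an illustration of the dequeue rules above, the sketch below models them for two request kinds only, STORE and FLUSH. All names and the state encoding are hypothetical, and gating each dequeue signal with a per-request grant bit is an assumption; the real CC drives one dequeue signal per queue, such as `cc_dequeue_store_request`.

```systemverilog
// Hypothetical sketch of the combinatorial dequeue logic for STORE and FLUSH.
module cc_dequeue_sketch (
    input  logic [1:0] state,               // assumed encoding, see localparams
    input  logic       request_executable,  // assumed name
    input  logic       memory_available,    // assumed name
    input  logic       response_valid,      // assumed name
    input  logic       granted_store,       // assumed: a STORE is being served
    input  logic       granted_flush,       // assumed: a FLUSH is being served
    output logic       dequeue_store,
    output logic       dequeue_flush
);

    localparam logic [1:0] SEND_REQ = 2'd1, WAIT_RESP = 2'd2;

    always_comb begin
        // Default values: nothing is dequeued.
        dequeue_store = 1'b0;
        dequeue_flush = 1'b0;

        case (state)
            SEND_REQ: begin
                if (request_executable && memory_available) begin
                    // WRITE requests complete as soon as they are issued.
                    dequeue_flush = granted_flush;
                end else if (!request_executable) begin
                    // A request that cannot be executed is removed from its queue.
                    dequeue_store = granted_store;
                    dequeue_flush = granted_flush;
                end
            end
            WAIT_RESP: begin
                // READ requests complete when the memory answers.
                if (response_valid)
                    dequeue_store = granted_store;
            end
            default: ;
        endcase
    end

endmodule
```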

IO, Instruction and Core Interface requests buffering

Every request has a valid bit. When this bit is high, the CC has received a request from the CI. In addition, two bits indicate whether there is a pending IO or INSTR request. All these bits are collected into a vector, which is used to schedule the requests. A component (described [here]) provides round-robin scheduling.

IO and INSTR requests are handled differently from Core Interface requests.
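For readers unfamiliar with round-robin scheduling over a request vector, here is a minimal sketch of the idea. It is not the component linked above: the module name, ports and parameter are hypothetical, and it assumes `N >= 2` and that the priority pointer advances on every grant.

```systemverilog
// Hypothetical round-robin arbiter sketch: one-hot grant over N request bits.
module rr_arbiter_sketch #(
    parameter int N = 4               // number of request lines (assumed, N >= 2)
) (
    input  logic         clk,
    input  logic         reset,
    input  logic [N-1:0] requests,    // one valid/pending bit per request kind
    output logic [N-1:0] grants       // one-hot, or all zero when nothing is pending
);

    localparam int W = $clog2(N);

    logic [W-1:0] last;               // index of the most recently granted request
    logic         found;

    always_comb begin
        grants = '0;
        found  = 1'b0;
        // Scan the requests starting just after the last winner;
        // the first pending request found gets the grant.
        for (int i = 1; i <= N; i++) begin
            if (!found && requests[(last + i) % N]) begin
                grants[(last + i) % N] = 1'b1;
                found = 1'b1;
            end
        end
    end

    always_ff @(posedge clk, posedge reset) begin
        if (reset) begin
            last <= W'(N - 1);        // so that request 0 is checked first
        end else begin
            for (int i = 0; i < N; i++)
                if (grants[i]) last <= W'(i);
        end
    end

endmodule
```

With this scheme a request kind that has just been served becomes the lowest priority on the next arbitration, so no pending request is starved.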

IO Map request

There are two queues of size 8:

- IO FIFO REQUEST

- IO FIFO RESPONSE

The format of the queue entries is defined by the following lines of code:

~
typedef struct packed {
    thread_id_t      thread;
    io_operation_t   operation;
    dcache_address_t address;
    register_t       data;
} io_fifo_t;
~

Requests are buffered in the IO FIFO REQUEST queue. When the IO FIFO REQUEST queue is full, the CC refuses further IO Map requests. Once a request has been processed, it is dequeued from IO FIFO REQUEST and the corresponding response is enqueued into the IO FIFO RESPONSE queue.
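The buffering behaviour described above (accept requests until the queue is full, then refuse) can be sketched with a generic synchronous FIFO. This is an illustration under stated assumptions, not the queue used in nu+: the module and port names are hypothetical, the entry is a plain `WIDTH`-bit vector instead of `io_fifo_t`, and `DEPTH` must be a power of two.

```systemverilog
// Hypothetical FIFO sketch with a full flag, as used to refuse new requests.
module io_fifo_sketch #(
    parameter int DEPTH = 8,          // queue size stated in the text (power of two)
    parameter int WIDTH = 32          // assumed entry width; the real entry is io_fifo_t
) (
    input  logic             clk,
    input  logic             reset,
    input  logic             enqueue,
    input  logic [WIDTH-1:0] data_in,
    input  logic             dequeue,
    output logic [WIDTH-1:0] data_out,
    output logic             empty,
    output logic             full
);

    localparam int AW = $clog2(DEPTH);

    logic [WIDTH-1:0] mem [DEPTH];
    logic [AW:0]      head, tail;     // one extra bit distinguishes full from empty

    assign empty    = (head == tail);
    assign full     = (head[AW-1:0] == tail[AW-1:0]) && (head[AW] != tail[AW]);
    assign data_out = mem[head[AW-1:0]];

    always_ff @(posedge clk, posedge reset) begin
        if (reset) begin
            head <= '0;
            tail <= '0;
        end else begin
            // A request arriving while the queue is full is ignored (refused).
            if (enqueue && !full) begin
                mem[tail[AW-1:0]] <= data_in;
                tail <= tail + 1'b1;
            end
            if (dequeue && !empty)
                head <= head + 1'b1;
        end
    end

endmodule
```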

Instruction miss request

INSTR requests are buffered in the INSTR FIFO queue, which has size 2. Each entry of the INSTR FIFO queue stores the address of the request. The response to a request is sent to the TC during the execution of the FSM, as in the lines of code below:

~
WAIT_RESP: begin
    if (m2n_response_valid) begin
        state <= IDLE;
        // handle req
        if (grants_reg[INSTR]) begin
            mem_instr_request_data_in <= m2n_response_data_swap;
            mem_instr_request_valid   <= 1'b1;
~

The m2n_response_data_swap register contains the data from memory, converted to xxx-endian if needed (TODO: ask Francesco which endianness we use).

Snoop managing

A cache hit is asserted if we have read or write privileges on the requested address and the tag of the requested address matches an entry in the tag array.
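The hit condition above can be written as a small combinatorial sketch. The number of ways, the tag width and every name except the idea of a matched way are assumptions made for illustration; the actual snoop logic of the CC is not documented here.

```systemverilog
// Hypothetical sketch of the hit check: privileges AND tag match, per way.
module cc_hit_sketch #(
    parameter int WAYS      = 4,      // assumed associativity
    parameter int TAG_WIDTH = 20      // assumed tag width
) (
    input  logic [TAG_WIDTH-1:0]    request_tag,
    input  logic [TAG_WIDTH-1:0]    way_tag   [WAYS],  // tag array entries for the set
    input  logic                    can_read  [WAYS],  // read privilege per way
    input  logic                    can_write [WAYS],  // write privilege per way
    output logic                    hit,
    output logic [$clog2(WAYS)-1:0] way_matched
);

    localparam int WAY_BITS = $clog2(WAYS);

    always_comb begin
        hit         = 1'b0;
        way_matched = '0;
        for (int w = 0; w < WAYS; w++) begin
            // A way hits only if it holds the tag and grants some privilege.
            if ((can_read[w] || can_write[w]) && (way_tag[w] == request_tag)) begin
                hit         = 1'b1;
                way_matched = WAY_BITS'(w);
            end
        end
    end

endmodule
```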

snoop + way TODO: ask for more details on this part

Memory swap

This portion of code converts a vector of data from xxx-endian to xxx-endian (TODO: ask Francesco which endianness we use and what it converts to). For each vector of data there is an ENDSWAP flag: if it is asserted, the data format has to be converted.
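A conditional byte swap of this kind can be sketched as follows. The module name, port names and the `BYTES` parameter are hypothetical, and the sketch assumes that the whole vector is byte-reversed at once; the actual code may instead swap each word separately, and the source and target endianness are still to be confirmed as noted above.

```systemverilog
// Hypothetical sketch: reverse the byte order of a vector when endswap is set.
module endian_swap_sketch #(
    parameter int BYTES = 4           // assumed vector size in bytes
) (
    input  logic               endswap,   // corresponds to the ENDSWAP flag
    input  logic [BYTES*8-1:0] data_in,
    output logic [BYTES*8-1:0] data_out
);

    always_comb begin
        data_out = data_in;           // pass-through when no swap is needed
        if (endswap) begin
            for (int b = 0; b < BYTES; b++)
                data_out[b*8 +: 8] = data_in[(BYTES-1-b)*8 +: 8];
        end
    end

endmodule
```

For example, with `BYTES = 4` and `endswap` asserted, the input `32'h11223344` produces `32'h44332211`.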