L1 Cache Controller

Revision as of 11:07, 18 December 2017

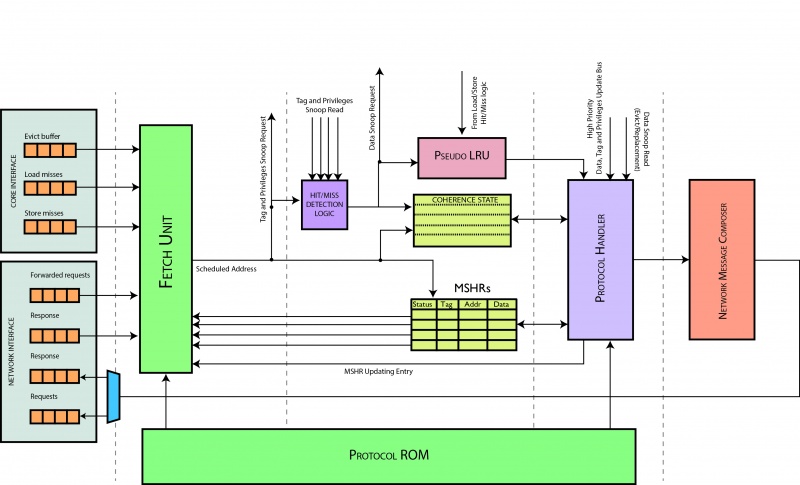

The cache controller consists of 4 stages, 3 of which are registered. Before describing each component in detail, we introduce how the stages interact.

- Stage 1. Its main purpose is to schedule one of the requests coming from the core (e.g. a cache miss) or from the network (e.g. a forwarded request). The arbiter's choice depends mainly on whether another request is already in the controller, on whether the network can transmit, and on whether the protocol can process the message. The latter requires examining the state of the controller's pending transactions.

- Stage 2. It mainly contains the register of pending core transactions (MSHR), which is queried through a lookup table by the arbiter in the previous stage and is modified by the next stage as the protocol evolves. It also contains a memory that stores the state of each cache line.

- Stage 3. This is by far the most complex stage, because it is where the protocol is queried: based on the scheduled request and its current state, the protocol generates all the signals needed to determine the next state of the request (by modifying the MSHR), of the cached data (by modifying the state and the data in the datapath's L1 cache), and any messages to be sent on the network.

- Stage 4. This is the simplest stage: its only purpose is to pack the messages that the controller has to send on the network. This stage is not registered and communicates directly with the network interface.

Note that the protocol is queried in both the first and the third stage, so it is treated as a cross-cutting element and described separately.

Before describing the stages in detail, here are the basic assumptions needed to understand the architectural choices:

- The cache controller can serve another request on the same address only if the previous one has left the controller. When a request is issued (from either the NI or the CI), it can modify an MSHR entry only two clock cycles later, so the next request could read a stale entry; to avoid this, the Fetch Unit does not schedule two consecutive requests on the same address.

- Transactions always involve whole memory blocks.

- Two requests operating on the same set cannot be handled at the same time (presumably because, if both generated a replacement, a core would have more than one outstanding request).

- Only core requests can allocate an MSHR entry, specifically loads, stores and writebacks.

- States are stored in the cache only if they are stable; otherwise they are kept in the MSHR.


Stage 1

Stage 1 is responsible for issuing requests to the controller. A request can be a load miss, store miss, flush or replacement request from the local core, or a coherence forwarded request or response from the network interface.

MSHR Signals

To find out whether a request for the same block has already been issued and is pending, the tag and set of each request type are sent to the MSHR. The data returned by the MSHR for a given request class are valid if and only if the corresponding hit signal is asserted. Note that the MSHR is located in Stage 2. Here is the code for the load miss class:

// Signals to MSHR
assign cc1_mshr_lookup_tag[MSHR_LOOKUP_PORT_LOAD] = ci_load_request_address.tag;
assign cc1_mshr_lookup_set[MSHR_LOOKUP_PORT_LOAD] = ci_load_request_address.index;
// Signals from MSHR
assign load_mshr_hit   = cc2_mshr_lookup_hit[MSHR_LOOKUP_PORT_LOAD];
assign load_mshr_index = cc2_mshr_lookup_index[MSHR_LOOKUP_PORT_LOAD];
assign load_mshr       = cc2_mshr_lookup_entry_info[MSHR_LOOKUP_PORT_LOAD];

Stall Protocol ROMs

To comply with the coherence protocol, every incoming request for a block whose coherence state is non-stable has to be stalled. This is done through a set of protocol ROMs (one for each request type); when a ROM's output is asserted, it stalls the issue of the corresponding request. For example, this happens when a block is in state SM_A and a Fwd_GetS, Fwd_GetM, recall, flush, store or replacement request for the same block is received. To compute this signal, the protocol ROM needs the request type and the current state of the block. Here is the stall logic:

stall_protocol_rom load_stall_protocol_rom (
    .current_request ( load            ),
    .current_state   ( load_mshr.state ),
    .pr_output_stall ( stall_load      )
);

stall_protocol_rom store_stall_protocol_rom (
    .current_request ( store            ),
    .current_state   ( store_mshr.state ),
    .pr_output_stall ( stall_store      )
);

stall_protocol_rom flush_stall_protocol_rom (
    .current_request ( flush            ),
    .current_state   ( flush_mshr.state ),
    .pr_output_stall ( stall_flush      )
);

stall_protocol_rom replacement_stall_protocol_rom (
    .current_request ( replacement            ),
    .current_state   ( replacement_mshr.state ),
    .pr_output_stall ( stall_replacement      )
);

stall_protocol_rom forwarded_stall_protocol_rom (
    .current_request ( fwd_2_creq( ni_forwarded_request.packet_type ) ),
    .current_state   ( forwarded_request_mshr.state                   ),
    .pr_output_stall ( stall_forwarded_request                        )
);

Note that response messages do not need to be stalled, so no stall logic is required for them.
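To make the table-lookup nature of these ROMs concrete, here is a small behavioral sketch in Python (not the RTL). It encodes only the SM_A example above; `STALL_TABLE` and `stall_protocol_rom` are illustrative names, and treating unlisted (request, state) pairs as "do not stall" is an assumption of the sketch.

```python
# Python behavioral sketch of a stall protocol ROM (names are hypothetical).
# Only the SM_A row described in the text is encoded; a complete ROM would
# have one row for every non-stable state of the protocol.
STALL_TABLE = {
    "SM_A": {"Fwd_GetS", "Fwd_GetM", "recall", "flush", "store", "replacement"},
}


def stall_protocol_rom(current_request: str, current_state: str) -> bool:
    """Return True if `current_request` must be stalled in `current_state`.

    Pairs missing from STALL_TABLE are treated as "do not stall",
    which is an assumption of this sketch.
    """
    return current_request in STALL_TABLE.get(current_state, set())


if __name__ == "__main__":
    print(stall_protocol_rom("store", "SM_A"))  # True
    print(stall_protocol_rom("load", "SM_A"))   # False: load is not in the SM_A row
```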

Request Issue Signals

To issue a generic request, all of the following conditions must hold:

- the request is valid;

- the MSHR lookup finds no matching entry, or the entry found is not valid, or the entry found is valid, refers to the same block (same tag) and the request has not been stalled by the protocol ROM (see Stall Protocol ROMs);

- the following stages are not serving a request on the same set (see the assumptions above);

- the network interface is available.

assign can_issue_load = ci_load_request_valid &&
    ( !load_mshr_hit ||
      ( load_mshr_hit && !load_mshr.valid ) ||
      ( load_mshr_hit && load_mshr.valid && load_mshr.address.tag == ci_load_request_address.tag && !stall_load ) ) &&
    !( ( cc2_pending_valid && ( ci_load_request_address.index == cc2_pending_address.index ) ) ||
       ( cc3_pending_valid && ( ci_load_request_address.index == cc3_pending_address.index ) ) ) &&
    ni_request_network_available;

Response messages do not need the MSHR conditions, because they never use the MSHR (they never wait for subsequent events) and are never stalled. The same holds for flush requests, even though they can be stalled by their stall protocol ROM. Note that, unlike the directory controller's TSHR, there is no check on MSHR occupancy. Since only one request per thread can be issued and only threads can allocate an MSHR entry, sizing the MSHR to the number of threads guarantees that it is never full, which makes an occupancy check unnecessary.

Finally, a replacement request can be pre-allocated in the MSHR (see MSHR Update Logic). To make sure this request is issued before any other request on the same block, an additional condition is added:

assign can_issue_replacement =
    ...
    ( !replacement_mshr_hit ||
      ( replacement_mshr_hit && !replacement_mshr.valid ) ||
      ( replacement_mshr_hit && replacement_mshr.valid && ( !stall_replacement || replacement_mshr.waiting_for_eviction ) ) )
    ...
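The issue conditions can be checked in isolation with a Python behavioral model that mirrors the boolean structure of `can_issue_load` and the MSHR term of `can_issue_replacement`. The `MshrLookup` class, the argument names and the use of `None` for an idle stage are hypothetical; the terms elided with `...` in the replacement condition are left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MshrLookup:
    """Result of one MSHR lookup port (hypothetical field names)."""
    hit: bool
    valid: bool
    tag: int
    waiting_for_eviction: bool = False


def can_issue_load(request_valid: bool, request_tag: int, request_set: int,
                   mshr: MshrLookup, stall_load: bool,
                   cc2_pending_set: Optional[int], cc3_pending_set: Optional[int],
                   network_available: bool) -> bool:
    """Mirror of can_issue_load; a pending set of None means that stage is idle."""
    mshr_ok = (not mshr.hit
               or (mshr.hit and not mshr.valid)
               or (mshr.hit and mshr.valid and mshr.tag == request_tag and not stall_load))
    # A later stage (2 or 3) is already working on the same set.
    set_busy = request_set in (cc2_pending_set, cc3_pending_set)
    return request_valid and mshr_ok and not set_busy and network_available


def replacement_mshr_ok(mshr: MshrLookup, stall_replacement: bool) -> bool:
    """MSHR term of can_issue_replacement: a pre-allocated entry
    (waiting_for_eviction set) may issue even if the stall ROM says stall."""
    return (not mshr.hit
            or (mshr.hit and not mshr.valid)
            or (mshr.hit and mshr.valid
                and (not stall_replacement or mshr.waiting_for_eviction)))
```

The last case is what lets a pre-allocated replacement overtake any other request on the same block.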

Requests Scheduler

Once the issue conditions have been checked, two or more requests may be ready at the same time, so a scheduler is needed. Each request type has a fixed priority, in the following order (highest first):

- flush

- replacement

- store miss

- coherence response

- coherence forwarded request

- load miss

Once a request type has been scheduled, this block drives the output signals to the second stage accordingly.

From the considerations above, flush and writeback requests have a higher priority than the other requests, so that requests from other threads are served with data that are not stale.
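A fixed-priority scheduler simply grants the first ready request in the order listed above. The following Python sketch assumes one boolean "can issue" flag per request class; the class labels and the `schedule` function are hypothetical names, not taken from the RTL.

```python
from typing import Dict, Optional

# Request classes in the fixed priority order listed above, highest first.
# The string labels are hypothetical names used only in this sketch.
PRIORITY = (
    "flush",
    "replacement",
    "store_miss",
    "coherence_response",
    "coherence_forwarded_request",
    "load_miss",
)


def schedule(can_issue: Dict[str, bool]) -> Optional[str]:
    """Fixed-priority arbiter: return the highest-priority ready class, or None."""
    for request_class in PRIORITY:
        if can_issue.get(request_class, False):
            return request_class
    return None


if __name__ == "__main__":
    print(schedule({"load_miss": True, "store_miss": True}))  # store_miss
```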

Stage 2

Stage 2 is responsible for managing the L1 cache and the MSHR, and for forwarding signals from Stage 1 to Stage 3. It contains the L1 coherence cache (the L1 data cache is in the load/store unit) and all the related logic for managing cache hits and block replacement. Blocks are replaced according to an LRU (Least Recently Used) policy.

This module receives signals from Stage 3, to update the MSHR and the coherence cache once a request has been processed, and from the load/store unit, to update the LRU state every time the core accesses a block.
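As a rough illustration of the LRU bookkeeping, the Python sketch below keeps one recency list per set: every access reported by the load/store unit moves the way to the front, and the way at the back is the one proposed for replacement. The text does not say whether the hardware uses true LRU or an approximation such as tree pseudo-LRU; this sketch models true LRU, and `LruSet` is a hypothetical name.

```python
class LruSet:
    """True-LRU state for one cache set (hypothetical Python sketch, not the RTL LRU unit)."""

    def __init__(self, num_ways: int) -> None:
        # order[0] is the most recently used way, order[-1] the least recently used.
        self.order = list(range(num_ways))

    def touch(self, way: int) -> None:
        """Record an access to `way`, e.g. a core access reported by the load/store unit."""
        self.order.remove(way)
        self.order.insert(0, way)

    def lru_way(self) -> int:
        """Way that would be proposed as the victim on a miss."""
        return self.order[-1]


if __name__ == "__main__":
    lru = LruSet(4)
    lru.touch(3)
    lru.touch(0)
    print(lru.lru_way())  # 2
```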

Hit/miss logic

Cache lookup is handled in an unusual way, so its operation deserves a closer description.

The lookup is split into two phases, performed by:

- load/store unit;

- cache controller (stage 2).

The load/store unit performs the first phase using only the request's set: it returns the tags stored in every way of that set, together with their privilege bits. This first phase takes place while the request is in cache controller Stage 1. The second phase is performed by cache controller Stage 2 using only the request's tag, searching the array provided by the load/store unit. If a block with the same tag is found and is valid (validity is checked through the privilege bits), the hit signal is asserted and the way index of the block is passed to Stage 3, which uses it to update the coherence data of that block.

If no block has the same tag as the request, the hit signal stays low and Stage 3 uses the way index provided by the LRU unit to replace that way (see Replacement Logic).

...
// Second phase lookup
// The result of this lookup is a one-hot encoded array
assign snoop_tag_way_oh[dcache_way] = ( ldst_snoop_tag[dcache_way] == cc1_request_address.tag ) &
    ( ldst_snoop_privileges[dcache_way].can_read | ldst_snoop_privileges[dcache_way].can_write );
...
assign snoop_tag_hit = |snoop_tag_way_oh;

Note that when the request arrives in Stage 2 the way index is not yet known, so the coherence cache data are sent to Stage 3 as an array (one entry per way). Stage 3 knows which entry to use because, in the meantime, the hit/miss logic computes the correct way index and passes it to Stage 3.

The lookup is split in this way to reduce the latency of the whole process: since the set-indexed read overlaps with Stage 1, Stage 2 only has to compare tags.
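The two phases can be modeled in Python as follows: phase 1 returns every way of the set without comparing tags, and phase 2 builds the one-hot match vector, ANDing the tag comparison with the privilege bits as in `snoop_tag_way_oh`. The nested-list layout `[set][way]` and the function names are assumptions of this sketch.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Privileges:
    can_read: bool
    can_write: bool


def phase1_snoop(tag_array: List[List[int]], priv_array: List[List[Privileges]],
                 set_index: int) -> Tuple[List[int], List[Privileges]]:
    """Phase 1 (load/store unit, hypothetical model): read all ways of the set."""
    return tag_array[set_index], priv_array[set_index]


def phase2_match(snoop_tags: List[int], snoop_privs: List[Privileges],
                 request_tag: int) -> Tuple[List[int], bool, Optional[int]]:
    """Phase 2 (Stage 2): one-hot tag match, gated by the privilege bits."""
    way_oh = [int(tag == request_tag and (priv.can_read or priv.can_write))
              for tag, priv in zip(snoop_tags, snoop_privs)]
    hit = any(way_oh)
    # One-hot to index conversion; on a miss Stage 3 uses the LRU way instead.
    way_idx = way_oh.index(1) if hit else None
    return way_oh, hit, way_idx
```

A way whose tag matches but whose privilege bits are both clear does not produce a hit, which is how invalid blocks are filtered out.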

MSHR

The Miss Status Handling Register holds information about all the miss requests raised by the core that have not yet been completely served. As stated earlier, the MSHR has as many entries as there are threads, since only one request at a time can be in progress per thread. The MSHR must provide information combinationally to whoever queries it, and must also offer a way to modify its entries. For this reason, the MSHR can be split into a read part and a write part. The read part must provide the information to the other stages combinationally, so, although this is not typical of a standard MSHR, entries are read through a lookup table. An MSHR entry contains the following information:

| Valid | State | Address | Thread Id | Data | Ack Count | Waiting For Eviction | Wakeup Thread |
|---|---|---|---|---|---|---|---|

- Valid: indicates whether the entry is in use.

- State: the current state of the request with respect to the coherence protocol, used both to …

- Address: the memory address the request refers to.

- Thread ID: the ID of the thread that raised the request.

- Data: the data accompanying the request.

- Ack count: the number of acks the request still has to receive.

- Waiting for eviction: asserted if an eviction must be performed on the request's address; meaningful only when the valid bit is also asserted.

- Wakeup Thread: determines whether the thread must be woken up at the end of the transaction.

The code below shows where an entry is read. This read logic is replicated for each lookup port (six in our case).

generate
    for ( i = 0; i < `MSHR_SIZE; i++ ) begin : lookup_logic
        assign hit_map[i] = /*( mshr_entries[i].address.tag == tag ) &&*/ ( mshr_entries[i].address.index == index ) && mshr_entries[i].valid;
    end
endgenerate

oh_to_idx #(
    .NUM_SIGNALS( `MSHR_SIZE )
) u_oh_to_idx (
    .index  ( selected_index ),
    .one_hot( hit_map        )
);

assign mshr_entry = mshr_entries[selected_index];

Assuming the MSHR is read-first, the address of every entry is checked: if a valid request is already pending, the corresponding bit of the hit map is asserted and the matching entry is sent to the output. Note that in the code above only the set index is compared; the tag comparison is commented out.

For the write part, the ID of the entry to update is specified together with the information to write. Since the MSHR is read-first, the updated information becomes available on the clock cycle after the update. Finally, note that the entry data have been separated from the other MSHR fields to avoid burdening the lookup process, since the data are not needed for scheduling decisions. These data are stored in the "mshr_data_sram" memory bank.
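A cycle-level Python sketch of the behavior described above: lookups are combinational and match on the set index and valid bit (as in the generate loop), while writes are buffered and only become visible after `clock()`, reproducing the read-first timing. Class and method names, including the `empty_index` helper that stands in for the empty-entry index used by Stage 3, are hypothetical; the Data field is omitted because it lives in `mshr_data_sram`.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class MshrEntry:
    # Control fields only (hypothetical model); block data live in mshr_data_sram.
    valid: bool = False
    state: str = "I"
    tag: int = 0
    index: int = 0
    thread_id: int = 0
    ack_count: int = 0
    waiting_for_eviction: bool = False
    wakeup_thread: bool = False


class Mshr:
    """Sketch of the MSHR: combinational lookup, read-first writes."""

    def __init__(self, num_threads: int) -> None:
        # One entry per thread, as stated in the text.
        self.entries: List[MshrEntry] = [MshrEntry() for _ in range(num_threads)]
        self._pending: List[Tuple[int, MshrEntry]] = []

    def lookup(self, index: int) -> Tuple[bool, Optional[int], Optional[MshrEntry]]:
        # Same match condition as the generate loop: set index and valid bit only.
        hit_map = [e.valid and e.index == index for e in self.entries]
        if not any(hit_map):
            return False, None, None
        i = hit_map.index(True)
        return True, i, self.entries[i]

    def empty_index(self) -> Optional[int]:
        """First free entry, or None if every entry is valid."""
        return next((i for i, e in enumerate(self.entries) if not e.valid), None)

    def write(self, entry_id: int, entry: MshrEntry) -> None:
        # Read-first: lookups in the current cycle still see the old entry.
        self._pending.append((entry_id, entry))

    def clock(self) -> None:
        """Clock edge: commit the writes issued during the cycle."""
        for entry_id, entry in self._pending:
            self.entries[entry_id] = entry
        self._pending.clear()
```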

Stage 3

Stage 3 is responsible for actually executing requests. Once a request has been processed, this stage sends signals to the units in the previous stages so that they can update their data accordingly.

In particular, this stage drives the datapath to perform the following functions:

- block replacement evaluation;

- MSHR update;

- cache memory update (both data and coherence info);

- preparation of outgoing coherence messages.

Current State Selector

Before a request is processed by the coherence protocol, the correct source of the cache block state has to be chosen. The state can be retrieved from:

- the MSHR, if it holds a valid entry for the block (non-stable state);

- the coherence data cache, if the block is present in L1 (stable state).

If neither condition is met, the block must be in state I, because it has never been read or modified.

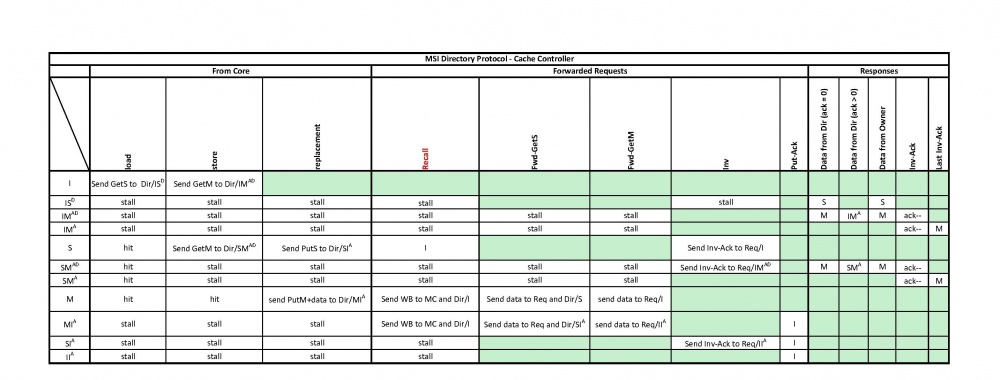
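The selection can be summarized with a small Python function. Checking the MSHR before the coherence data cache is an assumption of this sketch, based on the rule that only stable states are stored in the cache; the function and argument names are hypothetical.

```python
def select_current_state(mshr_hit: bool, mshr_valid: bool, mshr_state: str,
                         cache_hit: bool, cache_state: str) -> str:
    """Pick the source of the block's current coherence state (hypothetical sketch)."""
    # Non-stable states are kept in the MSHR, so a valid MSHR entry is checked first.
    if mshr_hit and mshr_valid:
        return mshr_state
    # Stable states are kept in the coherence data cache.
    if cache_hit:
        return cache_state
    # The block is in neither structure, so it is Invalid.
    return "I"
```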

Protocol ROM

This module implements the coherence protocol as represented in the figure below. The protocol is implemented as a separate ROM to ease future optimizations or changes. It takes as input the current state and the request type and computes the next actions.

The coherence protocol is MSI, with some changes due to the inclusivity of the directory. In particular, a new type of forwarded request, recall, has been added; the directory controller sends it when a block has to be evicted from the L2 cache. A recall is answered with a writeback response to the memory controller only when the block is in state M. Note that the writeback response is also sent to the directory controller, as a form of acknowledgement.

Furthermore, another type of request, flush (not shown in the figure), has been added; it simply sends updated data to memory. It also generates a writeback response, but this response is directed only to the memory controller and does not change the block's coherence state, whereas a writeback response to a recall invalidates the block.
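To contrast the two writeback cases, the Python table below encodes only the recall and flush behavior described above. It is a hypothetical fragment, not the actual ROM: the flush row is shown from state M only as an example, since the text states just that a flush leaves the coherence state unchanged, and any other pair raises `NotImplementedError`.

```python
from typing import Tuple

# Hypothetical ROM fragment:
# (request, current state) -> (next state, destinations of the writeback response).
ROM_FRAGMENT = {
    # Recall on a dirty block: write back to memory, notify the directory, invalidate.
    ("recall", "M"): ("I", ("memory_controller", "directory_controller")),
    # Flush: write back to memory only; the coherence state does not change.
    ("flush", "M"): ("M", ("memory_controller",)),
}


def protocol_rom_fragment(request: str, state: str) -> Tuple[str, Tuple[str, ...]]:
    """Look up next state and writeback destinations; other pairs are not modeled."""
    try:
        return ROM_FRAGMENT[(request, state)]
    except KeyError:
        raise NotImplementedError(f"({request}, {state}) is not modeled in this sketch") from None
```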

Replacement Logic

A cache block replacement is required when a new block has to be stored in a full L1 cache set. To do so, a block in the L1 cache has to be chosen as the victim. A replacement is issued only if the victim block is valid; validity is derived from the block's privilege bits. These privilege bits (one set per way) come from Stage 2, which in turn received them from the load/store unit. The index of the way to replace is provided by the LRU unit in Stage 2, which selects the least recently used way.

replaced_way_valid = cc2_request_snoop_privileges[cc2_request_lru_way_idx].can_read | cc2_request_snoop_privileges[cc2_request_lru_way_idx].can_write;

Next, the address of the replaced way has to be computed. Its tag is provided by the tag cache in the load/store unit (through Stage 2), while the index is taken from the request, since the victim belongs to the same set and the tag cache does not store index bits. Its coherence state is provided by the coherence data cache in Stage 2. The offset is not needed because the entire cache block is replaced, so it is set to all 0s.

replaced_way_address.tag    = cc2_request_snoop_tag[cc2_request_lru_way_idx];
replaced_way_address.index  = cc2_request_address.index;
replaced_way_address.offset = {`DCACHE_OFFSET_LENGTH{1'b0}};
replaced_way_state          = cc2_request_coherence_states[cc2_request_lru_way_idx];

Finally, a replacement request has to be issued if all of the following hold:

- the protocol ROM requests that data be written to the cache;

- the requested block is not present in the L1 cache (so the update must be a block allocation);

- the replaced block is valid.

do_replacement = pr_output.write_data_on_cache && !cc2_request_snoop_hit && replaced_way_valid;
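The replacement logic above can be mirrored in Python with the address packed as tag | index | offset. The field widths `OFFSET_BITS` and `INDEX_BITS` are hypothetical placeholders (the RTL uses parameters such as `DCACHE_OFFSET_LENGTH`), and privileges are modeled as `(can_read, can_write)` tuples.

```python
from typing import List, Tuple

# Hypothetical field widths; the real ones come from the design parameters.
OFFSET_BITS = 6
INDEX_BITS = 5


def replaced_way_address(snoop_tags: List[int], request_index: int, lru_way: int) -> int:
    """Victim address: tag from the snooped tag array, index from the request, offset 0."""
    tag = snoop_tags[lru_way]
    return (tag << (INDEX_BITS + OFFSET_BITS)) | (request_index << OFFSET_BITS)


def do_replacement(write_data_on_cache: bool, snoop_hit: bool,
                   snoop_privs: List[Tuple[bool, bool]], lru_way: int) -> bool:
    """Evict only when allocating a new block into a way that holds a valid block."""
    can_read, can_write = snoop_privs[lru_way]
    replaced_way_valid = can_read or can_write
    return write_data_on_cache and not snoop_hit and replaced_way_valid
```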

MSHR Update Logic

The MSHR can be updated in three ways:

- entry allocation;

- entry deallocation;

- entry update.

The MSHR stores data for cache lines whose coherence transactions are pending, that is, lines in a non-stable state. So an entry is allocated every time a cache line moves to a non-stable state; conversely, it is deallocated when the line enters a stable state. Finally, an entry is updated when some of its fields change while the line's state is still non-stable. Each case is signalled by a dedicated output of the protocol ROM.

cc3_update_mshr_en = ( pr_output.allocate_mshr_entry || pr_output.update_mshr_entry || pr_output.deallocate_mshr_entry );

The MSHR update data depend on whether a replacement is required, that is, whether do_replacement is asserted. Without a replacement, an MSHR entry is allocated or updated according to the protocol ROM signals; with a replacement, the data computed by the Replacement Logic are used to allocate the entry. More precisely, when do_replacement is asserted an MSHR entry is pre-allocated; otherwise the information about the replaced way computed by the Replacement Logic would be lost. So that Stage 1 knows the entry is only a pre-allocation (the MSHR normally holds blocks whose coherence transactions are already pending, while here the transaction has not started yet), the waiting_for_eviction bit is asserted (see Request Issue Signals).

Note that if the update is an entry allocation, the index of an empty entry is provided directly by the MSHR (through Stage 2). At this point an empty MSHR entry is guaranteed to exist, since the MSHR is sized to the number of threads (see Request Issue Signals). If the operation is an update or a deallocation, the index comes from Stage 1 (through Stage 2), where the MSHR is queried (see MSHR Signals) for the entry associated with the current request.

cc3_update_mshr_index = cc2_request_mshr_hit ? cc2_request_mshr_index : cc2_request_mshr_empty_index;
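A Python sketch of the Stage 3 MSHR update control: `mshr_update_control` mirrors the two assignments above, while `mshr_entry_fields` illustrates the choice between replacement data (pre-allocation with `waiting_for_eviction` set) and protocol-driven data. The exact fields written in each case, and all names, are assumptions of this sketch, not taken from the RTL.

```python
from typing import Dict, Tuple


def mshr_update_control(allocate: bool, update: bool, deallocate: bool,
                        mshr_hit: bool, mshr_index: int,
                        mshr_empty_index: int) -> Tuple[bool, int]:
    """Return (cc3_update_mshr_en, cc3_update_mshr_index)."""
    update_en = allocate or update or deallocate
    # Updates and deallocations target the entry found by the Stage 1 lookup;
    # allocations use the empty entry reported by the MSHR.
    index = mshr_index if mshr_hit else mshr_empty_index
    return update_en, index


def mshr_entry_fields(do_replacement: bool, deallocate: bool,
                      replaced_address: int, replaced_state: str,
                      request_address: int, next_state: str) -> Dict[str, object]:
    """Hypothetical field selection for the entry written to the MSHR."""
    if do_replacement:
        # Pre-allocation: keep the victim's data so it is not lost, and mark
        # the entry so Stage 1 knows the eviction has not started yet.
        return {"valid": True, "address": replaced_address,
                "state": replaced_state, "waiting_for_eviction": True}
    return {"valid": not deallocate, "address": request_address,
            "state": next_state, "waiting_for_eviction": False}
```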

Cache Update Logic

Both the data cache and the coherence cache can be updated once a coherence transaction has been processed. The data cache update depends on whether a replacement occurs: if so, the CC_REPLACEMENT command is issued to the load/store unit, which then prepares the block for eviction. Otherwise the cache block is updated: if only the privileges change, the CC_UPDATE_INFO command is issued; if both the new block and its privileges have to be written to the L1 cache, CC_UPDATE_INFO_DATA is issued.

// Data cache signals
assign cc3_update_ldst_command = do_replacement ? CC_REPLACEMENT : ( pr_output.write_data_on_cache ? CC_UPDATE_INFO_DATA : CC_UPDATE_INFO );
assign cc3_update_ldst_way     = cc2_request_snoop_hit ? cc2_request_snoop_way_idx : cc2_request_lru_way_idx;
...
// Coherence cache signals
assign cc3_update_coherence_state_index = cc2_request_address.index;
assign cc3_update_coherence_state_way   = cc2_request_snoop_hit ? cc2_request_snoop_way_idx : cc2_request_lru_way_idx;
assign cc3_update_coherence_state_entry = pr_output.next_state;

Note how the way to update is selected: if the block is already present in the L1 cache, its way index is used; otherwise the least recently used way is used, which is the replacement case.

The data cache is updated every time the privileges of a block already present in the L1 cache have to change, or when a new block is received and has to be written along with its privileges.

... cc3_update_ldst_valid = ( pr_output.update_privileges && cc2_request_snoop_hit ) || pr_output.write_data_on_cache; ...

The coherence cache is updated when the block's next state is stable (for pending requests, the MSHR holds the state) and there is a cache hit. On a cache miss, it is updated only if the protocol ROM also requests that a new data block be written to the L1 cache.

... cc3_update_coherence_state_en = ( pr_output.next_state_is_stable && cc2_request_snoop_hit ) || ( pr_output.next_state_is_stable && !cc2_request_snoop_hit && pr_output.write_data_on_cache ); ...

Furthermore, if the request is a forwarded coherence request, the L1 cache data are forwarded to the message generator in Stage 4 to be sent to the requestor.

assign cc3_snoop_data_valid = cc2_request_valid && pr_output.send_data_from_cache;
assign cc3_snoop_data_set   = cc2_request_address.index;
assign cc3_snoop_data_way   = cc2_request_snoop_way_idx;
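The cache update signals of this stage can be collected into one Python function for a quick sanity check. Command names match the text; the function and argument names are hypothetical, and the coherence enable is written in a simplified but logically equivalent form.

```python
from typing import Dict, Optional

CC_REPLACEMENT = "CC_REPLACEMENT"
CC_UPDATE_INFO = "CC_UPDATE_INFO"
CC_UPDATE_INFO_DATA = "CC_UPDATE_INFO_DATA"


def cache_update(do_replacement: bool, write_data_on_cache: bool,
                 update_privileges: bool, next_state_is_stable: bool,
                 snoop_hit: bool, snoop_way_idx: Optional[int],
                 lru_way_idx: int) -> Dict[str, object]:
    """Hypothetical Python mirror of the Stage 3 cache update signals."""
    if do_replacement:
        command = CC_REPLACEMENT
    elif write_data_on_cache:
        command = CC_UPDATE_INFO_DATA
    else:
        command = CC_UPDATE_INFO
    # Existing block: use its way; otherwise the LRU (victim) way.
    way = snoop_way_idx if snoop_hit else lru_way_idx
    ldst_valid = (update_privileges and snoop_hit) or write_data_on_cache
    # (stable && hit) || (stable && !hit && write) simplifies to stable && (hit || write).
    coherence_state_en = next_state_is_stable and (snoop_hit or write_data_on_cache)
    return {"command": command, "way": way,
            "ldst_valid": ldst_valid, "coherence_state_en": coherence_state_en}
```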

Stage 4

This stage formats a request or response message for the network interface whenever one is available. Messages are formatted according to the coherence protocol.